What matters for consciousness is not what you do, but how you do it.

While atmospheric turbulence is a familiar culprit behind rough flights, the chaotic movement of turbulent flows remains an unsolved problem in physics. To gain insight into the system, a team of researchers used explainable AI to pinpoint the most important regions in a turbulent flow, according to a Nature Communications study led by the University of Michigan and the Universitat Politècnica de València.

A clearer understanding of turbulence could improve forecasting, helping pilots navigate around turbulent areas to avoid passenger injuries or structural damage. It could also help engineers manipulate turbulence, dialing it up to aid industrial mixing, as in water treatment, or dialing it down to improve fuel efficiency in vehicles.

“For more than a century, turbulence research has struggled with equations too complex to solve, experiments too difficult to perform, and computers too weak to simulate reality. Artificial Intelligence has now given us a new tool to confront this challenge, leading to a breakthrough with profound practical implications,” said Sergio Hoyas, a professor of aerospace engineering at the Universitat Politècnica de València and co-author of the study.

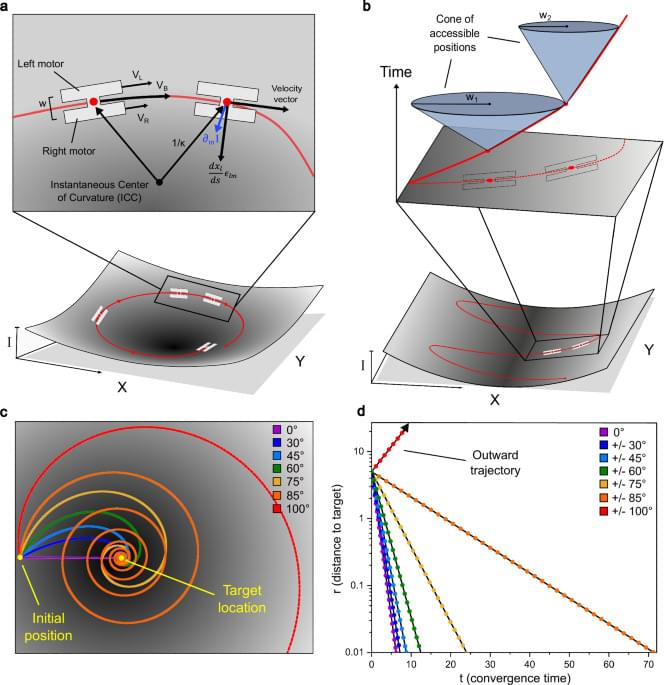

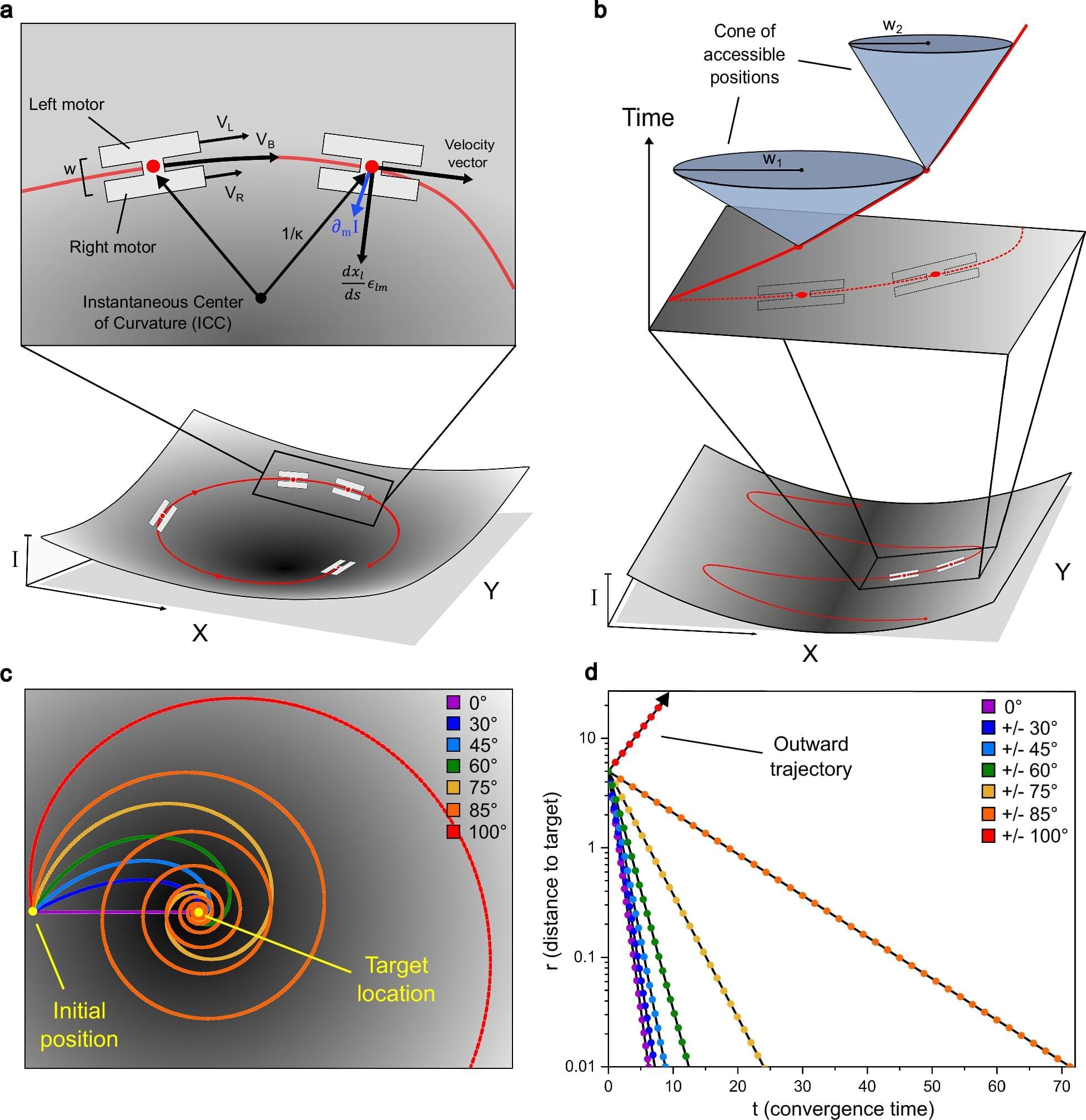

Not metaphorically—literally. The light intensity field becomes an artificial “gravity,” and the robot’s trajectory becomes a null geodesic, the same path light takes in warped spacetime.

By calculating the robot’s “energy” and “angular momentum” (just like planetary orbits), they mathematically prove: robots starting within 90 degrees of a target will converge exponentially, every time. No simulations or wishful thinking—it’s a theorem.

They use the Schwarz-Christoffel transformation (a conformal-mapping tool from complex analysis) to “unfold” a maze onto a flat rectangle, program a simple path, then “fold” it back. The result: a single, static light pattern that both guides robots and acts as invisible walls they can’t cross.

npj Robotics: Artificial spacetimes for reactive control of resource-limited robots. npj Robot 3, 39 (2025). https://doi.org/10.1038/s44182-025-00058-9

Not exactly a brain chip per se, but a bit of nanotech.

While companies like Elon Musk’s Neuralink are hard at work on brain-computer interfaces that require surgery to cut open the skull and insert a complex array of wires into a person’s head, a team of researchers at MIT has been developing a wireless electronic brain implant that they say could provide a non-invasive alternative and make the technology far easier to access.

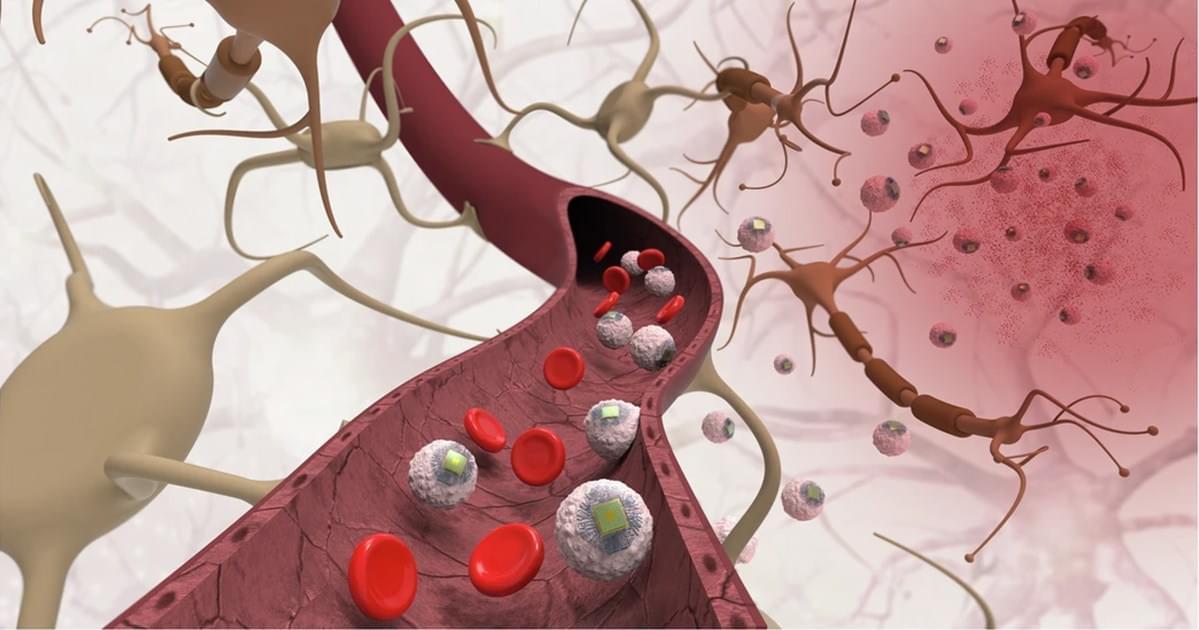

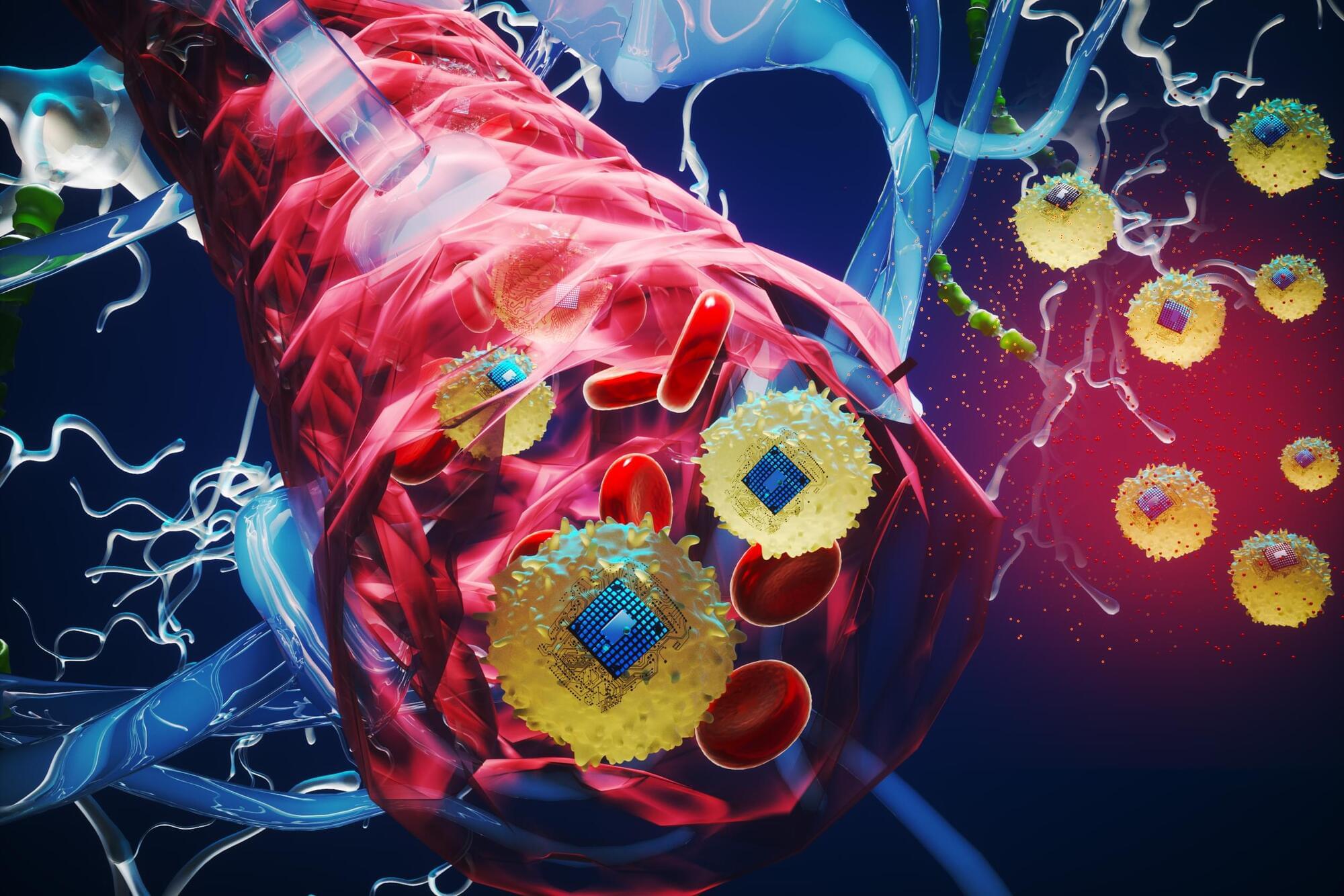

They describe the system, called Circulatronics, as more of a treatment platform than a one-off brain chip. Working with researchers from Wellesley College and Harvard University, the MIT team recently released a paper on the new technology, which they describe as an autonomous bioelectronic implant.

As New Atlas points out, the Circulatronics platform starts with an injectable swarm of subcellular-sized wireless electronic devices, or “SWEDs,” which can travel into inflamed regions of the patient’s brain after being injected into the bloodstream. They do so by fusing with living immune cells, called monocytes, forming a sort of cellular cyborg.

Ever wondered what ancient languages sounded like?

Microrobots, tiny robots less than a millimeter in size, are useful in a variety of applications that require tasks to be completed at scales far too small for other tools, such as targeted drug delivery or micro-manufacturing. However, the researchers and engineers designing these robots have run into some limitations when it comes to navigation. A new study, published in Nature, details a novel solution to these limitations, and the results are promising.

The biggest problem when dealing with microrobots is the lack of space. Their tiny size limits the use of components needed for onboard computation, sensing and actuation, making traditional control methods hard to implement. As a result, microrobots can’t be as “smart” as their larger cousins.

Researchers have already tried to work around this limitation, and two methods in particular have been studied. One method of microrobot control uses external feedback from an auxiliary system, usually something like optical tweezers or electromagnetic fields. This has yielded precise and adaptable control of small numbers of microrobots, which is beneficial for complex, multi-step tasks or those requiring high accuracy, but scaling the method to control large numbers of independent microrobots has been less successful.

Lockheed Martin is partnering with Google Public Sector to integrate Google’s generative AI technologies, including the Gemini models, into its AI Factory.

Google’s AI tools will be introduced within Lockheed Martin’s secure, on-premises, air-gapped environments, making them accessible to personnel throughout the company.

In a move that could redefine the web, Google is testing AI-powered, UI-based answers for its AI mode.

Up until now, Google AI mode, which is an optional feature, has allowed you to interact with a large language model using text or images.

When you use Google AI mode, Google responds with AI-generated content that it scrapes from websites without their permission, and it includes a couple of links.

Microscopic bioelectronic devices could one day travel through the body’s circulatory system and autonomously self-implant in a target region of the brain. These “circulatronics” can be wirelessly powered to provide focused electrical stimulation to a precise region of the brain, which could be used to treat diseases like Alzheimer’s, multiple sclerosis, and cancer.