DeepSeek V4 to challenge OpenAI GPT and Anthropic Claude with coding breakthroughs


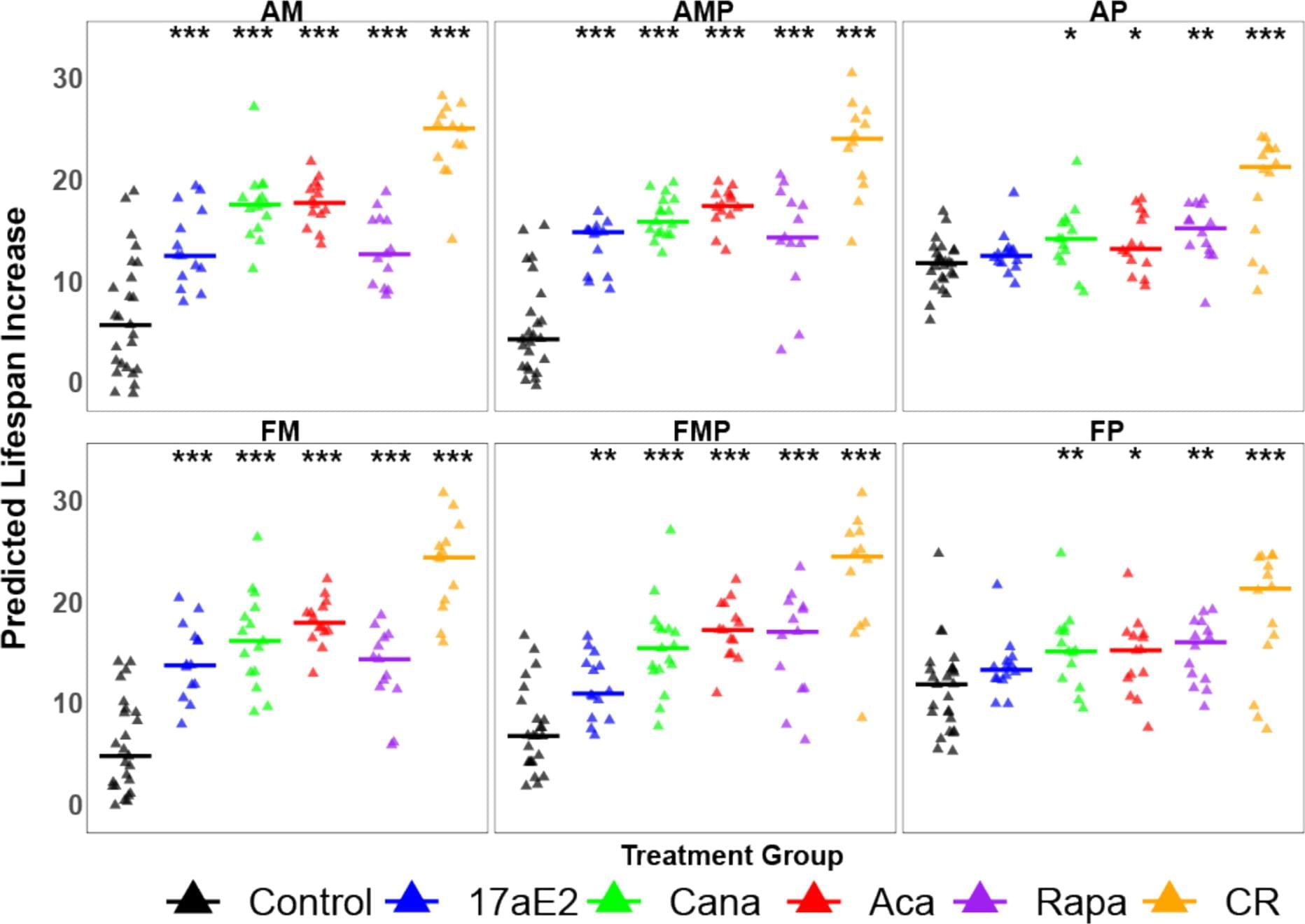

Discrimination of normal from slow-aging mice by plasma metabolomic and proteomic features

Tests that can predict whether a drug is likely to extend mouse lifespan could speed up the search for anti-aging drugs. We have applied a machine learning algorithm, XGBoost regression, to seek sets of plasma metabolites (n = 12,000) and peptides (n = 17,000) that can discriminate control mice from mice treated with one of five anti-aging interventions (n = 278 mice). When the model is trained on any four of these five interventions, it predicts significantly higher lifespan extension in mice exposed to the intervention which was not included in the training set. Plasma peptide data sets also succeed at this task. Models trained on drug-treated normal mice also discriminate long-lived mutant mice from their respective controls, and models trained on males can discriminate drug-treated from control females.
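The evaluation design above (train on four interventions, test on the fifth) is a leave-one-group-out scheme. Below is a minimal sketch of that scheme under stated assumptions. The data are hypothetical toy 2-D feature vectors standing in for the metabolite and peptide measurements. A simple nearest-centroid classifier is swapped in for XGBoost regression, so this is not the authors' pipeline. The helper names (`leave_one_group_out`, `evaluate`, `TOY`) are illustrative only.

```python
import math
from statistics import mean

def centroid(vectors):
    """Element-wise mean of equal-length feature vectors."""
    return [mean(col) for col in zip(*vectors)]

def fit_nearest_centroid(train):
    """Stand-in for XGBoost (assumption): store mean feature vectors of treated and control mice."""
    treated = [x for _, x, label in train if label == 1]
    control = [x for _, x, label in train if label == 0]
    return centroid(treated), centroid(control)

def treatment_score(model, x):
    """Positive when x lies closer to the treated centroid than to the control centroid."""
    c_treated, c_control = model
    return math.dist(x, c_control) - math.dist(x, c_treated)

def leave_one_group_out(samples):
    """Yield (held_out, train, test), holding out one intervention group at a time."""
    for held_out in sorted({group for group, _, _ in samples}):
        train = [s for s in samples if s[0] != held_out]
        test = [s for s in samples if s[0] == held_out]
        yield held_out, train, test

def evaluate(samples):
    """Mean score gap (treated minus control) within each held-out intervention group."""
    gaps = {}
    for held_out, train, test in leave_one_group_out(samples):
        model = fit_nearest_centroid(train)
        treated = mean(treatment_score(model, x) for _, x, label in test if label == 1)
        control = mean(treatment_score(model, x) for _, x, label in test if label == 0)
        gaps[held_out] = treated - control  # > 0: the unseen intervention is still detected
    return gaps

# Hypothetical toy data: (intervention group, feature vector, label), label 1 = treated.
TOY = []
for i, group in enumerate("ABCDE"):
    shift = 0.1 * i
    TOY += [(group, [shift, 0.1], 0), (group, [0.1, shift], 0),
            (group, [1.0 + shift, 0.9], 1), (group, [0.9, 1.0 + shift], 1)]

gaps = evaluate(TOY)  # one gap per held-out intervention
```

The key design point is that the held-out group never contributes to the fitted model. Any positive gap therefore reflects features shared across interventions rather than memorized details of the test intervention.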

Cybercab Game Changers Tesla & Elon Kept Under Wraps

Questions to inspire discussion.

Design & Manufacturing Innovation.

🔧 Q: Why were conventional door locks added despite weight concerns? A: Conventional door locks on frameless doors were likely required for global market compliance. Positioning the locks closer to the pivot point limits the penalty, because mass near the hinge takes less work to move than the same mass at the door’s outer end.
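The lever-arm reasoning in the answer above can be checked with a short calculation. This minimal sketch assumes a door that swings upward about a horizontal hinge, with the lock treated as a point mass that starts level with the hinge. The source does not describe the hinge geometry, and the 1 kg mass, distances, and 60° opening angle are hypothetical.

```python
import math

G = 9.81  # standard gravity, m/s^2

def lift_work(mass_kg, distance_from_pivot_m, opening_angle_deg):
    """Work (J) to raise a point mass fixed to an upward-swinging door.

    Simplifying assumption: the mass starts level with the hinge axis, so opening
    the door by theta raises it by r * sin(theta). Work therefore grows linearly with r.
    """
    rise_m = distance_from_pivot_m * math.sin(math.radians(opening_angle_deg))
    return mass_kg * G * rise_m

# Hypothetical 1 kg lock assembly, door opened 60 degrees.
at_outer_end = lift_work(1.0, 1.0, 60.0)  # lock 1.0 m from the hinge
near_pivot = lift_work(1.0, 0.2, 60.0)    # same lock 0.2 m from the hinge
ratio = at_outer_end / near_pivot         # about 5x less work near the pivot
```

Under these assumptions, moving the same mass five times closer to the hinge cuts the lifting work by the same factor of five.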

Tire Optimization for Taxi Operations.

🛞 Q: What tire specifications optimize Cybercab for taxi service? A: 20–22 inch tires with thicker sidewalls provide a smoother ride at the lower speeds typical of taxi use and need changing less often. Their reduced rolling resistance also helps the vehicle reach an efficiency of 6–7 miles per kilowatt-hour.
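The efficiency figure in the answer above becomes easier to compare with other vehicles once it is converted to energy per mile. The sketch below is a minimal conversion. The 200-mile day of rides is a hypothetical workload, not a figure from the source.

```python
def wh_per_mile(miles_per_kwh):
    """Energy use per mile in watt-hours (1 kWh = 1000 Wh)."""
    return 1000.0 / miles_per_kwh

def energy_for_distance_kwh(miles, miles_per_kwh):
    """Battery energy in kWh needed to cover a distance at a given efficiency."""
    return miles / miles_per_kwh

# The 6-7 mi/kWh range from the text, applied to a hypothetical 200-mile day.
for eff in (6.0, 7.0):
    print(f"{eff} mi/kWh -> {wh_per_mile(eff):.1f} Wh/mi, "
          f"{energy_for_distance_kwh(200, eff):.1f} kWh per 200 miles")
```

Running it prints about 166.7 Wh/mi (33.3 kWh per 200 miles) at 6 mi/kWh and about 142.9 Wh/mi (28.6 kWh) at 7 mi/kWh.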

Accessibility Features.

Anthropic joins federal Genesis Mission on AI-driven research

Byte News Daily

AI, Autonomy, and Scale: Why Elon Musk’s Timeline Will Break Society

Questions to inspire discussion.

🎯 Q: How should retail investors approach AI and robotics opportunities? A: Focus on technology leaders such as Palantir, Tesla, and Nvidia, which demonstrate innovation through how quickly they introduce revolutionary, scalable products. Do not try to copy venture capital strategies that require $1M bets across 100 companies.

💼 Q: What venture capital strategy do elite firms use for AI investments? A: Elite VCs such as a16z (Andreessen Horowitz, co-founded by Marc Andreessen) invest $1M each in 100 companies. They expect 1–10 of them to become trillion-dollar successes whose returns make the portfolio as a whole profitable.

🛡️ Q: Which defense sector companies are disrupting established contractors? A: Companies like Anduril are disrupting the five prime contractors by introducing innovative technologies such as drones, which have become dominant in recent conflicts. The incumbents’ lack of innovation is what left the sector open to this disruption.

⚖️ Q: What mindset should investors maintain when evaluating AI opportunities? A: Be a judicious skeptic, balancing optimism with skepticism so that hype and marketing do not carry you away. This kind of skepticism is undervalued but crucial for informed investment decisions.
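The venture capital strategy described in the answers above rests on power-law arithmetic: a handful of outsized winners has to cover every loss in the portfolio. The sketch below illustrates that arithmetic with entirely hypothetical outcomes (90 write-offs, 9 companies that return the money, and 1 company that returns 200x). These numbers are illustrative assumptions, not a16z data.

```python
def portfolio_multiple(check_size, multiples):
    """Total value returned divided by total invested, for equal-sized checks."""
    invested = check_size * len(multiples)
    returned = sum(check_size * m for m in multiples)
    return returned / invested

# Hypothetical outcomes for 100 equal $1M checks (illustrative only).
with_winner = [0.0] * 90 + [1.0] * 9 + [200.0]
without_winner = [0.0] * 91 + [1.0] * 9  # the same fund, but the big winner fails

fund_with = portfolio_multiple(1_000_000, with_winner)        # about 2.09x overall
fund_without = portfolio_multiple(1_000_000, without_winner)  # about 0.09x: most capital lost
```

A single outlier decides whether the whole fund makes or loses money. That dependence is why this strategy needs many bets and is hard for an individual to copy.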

Tesla’s Competitive Advantages.

Why SpaceX Is Worth Trillions With Only $15B of Revenue

SpaceX’s valuation could reach $1.5 trillion on the strength of its innovative technologies, including reusable rockets, Starship, and Starlink. These could revolutionize the space industry and unlock massive growth opportunities in areas such as satellite connectivity, data centers, and computing.

Questions to inspire discussion.

Starship Production & Economics.

🚀 Q: What is SpaceX’s Starship production target and cost reduction goal? A: SpaceX plans to manufacture 1,000 Starships per year by 2030 (with aspirational goals of 10,000 per year), reducing launch costs to $10/kg through fully reusable vehicles achieving 99% reliability and 30 flights per booster.

🎯 Q: When will Starship begin commercial payload launches? A: Starship is still in its testing phase. It has demonstrated in-space engine relights, PEZ dispenser-style payload deployment, and large payload capacity. It is expected to reach commercial readiness as iterative flight testing pushes its reliability toward 99%.
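The cost target in the production answer above depends on spreading each vehicle’s build cost over many flights. The sketch below shows that amortization. All inputs are hypothetical placeholders, not SpaceX figures: a $90M vehicle, $2M of operating cost per flight, and a 100-tonne payload.

```python
def cost_per_kg(vehicle_cost, flights_per_vehicle, ops_cost_per_flight, payload_kg):
    """Launch cost per kilogram when the vehicle's build cost is spread over its flights."""
    amortized_build = vehicle_cost / flights_per_vehicle
    return (amortized_build + ops_cost_per_flight) / payload_kg

# Placeholder inputs (assumptions): $90M vehicle, $2M per-flight operations, 100 t payload.
expendable = cost_per_kg(90e6, 1, 2e6, 100_000)   # 920.0 $/kg
reused_30x = cost_per_kg(90e6, 30, 2e6, 100_000)  # 50.0 $/kg
```

With these placeholders, 30 flights per vehicle cut the cost per kilogram by more than an order of magnitude. Once reuse is high, the per-flight operating cost sets the floor ($20/kg here), so a target like $10/kg would also require cheaper operations, larger payloads, or both.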

Starlink V3 Revenue Model.

💰 Q: What revenue will Starlink V3 generate for SpaceX? A: According to modeling by Mach33 and ARK Invest, the Starlink V3 constellation could generate $250B in revenue at 50% profit margins. That would represent 90–95% of SpaceX’s revenue over the next five years.
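The percentages in the answer above imply two derived figures: the profit from that revenue, and the total SpaceX revenue that the 90–95% share points to. The sketch below computes both. It assumes that the $250B figure and the 90–95% share refer to the same five-year period, which the source does not state explicitly.

```python
def implied_figures(segment_revenue, margin, share_low, share_high):
    """Profit implied by a margin, and the total revenue implied by a share range."""
    profit = segment_revenue * margin
    # If the segment is `share` of total revenue, then total = segment / share.
    total_low = segment_revenue / share_high
    total_high = segment_revenue / share_low
    return profit, total_low, total_high

# Figures from the modeling cited above, in billions of US dollars.
profit, total_low, total_high = implied_figures(250.0, 0.50, 0.90, 0.95)
# profit is 125.0; implied total revenue is roughly 263.2 to 277.8
```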

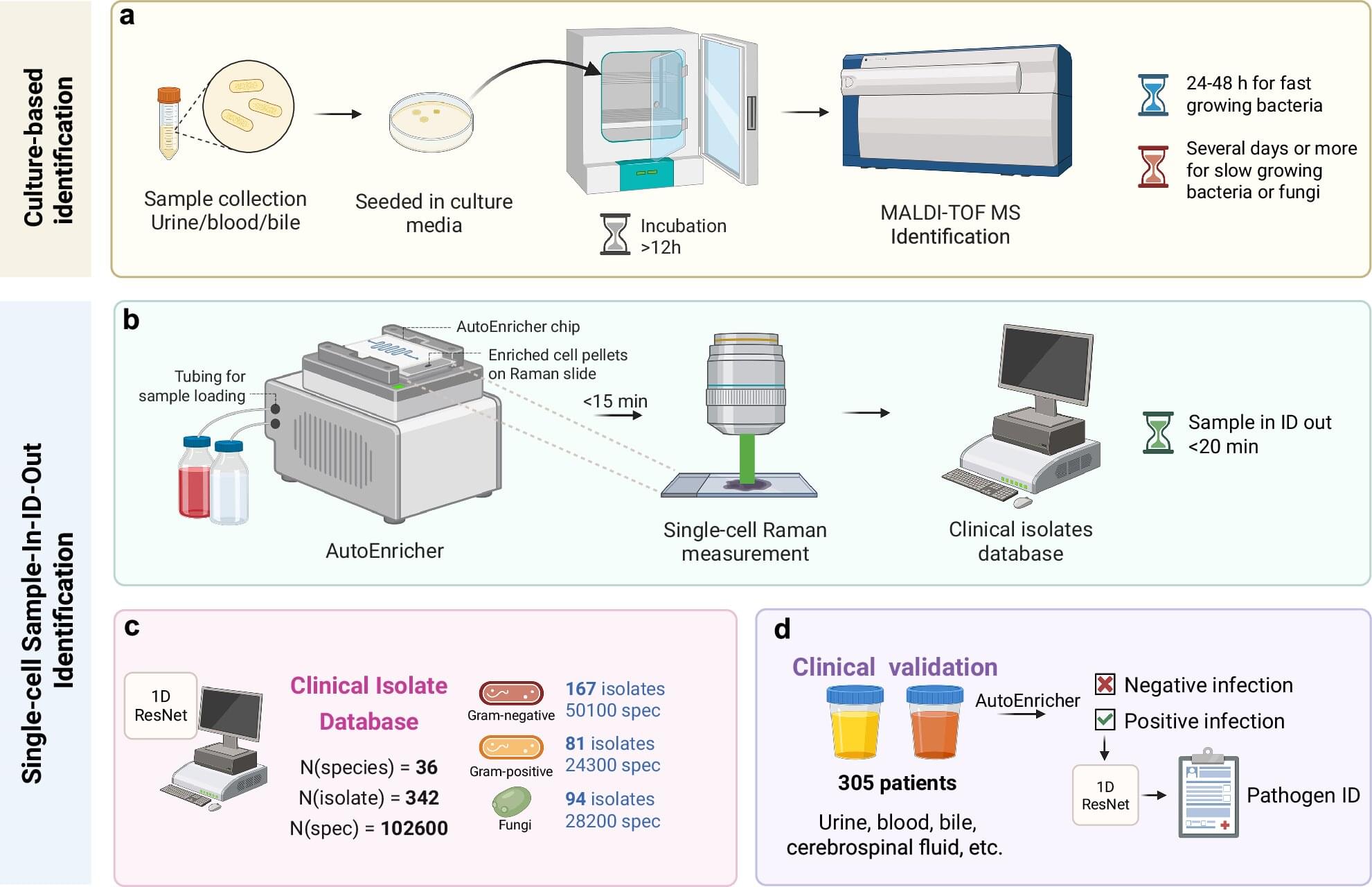

System can diagnose infections in 20 minutes, aiding fight against drug resistance

A new technique which slashes the time taken to diagnose microbial infections from days to minutes could help save lives and open up a new front in the battle against antibiotic resistance, researchers say.

Engineers and clinicians from the UK and China are behind the breakthrough system, called AutoEnricher. It combines microfluidic technology with Raman micro-spectroscopy and machine learning to enable the diagnosis of pathogens in just 20 minutes.

The team’s paper, titled “Rapid culture-free diagnosis of clinical pathogens via integrated microfluidic-Raman micro-spectroscopy,” is published in Nature Communications.

One image is all robots need to find their way

While the capabilities of robots have improved significantly over the past decades, they are not always able to reliably and safely move in unknown, dynamic and complex environments. To move in their surroundings, robots rely on algorithms that process data collected by sensors or cameras and plan future actions accordingly.

Researchers at Skolkovo Institute of Science and Technology (Skoltech) have developed SwarmDiffusion, a new lightweight generative AI model that can predict where a robot should go and how it should move from a single image. SwarmDiffusion, introduced in a paper pre-published on the arXiv preprint server, relies on a diffusion model. A diffusion model is trained by gradually adding noise to data and learning to remove it, so that at inference time it can refine random noise step by step into the desired output.
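The noising process behind diffusion models can be made concrete with the standard closed-form forward step used by DDPM-style models. This is a generic sketch, not SwarmDiffusion’s architecture. The linear schedule and its endpoints (1e-4 to 0.02) are common defaults assumed here. The reverse (denoising) step is omitted because it requires a trained network that predicts the added noise.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (an assumed DDPM-style schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bars(betas):
    """Cumulative product of (1 - beta_t): the fraction of signal variance left at step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def noisy_sample(x0, alpha_bar_t, rng):
    """Forward step in closed form: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    s, n = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [s * v + n * rng.gauss(0.0, 1.0) for v in x0]

betas = linear_betas(1000)
abar = alpha_bars(betas)
rng = random.Random(0)
x0 = [0.5, -1.0, 2.0]                                # hypothetical clean data point
slightly_noisy = noisy_sample(x0, abar[10], rng)     # still close to x0
almost_pure_noise = noisy_sample(x0, abar[-1], rng)  # signal nearly gone
```

During training, a network sees pairs like `(x_t, t)` and learns to predict the noise that was added. Generation then runs the process in reverse, starting from pure noise.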

“Navigation is more than ‘seeing’; a robot also needs to decide how to move, and this is where current systems still feel outdated,” Dzmitry Tsetserukou, senior author of the paper, told Tech Xplore.

Gmail’s new AI Inbox uses Gemini, but Google says it won’t train AI on user emails

Google says it’s rolling out a new feature called ‘AI Inbox,’ which summarizes all your emails, but the company promises it won’t train its models on your emails.

On Thursday, Google announced a new era of Gmail where Gemini will be taking over your default inbox screen.

Google argues that email has changed since Gmail launched in 2004. Users are now bombarded with hundreds of emails every week, and the volume keeps rising.