Spot is an agile mobile robot that you can customize for a wide range of applications. The base platform provides rough-terrain mobility, 360-degree obstacle avoidance, and various levels of navigation, remote control and autonomy. You can customize Spot by adding specialized sensors, software and other payloads. Early customers are already testing Spot to monitor construction sites, provide remote inspection at gas, oil and power installations, and support public safety. Spot is in mass production and currently shipping to select early adopters. Find out more about using Spot in your application by visiting us at https://www.BostonDynamics.com/Spot.

Can artificial intelligence help transform education?

For all the talk about how artificial intelligence could transform what happens in the classroom, AI hasn’t yet lived up to the hype.

AI involves creating computer systems that can perform tasks that typically require human intelligence. It’s already being experimented with to help automate grading, tailor lessons to students’ individual needs and assist English language learners. We heard about a few promising ideas at a conference I attended last week on artificial intelligence hosted by Teachers College, Columbia University. (Disclosure: The Hechinger Report is an independent unit of Teachers College.)

Shipeng Li, corporate vice president of iFLYTEK, talked about how the Chinese company is working to increase teachers’ efficiency by individualizing homework assignments. Class time can be spent on the problems that are tripping up the largest numbers of students, and young people can use their homework to focus on their particular weaknesses. Margaret Price, a principal design strategist with Microsoft, mentioned a PowerPoint plug-in that provides subtitles in students’ native languages – useful for a teacher leading a class filled with young people from many different places. Sandra Okita, an associate professor at Teachers College, talked about how AI could be used to detect over time why certain groups of learners are succeeding or failing.

NASA’s rolling Shapeshifter robot concept splits into two drones

When NASA’s Cassini performed more than 100 flybys of Saturn’s moon Titan, scientists piecing together the data began forming a picture of a pretty treacherous environment, with liquid methane rain, cold rivers and icy volcanoes all potentially part of the mix. The agency’s scientists are already at work developing vehicles that will one day be used to explore such surrounds, with its newly revealed Shapeshifter robot another interesting example.

The Shapeshifter is a developmental concept at this early stage, and is designed to change its shape depending on the type of alien terrain it encounters. The team at NASA’s Jet Propulsion Laboratory have 3D printed a prototype of the robot that is already capable of some impressive maneuvers.

NASA’s thinking with the Shapeshifter is that it will actually be made up of a number of smaller robots, which can self-assemble into a larger machine and disassemble again as the mission calls for it.

‘Lucky’ observation: Scientists watch a black hole shredding a star

A NASA satellite searching space for new planets gave astronomers an unexpected glimpse at a black hole ripping a star to shreds.

It is one of the most detailed looks yet at the phenomenon, called a tidal disruption event (or TDE), and the first for NASA’s Transiting Exoplanet Survey Satellite (more commonly called TESS).

The milestone was reached with the help of a worldwide network of robotic telescopes headquartered at The Ohio State University called ASAS-SN (All-Sky Automated Survey for Supernovae). Astronomers from the Carnegie Observatories, Ohio State and others published their findings today in The Astrophysical Journal.

Leonard Susskind: Quantum Mechanics, String Theory and Black Holes

Leonard Susskind is a professor of theoretical physics at Stanford University and founding director of the Stanford Institute for Theoretical Physics. He is widely regarded as one of the fathers of string theory and, more generally, as one of the greatest physicists of our time, both as a researcher and as an educator. This conversation is part of the Artificial Intelligence podcast.

INFO:

Podcast website:

https://lexfridman.com/ai

iTunes:

https://apple.co/2lwqZIr

Spotify:

https://spoti.fi/2nEwCF8

RSS:

https://lexfridman.com/category/ai/feed/

OUTLINE:

00:00 — Introduction

01:02 — Richard Feynman

02:09 — Visualization and intuition

06:45 — Ego in Science

09:27 — Academia

11:18 — Developing ideas

12:12 — Quantum computers

21:37 — Universe as an information processing system.

26:35 — Machine learning

29:47 — Predicting the future

30:48 — String theory

37:03 — Free will

39:26 — Arrow of time

46:39 — Universe as a computer

49:45 — Big bang

50:50 — Infinity

51:35 — First image of a black hole

54:08 — Questions within the reach of science.

55:55 — Questions out of reach of science.

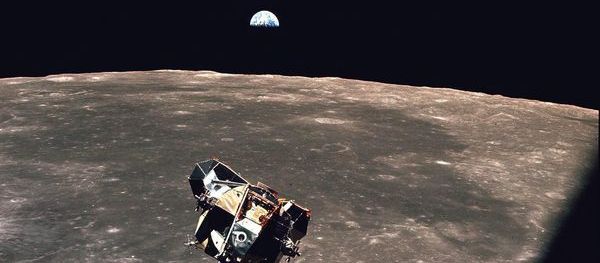

NASA Considers Robotic Lunar Pit Mission; Moon’s Subsurface Key To Long-Term Settlement

The Moon’s subsurface is the key to its long-term development and sustainability, says a NASA scientist.

A view of the Apollo 11 lunar module “Eagle” as it returned from the surface of the moon to dock with the command module “Columbia”. A smooth mare area is visible on the Moon below and a half-illuminated Earth hangs over the horizon. Command module pilot Michael Collins took this picture.

Chatbots Lead To 80% Sales Decline, Satisfied Customers And Fewer Employees

Recent surveys, studies, forecasts and other quantitative assessments of the progress of AI highlighted, among other findings, disagreements about the impact of chatbots: Do purchase rates go down when people find out they are interacting with a chatbot? Or do chatbots actually increase customer satisfaction and loyalty? And are chatbots already successful in replacing human workers?

AI Learns To Play Hide And Seek

OpenAI have taught their AI agents to play hide and seek to show how they can develop their own complex and intelligent behaviours 🤖.

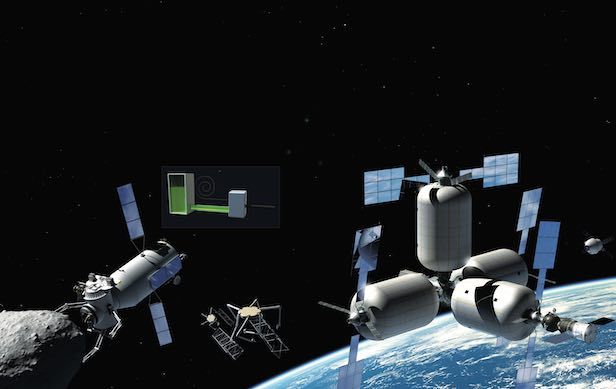

Future Tech: Spinning a Space Station

The ultimate way of building up space structures would be to use material sourced there, rather than launched from Earth. Once processed into finished composite material, the resin holds the carbon fibres together as a solid rather than a fabric. The beams can be used to construct more complex structures, antennae, or space station trusses. Image credit: All About Space/Adrian Mann.

The International Space Station is the largest structure in space so far. It has been painstakingly assembled from 32 launches over 19 years, and still only supports six crew in a little under a thousand cubic metres of pressurised space. It’s a long way from the giant rotating space stations some expected by 2001. The problem is that the rigid aluminium modules all have to be launched individually and assembled in space. Bigelow Aerospace will significantly improve on this with their inflatable modules that can be launched as a compressed bundle; but a British company has developed a system that could transform space flight by building structures directly in space.

Magna Parva from Leicester are a space engineering consultancy, founded in 2005 by Andy Bowyer and Miles Ashcroft. Their team have worked on a range of space hardware, from methods to keep Martian solar panels clear of dust, to ultrasonic propellant sensors, to spacecraft windows. But their latest project is capable of 3D printing complete structures in space, using a process called pultrusion. Raw carbon fibres and epoxy resin are combined in a robotic tool to create carbon composite beams of unlimited length – like a spider creating a web much larger than itself. Building structures in space has a range of compounding virtues: it is more compact than even inflatables, as only bulk fibre and resin need to be launched. Any assembled hardware that has to go through a rocket launch has to be made much stronger than needed in space to survive the launch; printed structures can be designed solely for their in-space application, using less material still.

Top 10 Artificial Intelligence (AI) Technologies In 2019

People around the world have already felt the effects of Artificial Intelligence (AI) technologies, and the growing use of the technology has proven to be a revolutionary advance in the current era of digitization. Knowledge reasoning, planning, machine learning, robotics, computer vision, and graphics are a few of the most common areas where AI has shown the potential to ease result-oriented operations. In addition, the most common devices, smartphones, are now available with high-end, AI-rich features and functionality. AI-enabled smartphones can learn a user's ongoing behavior and apply it on their own, offer improved security, and provide enhanced voice assistants. However, the reach of a technology as broad as Artificial Intelligence should not be limited to improved device features, process optimization and automation. Other fields predicted to benefit from the global embrace of AI are listed below.