Monstrous AI

Google CEO Sundar Pichai suggests that even the internet and electricity are small potatoes compared to AI.

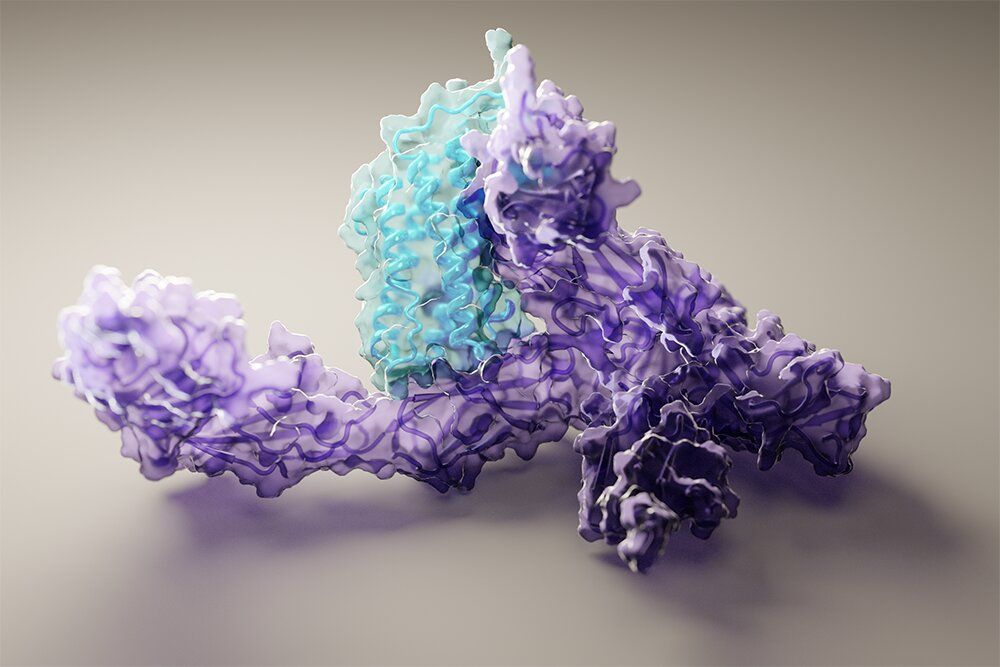

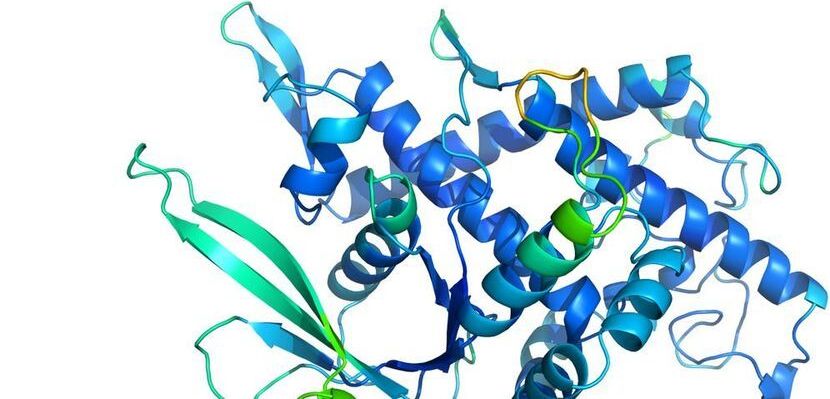

Scientists have waited months for access to highly accurate protein structure prediction since DeepMind presented remarkable progress in this area at the 2020 Critical Assessment of Structure Prediction, or CASP14, conference. The wait is now over.

Researchers at the Institute for Protein Design at the University of Washington School of Medicine in Seattle have largely recreated the performance achieved by DeepMind on this important task. These results will be published online by the journal Science on Thursday, July 15.

Unlike DeepMind's system, the UW Medicine team's method, which they dubbed RoseTTAFold, is freely available. Scientists from around the world are now using it to build protein models to accelerate their own research. Since July, the program has been downloaded from GitHub by over 140 independent research teams.

The Google Quantum AI team has found that adding physical qubits to a logical qubit on the company's quantum computer reduced the logical error rate exponentially. In their paper published in the journal Nature, the group describes its work with logical qubits as an error-correction technique and outlines what it has learned so far.

One of the hurdles standing in the way of usable quantum computers is figuring out how to either prevent errors from occurring or fix them before the affected qubits are used in a computation. On traditional computers, the problem is mostly solved by adding a parity bit. That approach will not work with quantum computers because of the different nature of qubits: attempts to measure them destroy the data they hold. Prior research has suggested that one possible solution is to group physical qubits into clusters called logical qubits. In this new effort, the Google Quantum AI team tested this idea on Google's Sycamore quantum computer.

Sycamore has 54 physical qubits. In their work, the researchers created logical qubits of different sizes, ranging from five to 21 qubits, to see how each would perform. In so doing, they found that adding qubits reduced error rates exponentially. They were able to measure the extra qubits in a way that did not collapse the encoded state, yet still provided enough information for the logical qubits to be used in computations.
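A classical analogue, the repetition code, gives a rough feel for why adding qubits can suppress errors exponentially. In this scheme, one bit is stored as d copies, each copy flips independently with probability p, and the bit is decoded by majority vote. This is a simplified sketch and not the noise model or decoder used in the Nature paper. The function name `logical_error_rate` and the value p = 0.01 are illustrative assumptions.

```python
from math import comb


def logical_error_rate(d: int, p: float) -> float:
    """Probability that majority voting over d copies returns the wrong bit.

    Illustrative classical model (an assumption, not the paper's method):
    d is odd, and each copy flips independently with probability p.
    Decoding fails only when more than half of the copies are flipped.
    """
    if d < 1 or d % 2 == 0:
        raise ValueError("d must be a positive odd integer")
    threshold = d // 2 + 1  # smallest number of flips that outvotes the correct copies
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(threshold, d + 1))


if __name__ == "__main__":
    p = 0.01  # hypothetical per-copy flip probability
    prev = None
    for d in (3, 5, 7, 9, 11):
        rate = logical_error_rate(d, p)
        # Report how much smaller the failure probability is than for the previous size.
        note = "" if prev is None else f"  ({prev / rate:.0f}x lower than d={d - 2})"
        print(f"d={d:2d}  logical error ~ {rate:.2e}{note}")
        prev = rate
```

Each extra pair of copies raises the minimum number of flips needed to fool the vote by one. When p is small, the failure probability therefore falls geometrically as d grows.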

Today, in a peer-reviewed paper published in the prestigious scientific journal Nature, DeepMind offered further details of exactly how its AI software was able to perform so well. It has also open-sourced the code it used to create AlphaFold 2 for other researchers to use.

But it’s still not clear when researchers and drug companies will have easy access to AlphaFold’s structure predictions.

Once studied by Charles Darwin, the Venus flytrap is perhaps the most famous plant that moves at high speed. But as Daniel Rayneau-Kirkhope explains, researchers are still unearthing new scientific insights into plant motion, which could lead to novel, bio-inspired robotic structures.

"In the absence of any other proof," Isaac Newton is said to have once proclaimed, "the thumb alone would convince me of God's existence." With 29 bones, 123 ligaments and 34 muscles pulling the strings, the human hand is indeed a feat of nature's engineering. It lets us write, touch, hold, feel and interact in exquisite detail with the world around us.

To replicate the wonders of the human hand, researchers in the field of “soft robotics” are trying to design artificial structures made from flexible, compliant materials that can be controlled and programmed by computers. Trouble is, the hand is such a complex structure that it needs lots of computing power to be properly controlled. That’s a problem when developing prosthetic hands for people who have lost an arm in, say, an accident or surgery.

Timestamps:

0:00 How the Rose lab more than doubled the lifespan of Drosophila.

17:20 Use of machine learning (ML) and multi-‘omics to characterize aging, and use of ML to develop interventions.

37:04 Adherence to an ancestral diet in Drosophila extends healthspan relative to their evolutionarily recent diet.

40:35 The importance of measuring objective markers of health to determine if one’s diet is best for them.

44:04 Does aging stop? Using biomarker testing to help decipher and optimize that.

53:33 The importance of characterizing aging for both Drosophila and its co-associated microbiome.

1:00:35 Why a massive, wide-scale, Manhattan Project-style approach to increasing human lifespan is necessary.

The “show” starts with a robot grabbing a handful of dough and depositing it on a pan, where another bot flattens it, a third applies tomato sauce, etc. From dough-grabbing to inserting in the oven, preparing a pizza takes just 45 seconds. The oven can bake 6 pizzas at a time, yielding about 80 pizzas per hour. Once a pizza is baked to gooey perfection, a robot slices it and places it in a box, and it’s then transferred (by a robot, of course) to a numbered cubby from which the customer can retrieve it.
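The throughput figures in this paragraph are consistent with each other. One pizza every 45 seconds works out to 80 pizzas per hour. As a rough sketch, assume the assembly line is the bottleneck and the oven stays full at 6 pizzas. Little's law ($L = \lambda W$) would then put the average time each pizza spends in the oven at about 4.5 minutes. The article does not state the actual bake time, so treat that figure as an inference, not a reported number.

$$
\lambda = \frac{3600\ \text{s/h}}{45\ \text{s/pizza}} = 80\ \text{pizzas/h},
\qquad
W = \frac{L}{\lambda} = \frac{6\ \text{pizzas}}{80\ \text{pizzas/h}} = 0.075\ \text{h} = 4.5\ \text{min}
$$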

It’s a shame the pizzeria didn’t open during the height of the pandemic, as its revenues likely would have gone through the roof given that there’s zero person-to-person contact required for you to get a fresh, custom-made pizza in your hands (and more importantly, your belly!).

Pazzi’s creators spent eight years researching and developing the pizza bots, and they say the hardest part was getting the bots to work effectively with the raw dough. Since it’s made with yeast, the dough is sensitive to changes in temperature, humidity, and other factors, and for optimal results it needs to be rolled out and baked with very precise timing.