In an era of diminished religious affiliation, faith leaders are turning to technology to help usher worshippers back into the flock.

For us at the OEC, promoting STEM education and artificial intelligence, as well as preparing students with future job skills, has been our focus for the past five years. We will not relent: we know that robots are coming not just to take over our jobs but to be our bosses, and many in Africa are not aware of this. OEC is therefore poised to change the narrative through talk shows, workshops, boot camps, seminars and more. The job is huge, but we say thank you to our wonderful partners, who have been there for us each time we call for support. These awards are clarion calls to do more, and we will continue to push to see that my dear continent does not lose out in the fourth industrial revolution powered by intelligent machines.

Over the past few years, the business world has increasingly turned towards intelligent solutions to help cope with the changing digital landscape. Artificial intelligence (AI) enables devices and things to perceive, reason and act intuitively—mimicking the human brain, without being hindered by human subjectivity, ego and routine interruptions. The technology has the potential to greatly expand our capabilities, bringing added speed, efficiency and precision for tasks both complex and mundane.

To get a picture of the momentum behind AI, consider that the global artificial intelligence market was valued at $62.35 billion in 2020 and is expected to expand at a compound annual growth rate (CAGR) of 40.2% from 2021 to 2028. Given this projection, it’s not surprising that tech giants such as AWS, IBM, Google and Qualcomm have all made significant investments in AI research, development, disparate impact testing and auditing.
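For readers who want to see what a 40.2% CAGR implies, here is a minimal Python sketch of the compounding arithmetic. The function name `project_value` is illustrative, and the number of compounding years is an assumption: the text gives a 2020 valuation and a 2021 to 2028 forecast window, so the sketch prints both a 7-year and an 8-year reading rather than picking one.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a base value forward at a constant annual growth rate."""
    return base * (1.0 + cagr) ** years


base_2020 = 62.35  # USD billions, the 2020 valuation quoted in the text
cagr = 0.402       # 40.2% compound annual growth rate quoted in the text

# Assumption: it is unclear whether 2021-2028 means 7 or 8 compounding
# periods from the 2020 base, so both readings are shown.
for years in (7, 8):
    projected = project_value(base_2020, cagr, years)
    print(f"{years} years of growth: ${projected:,.1f} billion")
```

Either reading puts the projected market more than an order of magnitude above its 2020 size, which is the scale of growth the investment figures are responding to.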

My area of coverage, fintech (financial technology), is no exception to this trend. The AI market for fintech alone is valued at an estimated $8 billion and is projected to reach upwards of $27 billion in the next five years. AI and machine learning (ML) have penetrated almost every facet of the space, from customer-facing functions to back-end processes. Let’s take a closer look at these changing dynamics.
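The fintech figures can be turned into an implied annual growth rate. The sketch below is a back-of-the-envelope calculation, not a figure from the source: it assumes exactly five annual compounding periods between the $8 billion and $27 billion estimates, and `implied_cagr` is an illustrative helper name.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Return the constant annual growth rate that takes start to end."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted in the text, in USD billions.
# Assumption: "the next five years" is treated as five compounding periods.
rate = implied_cagr(8.0, 27.0, 5)
print(f"Implied fintech AI CAGR: {rate:.1%}")  # roughly 27.5%
```

Under that assumption, the fintech AI market would need to grow by a little over a quarter each year to reach the projected size.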

Janet Adams, an expert in robotics and artificial intelligence, says superhuman robots are likely coming in the next couple of decades.

A team from the University of Zurich has trained an artificial intelligence system to fly a drone in a virtual environment full of obstacles before setting it loose in the real world, where it was able to weave around obstacles at 40 kph (25 mph), three times as fast as the previous best piloting software. Lead researcher Davide Scaramuzza, Director of the Robotics and Perception Group, says the work, carried out in partnership with Intel, could revolutionize robotics by enabling machines to learn virtually.

A paper describing the project, “Learning High-Speed Flight in the Wild,” was published this month in the journal Science Robotics.

“Our approach is a stepping stone toward the development of autonomous systems that can navigate at high speeds through previously unseen environments with only on-board sensing and computation,” the paper concludes.

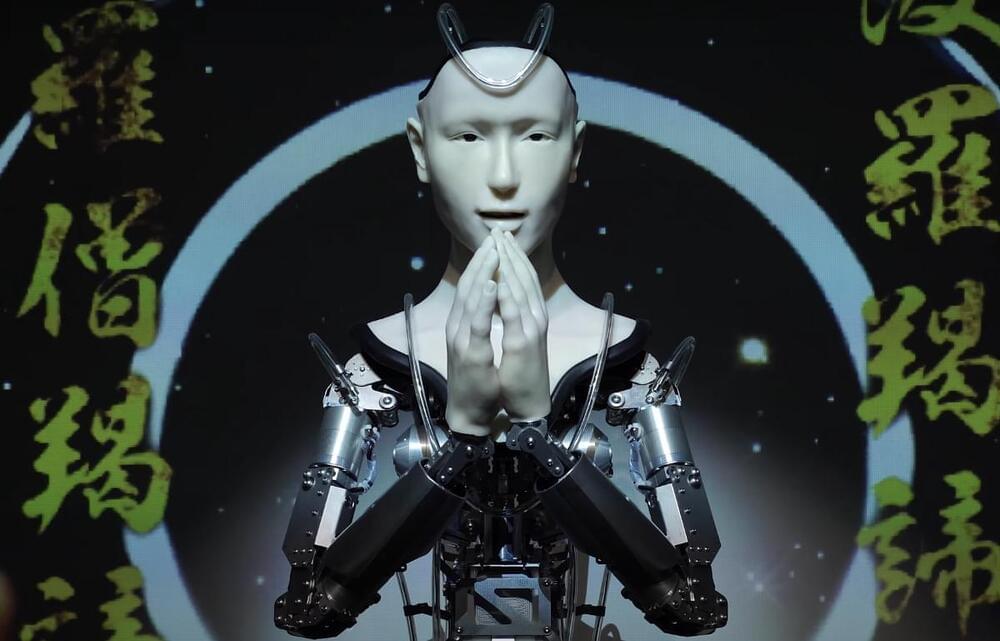

Recent advancements in biotechnology have immense potential to help address many global problems: climate change, an aging society, food security, energy security, and infectious diseases.

Biotechnology is not to be confused with the closely related field of biosciences. While the biosciences comprise all the sciences that study life and biological organisms, biotechnology applies that knowledge, together with other technologies, to develop technology and commercial products. Biotechnology is the application of innovation to biosciences in a bid to solve real-world medical problems.

Throw artificial intelligence into the mix and we suddenly have a really interesting pot of broth. Several AI trends have already proven beneficial to the development of biotechnology. Dr. Nathan S. Bryan, an inventor, biochemist and professor who made a name for himself as an innovator and pioneer in nitric oxide drug discovery, commercialization, and molecular medicine, offers his insights on these contributions.

Hey, dude, where’s my car?

That was the question on my mind when I walked out to the parking lot to get into my car and it was not there. Given that Halloween was just a few days away, I naturally suspected that perhaps a ghost had decided to take my car for a spin. Seems like those ghosts don’t get much of a chance to spirit away an everyday car.

I put aside the ghost theory and sought to find something more down-to-earth as an explanation for where my car was.

This particular parking lot was quite expansive and there wasn’t any numbering system associated with the parking spots. Thus, I had to remember where my car was supposed to be based entirely on my own mental “global positioning” brainware, absent any tangible and more reliable form of tracing.

Researchers report the human brain may use next word prediction to drive language processing.

Source: MIT

In the past few years, artificial intelligence models of language have become very good at certain tasks. Most notably, they excel at predicting the next word in a string of text; this technology helps search engines and texting apps predict the next word you are going to type.
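To make "predicting the next word" concrete, here is a deliberately tiny Python sketch. It is not the kind of neural language model the researchers studied: it uses a simple bigram count table, a much older and cruder technique, purely to illustrate the task. The toy corpus and the function names `train_bigrams` and `predict_next` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict, word: str):
    """Return the word seen most often after `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Toy corpus, invented for illustration only.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints "cat" (follows "the" twice, "mat" once)
```

Modern models replace the count table with a neural network that considers far more context than one preceding word, but the objective is the same: given the text so far, guess what comes next.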