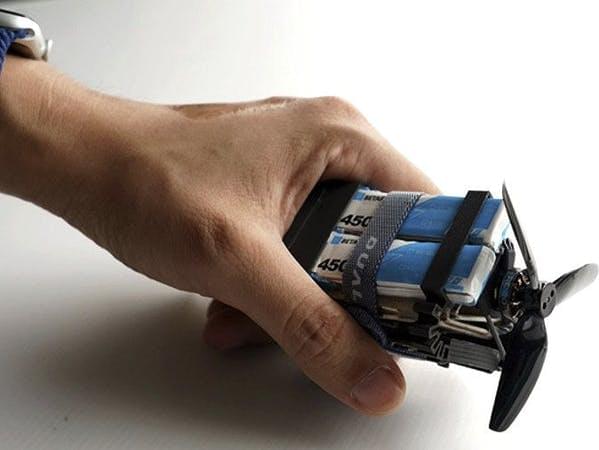

Driven by a SparkFun ESP32 MicroMod Processor, this laser-cut drone wraps up tight for ease of storage and transportation.

Universal Basic Income is soon going to become a necessity as robots become exponentially more capable of doing jobs that previously only humans could perform. This has become especially apparent with Elon Musk's new venture, Tesla's Optimus robot, which is slated to be shown off in 2022. Whether Elon Musk's predictions will come true remains to be seen, but it's clear that we will need some kind of passive income in the form of UBI.

In this video, I will show you how to use that knowledge to prepare for a world with Universal Basic Income, how to make more money than anyone else, and even how to double your income in the end.

–

TIMESTAMPS:

00:00 How Robots will change Society.

01:32 What is happening right now.

03:04 The Future of Employment.

05:05 Example of how this is happening.

06:47 The Negatives.

07:38 Last Words.

On Wednesday, November 10, at 9:03 p.m. EST (2:03 UTC on November 11), SpaceX and NASA launched Dragon's third long-duration operational crew mission (Crew-3) to the International Space Station from historic Launch Complex 39A (LC-39A) at NASA's Kennedy Space Center in Florida. Following stage separation, Falcon 9's first stage landed on the "A Shortfall of Gravitas" droneship.

On Thursday, November 11, at approximately 7:10 p.m. EST (00:10 UTC on November 12), Dragon will autonomously dock with the space station. Follow Dragon and the Crew-3 astronauts during their flight to the International Space Station at spacex.com/launches.

_____

Crew-3 Mission | Launch: https://youtu.be/WZvtrnFItNs

Crew-3 Mission | Coast: https://youtu.be/aknndyovSKs

Crew-3 Mission | Approach & Docking: https://youtu.be/dZ5YAPZ8i3s

A long-term fallout of the Covid crisis has been the rise of the contactless enterprise, in which customers, and likely employees, interact with systems to get what they need or request. This gives a more prominent role to artificial intelligence and machine learning, particularly conversational AI, which adds the intelligence needed to deliver a superior customer or employee experience.

Deloitte analysts recently examined patents in conversational AI to gauge where the technology and the market are heading, and found that the technology has been developing quickly. "Rapid adoption of conversational AI will likely be underpinned by innovations in the various steps of chatbot development that have the potential to hasten the creation and training of chatbots and enable them to efficiently handle complex requests — with a personal touch," the analyst team, led by Deloitte's Sherry Comes, observes.

Conversational AI is a ground-breaking application for AI, agrees Chris Hausler, director of data science for Zendesk. “Organizations saw a massive 81% increase in customer interactions with automated bots last year, and no doubt these will continue to be key to delivering great experiences.”

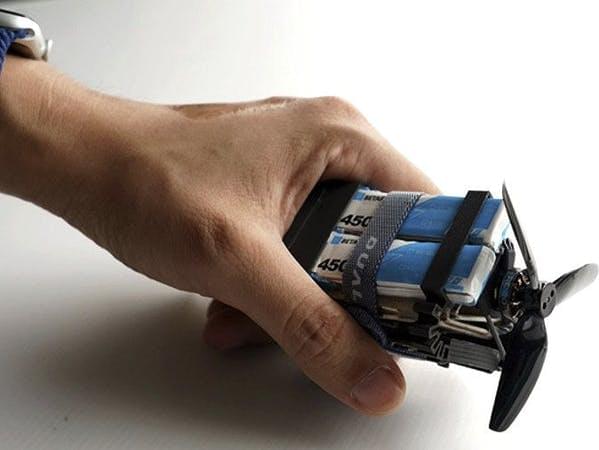

Princeton researchers have invented bubble casting, a new way to make soft robots using “fancy balloons” that change shape in predictable ways when inflated with air.

The new system involves injecting bubbles into a liquid polymer, letting the material solidify and inflating the resulting device to make it bend and move. The researchers used this approach to design and create hands that grip, a fishtail that flaps and slinky-like coils that retrieve a ball. They hope that their simple and versatile method, published Nov. 10 in the journal Nature, will accelerate the development of new types of soft robots.

Traditional rigid robots have multiple uses, such as in manufacturing cars. “But they will not be able to hold your hands and allow you to move somewhere without breaking your wrist,” said Pierre-Thomas Brun, an assistant professor of chemical and biological engineering and the lead researcher on the study. “They’re not naturally geared to interact with the soft stuff, like humans or tomatoes.”

As robots are introduced into a growing number of real-world settings, they need to cooperate effectively with human users. Beyond communicating with humans and assisting them with everyday tasks, it could therefore be useful for robots to determine autonomously whether their help is needed.

Researchers at Franklin & Marshall College have recently been developing computational tools that could enhance the performance of socially assistive robots by allowing them to process social cues given by humans and respond accordingly. In a paper pre-published on arXiv and presented at the AI-HRI symposium 2021 last week, they introduced a new technique that allows robots to autonomously detect when it is appropriate for them to step in and help users.

“I am interested in designing robots that help people with everyday tasks, such as cooking dinner, learning math, or assembling Ikea furniture,” Jason R. Wilson, one of the researchers who carried out the study, told TechXplore. “I’m not looking to replace people that help with these tasks. Instead, I want robots to be able to supplement human assistance, especially in cases where we do not have enough people to help.”

The virtual sphere of digital collaboration is growing.

And while the soon-to-be-defunct Facebook turns to Meta's metaverse in a bid to move its operations into the virtual world, Nvidia is expanding its Omniverse, a platform designed to enhance workflows in the new media environment, according to a pre-brief of the GTC 2021 event that IE attended.

While the scope and scale of Nvidia’s new suite of artificial intelligence, avatar interfaces, and supercomputing prowess were impressive, perhaps the most notable development is the firm’s new digital twin.

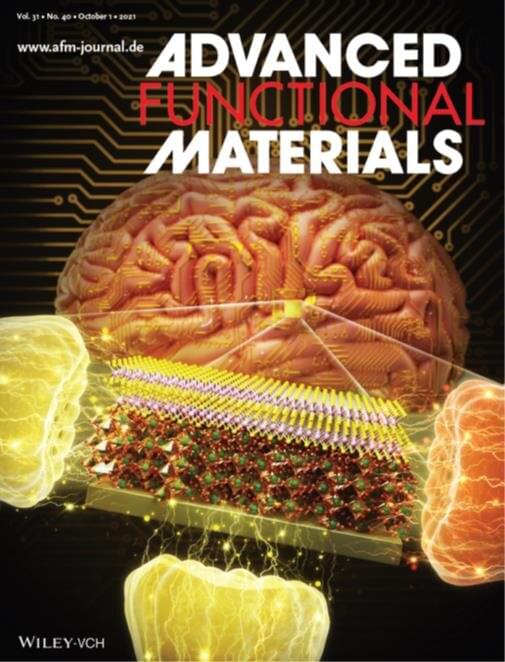

Researchers in Korea have developed, for the first time in the country, a core material for next-generation neuromorphic (neural-network-imitating) semiconductors. The work comes from a research team led by Dr. Jung-dae Kwon and Yong-hun Kim of the Department of Energy and Electronic Materials at the Korea Institute of Materials Science (KIMS), together with Professor Byungjin Cho's research team at Chungbuk National University. KIMS is a government-funded research institute under the Ministry of Science and ICT.

This new memtransistor concept uses a two-dimensional nanomaterial only a few nanometers thick. By reproducibly imitating the electrical plasticity of nerve synapses over more than 1,000 electrical stimulations, the researchers obtained a high pattern recognition rate of about 94.2% (98% of the simulation-based pattern recognition rate).

Devices based on molybdenum disulfide (MoS2), a widely used semiconductor material, work on the principle that defects in a single crystal are moved by an external electric field, which makes it difficult to precisely control the concentration or shape of the defects. To solve this problem, the research team sequentially stacked a niobium oxide (Nb2O5) layer and a molybdenum disulfide layer, producing an artificial synaptic device with a memtransistor structure that switches reliably under an external electric field. They also demonstrated that the resistance-switching characteristics can be freely controlled by changing the thickness of the niobium oxide layer, and that brain information related to memory and forgetting can be processed with a very low energy of 10 pJ (picojoules).

Neuroscience biweekly vol. 45, 27th October – 10th November.

The brain uses a shared mechanism for combining words from a single language and for combining words from two different languages, a team of neuroscientists has discovered. Its findings indicate that language switching is natural for those who are bilingual because the brain has a mechanism that does not detect that the language has switched, allowing for a seamless transition in comprehending more than one language at once.

“Our brains are capable of engaging in multiple languages,” explains Sarah Phillips, a New York University doctoral candidate and the lead author of the paper, which appears in the journal eNeuro. “Languages may differ in what sounds they use and how they organize words to form sentences. However, all languages involve the process of combining words to express complex thoughts.”

“Bilinguals show a fascinating version of this process — their brains readily combine words from different languages together, much like when combining words from the same language,” adds Liina Pylkkänen, a professor in NYU’s Department of Linguistics and Department of Psychology and the senior author of the paper.

Let’s take a look at a highly abstracted neuron. It’s like a tootsie roll, with a bulbous middle section flanked by two outward-reaching wrappers. One side is the input—an intricate tree that receives signals from a previous neuron. The other is the output, blasting signals to other neurons using bubble-like ships filled with chemicals, which in turn triggers an electrical response on the receiving end.

Here’s the crux: for this entire sequence to occur, the neuron has to “spike.” If, and only if, the neuron receives a high enough level of input—a nicely built-in noise reduction mechanism—the bulbous part will generate a spike that travels down the output channels to alert the next neuron.

But neurons don’t just use one spike to convey information. Rather, they spike in a time sequence. Think of it like Morse Code: the timing of when an electrical burst occurs carries a wealth of data. It’s the basis for neurons wiring up into circuits and hierarchies, allowing highly energy-efficient processing.