A research team from MIT, Columbia University, Harvard University and the University of Waterloo proposes a neural network that can solve university-level mathematics problems via program synthesis.

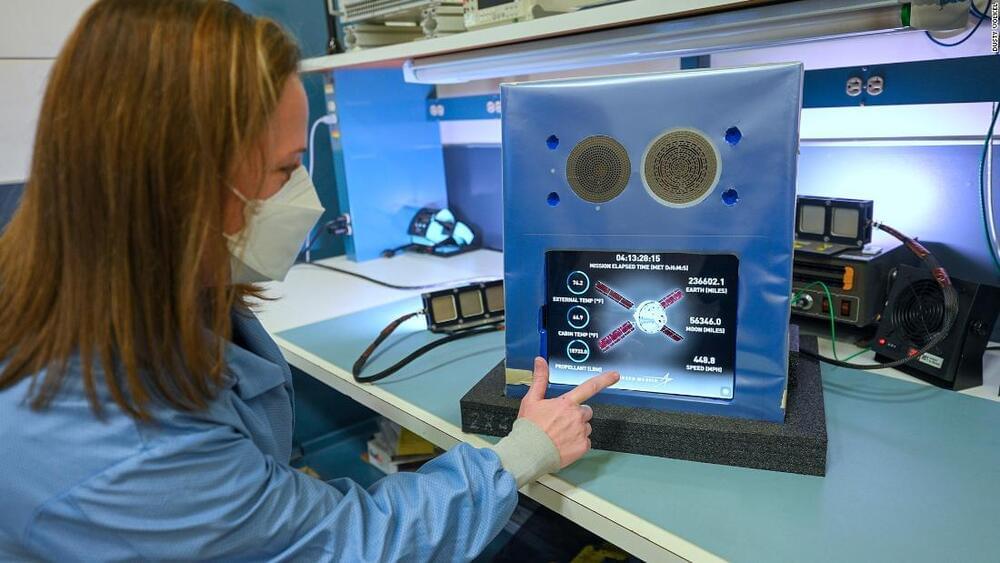

NASA’s Artemis 1 mission, slated to take off as soon as this March, aims to send an Orion spacecraft around the moon. The cabin will be largely empty, save for an interactive tablet dubbed “Callisto,” which will sit propped up facing an astronaut mannequin. Callisto is essentially a touch-screen device running reconfigured versions of Alexa, Amazon’s voice assistant, and WebEx, Cisco’s teleconferencing platform.

Among the many aspects that will be closely watched on the ground — at least by the group behind this Alexa experiment — is how the virtual assistant performs in space. And if nothing else, it’ll be some well-placed advertising.

It’s all part of a collaboration between Amazon (AMZN), Cisco (CSCO), and Lockheed Martin (LMT), which built the Orion capsule for NASA. Lockheed approached the other two companies with the idea of developing a virtual assistant about three years ago, the companies said, and they are paying the full cost of including the virtual assistant on the Artemis 1 mission. Lockheed is also reimbursing NASA for any help the agency has lent to this project through an arrangement called a Space Act Agreement, which allows the space agency to be compensated for expertise or resources it gives to companies working on certain space-related projects.

An evolution of its 2018 ‘Valkyrie’ hypersonic airliner concept.

If you work in an office, you know that the coffee machine is the favorite spot to hang out and chat. From the first cup of the day to keeping us awake through late-night meetings, that machine is a lifesaver. But for just one day, try not getting your coffee from the machine. Don’t skip coffee entirely; instead, go to a local coffee shop that doesn’t use coffee machines, or brew a flask at home. You will realize that hand-made coffee is inherently better than coffee made by a machine. The same goes beyond coffee: highly creative work, such as designing an outfit or writing a book, is considered best left to human creators, and many people doubt that machines could emulate it. But with the rise of artificial intelligence, this belief is steadily being challenged. Together, creativity and AI are transforming many spaces that were traditionally reserved for “artists.” In this article, we’ll explore these spaces and how AI is making a significant impact on them.

Creativity and AI Are Literally Changing the World

When you think “creative,” the first things that come to mind are music, poetry and novels. The best works in these three art forms have come from human imagination and innovation. Every significant advance in these fields has challenged traditional ways of creating art and revealed a new side of human creativity. For example, there was a time when classical music was considered the peak of musical art, but today hip-hop and K-pop, whose styles and structures are very different from classical music, are taking over the world.


The first-generation AI systems did not address these needs, which led to a low adoption rate. Second-generation AI systems, by contrast, focus on a single goal: improving patients’ clinical outcomes. Digital pills combine a personalised second-generation AI system with a branded or generic drug; by increasing adherence and overcoming the loss of response to chronic medications, they improve how patients respond to treatment. The aim is to make drugs more effective, thereby reducing healthcare costs and increasing end-user adoption.

There are many examples of partial or complete loss of response to chronic medications. Cancer drug resistance is a major obstacle to treating many malignancies; one-third of patients with epilepsy develop resistance to anti-epileptic drugs, and a similar proportion of patients with depression develop resistance to antidepressants. Besides loss of response, low adherence is a common problem in many noncommunicable diseases (NCDs): a little less than 50% of patients with severe asthma adhere to inhaled treatments, and 40% of patients with hypertension are non-adherent.

Second-generation systems aim to improve outcomes and reduce side effects. To overcome the biases introduced by big data, these systems apply an n = 1 concept, personalising the therapeutic regimen to a single patient. Focusing the algorithm on one individual improves the clinically meaningful outcome for that person. The personalised closed-loop system used by second-generation systems is designed to improve end-organ function and to overcome tolerance and loss of effectiveness.
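The article does not say how the closed loop works. As a rough illustration only, the Python sketch below shows one simple n = 1 feedback rule: a single patient’s dose is nudged up or down each cycle based solely on that patient’s own measured response, and it never leaves an approved range. The class `ClosedLoopRegimen`, the outcome score, the step size and the “reverse when the response worsens” rule are all hypothetical assumptions, not the developers’ algorithm.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ClosedLoopRegimen:
    """Hypothetical n = 1 controller: adjusts one patient's dose using only that patient's responses."""

    dose: float      # current dose (arbitrary units; assumption)
    min_dose: float  # lower bound of the approved dosing range (assumption)
    max_dose: float  # upper bound of the approved dosing range (assumption)
    step: float      # size of each dose adjustment (assumption)
    direction: int = 1  # +1 raises the next dose, -1 lowers it
    last_outcome: Optional[float] = None
    history: List[Tuple[float, float]] = field(default_factory=list)

    def update(self, outcome: float) -> float:
        """Record this cycle's outcome score (higher is better) and return the next dose."""
        if self.last_outcome is not None and outcome < self.last_outcome:
            # The response worsened after the last change, so reverse direction.
            self.direction = -self.direction
        self.last_outcome = outcome
        # Log the dose that produced this outcome.
        self.history.append((self.dose, outcome))
        proposed = self.dose + self.direction * self.step
        # Clamp so the regimen never leaves the approved range.
        self.dose = min(self.max_dose, max(self.min_dose, proposed))
        return self.dose


if __name__ == "__main__":
    regimen = ClosedLoopRegimen(dose=10.0, min_dose=5.0, max_dose=20.0, step=2.0)
    for score in [0.50, 0.60, 0.55, 0.70]:  # hypothetical per-cycle outcome scores
        print(regimen.update(score))
```

Fed outcome scores of 0.50, 0.60, 0.55 and 0.70, this sketch returns doses of 12.0, 14.0, 12.0 and 10.0: it keeps raising the dose while the response improves and reverses once it worsens. The rule deliberately uses no population data at all, which is the sense in which it is n = 1.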

Artificial intelligence drug design company Iktos and South Korean clinical research biotech Astrogen announced today a collaboration with the goal of discovering small-molecule pre-clinical drug candidates for a specific, undisclosed marker of Parkinson’s disease (PD).

Under the terms of the agreement, whose value was not disclosed, Iktos will apply its generative learning algorithms, which seek to identify new molecular structures with the potential to address the target in PD. Astrogen, which focuses on developing therapeutics for “intractable neurological diseases,” will provide in-vitro and in-vivo screening of lead compounds and pre-clinical compounds. While both companies will contribute to the identification of new small-molecule candidates, Astrogen will lead the drug development process from the pre-clinical stages.

“Our objective is to expedite drug discovery and achieve time and cost efficiencies for our global collaborators by using Iktos’s proprietary AI platform and know-how,” noted Yann Gaston-Mathé, president and CEO of Paris-based Iktos, in a press release. “We are confident that together we will be able to identify promising novel chemical matter for the treatment of intractable neurological diseases. Our strategy has always been to tackle challenging problems alongside our collaborators where we can demonstrate value generation for new and on-going drug discovery projects.”

The robot not only monitored the worker’s brain waves but also collected electrical signals from their muscles as the two worked seamlessly together to assemble a complex product, according to its developers at China Three Gorges University’s Intelligent Manufacturing Innovation Technology Centre.

The co-worker did not need to say or do anything when they needed a tool or a component, as the robot would recognise the intention almost instantly, picking up the object and putting it on the workstation, according to the developers.

Trained robot monitored co-worker’s brain waves and muscle signals to predict needs, China Three Gorges University team says in domestic peer-reviewed paper.

Tractors that steer themselves are nothing new to Minnesota farmer Doug Nimz. But four years ago, John Deere brought a whole new kind of machine to his 2,000-acre corn and soybean farm. That tractor could not only steer itself but also operate without a farmer in the cab.

It turns out the 44,000-pound machine was John Deere’s first fully autonomous tractor, and Nimz was one of the first people in the world to try it out. His farm served as a testing ground that allowed John Deere’s engineers to make continuous changes and improvements over the last few years. On Tuesday, the rest of the world got to see the finished tractor as the centerpiece of the company’s CES 2022 press conference.

“It takes a while to get comfortable because … first of all, you’re just kind of amazed just watching it,” said Nimz, who on a windy October afternoon described himself as “very, very interested” but also a “little suspicious” of autonomous technology before using John Deere’s machine on his farm. “When I actually saw it drive … I said, ‘Well, goll, this is really going to happen. This really will work.’”

These self-powered robots, which were inspired by water-walking insects, delivered chemicals while partially submerged in a solution.

An artificial intelligence (AI) system that can identify diabetic retinopathy (DR) without physician assistance has outperformed expectations in a clinical trial. The system can also identify the most serious form of DR, which puts patients at risk of blindness. The commercial system correctly detected the presence and severity of the disease in 97% of the eyes analysed. Deploying such AI systems in primary care facilities, for use by non-specialists, could significantly increase access to eye exams that include DR evaluation, aiding the diagnosis and treatment of the disease.

An artificial intelligence system that simplifies diabetic retinal screening could help save the vision of millions of people around the world.