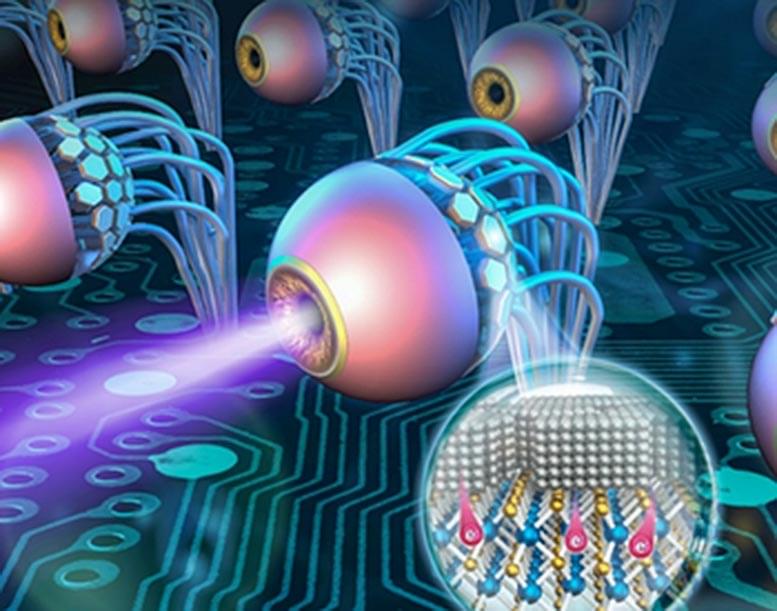

One application that interests the researchers is bone healing. The idea is that the soft material, powered by the electroactive polymer, will be able to maneuver into the spaces in complicated bone fractures and expand there. When the material hardens, it can form the basis for building new bone. In their study, the researchers demonstrate that the material can wrap itself around chicken bones, and that the artificial bone that later develops grows together with the animal’s bone. The biohybrid variable-stiffness actuators developed in the study can be used in soft (micro-)robotics and as potential tools for bone repair or bone tissue engineering.

“By controlling how the material turns, we can make the microrobot move in different ways, and also affect how the material unfurls in broken bones. We can embed these movements into the material’s structure, making complex programs for steering these robots unnecessary,” says Edwin Jager.