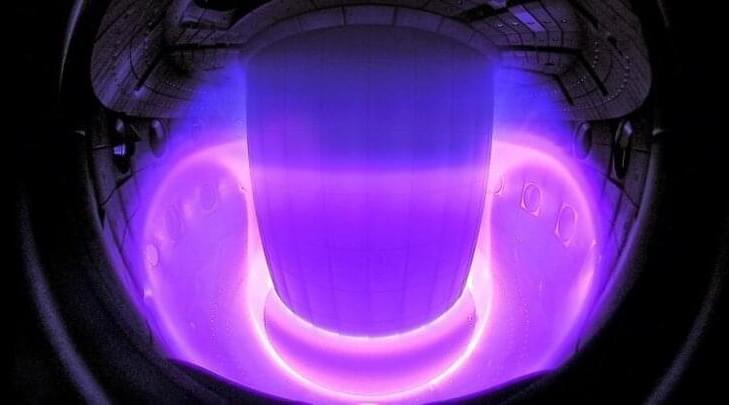

There are few places in the universe that invite as much curiosity—and terror—as the interior of a black hole. These extreme objects exert such a powerful gravitational pull that not even light can escape them, a feature that has left many properties of black holes unexplained.

Now, a team led by Enrico Rinaldi, a research scientist at the University of Michigan, has used quantum computing and deep learning to probe the bizarre innards of black holes under the framework of a mind-boggling idea called holographic duality. This idea posits that black holes, or even the universe itself, might be holograms.