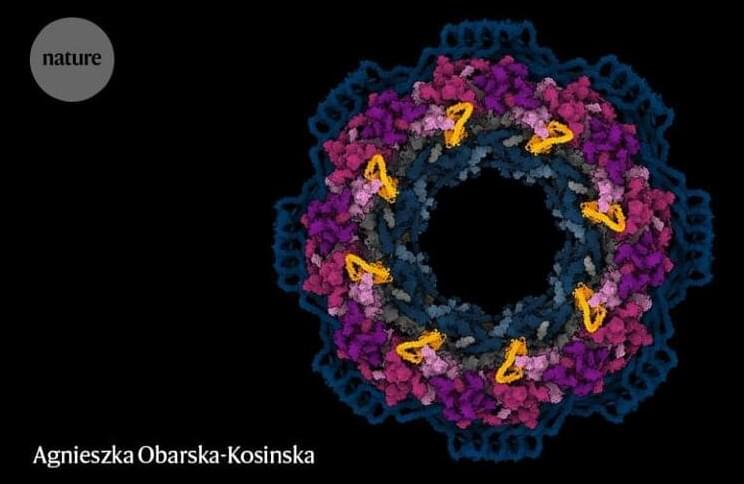

DeepMind software that can predict the 3D shape of proteins is already changing biology.

When Dr. Shiran Barber-Zucker joined the lab of Prof. Sarel Fleishman as a postdoctoral fellow, she chose to pursue an environmental dream: breaking down plastic waste into useful chemicals. Nature has clever ways of decomposing tough materials: Dead trees, for example, are recycled by white-rot fungi, whose enzymes degrade wood into nutrients that return to the soil. So why not coax the same enzymes into degrading man-made waste?

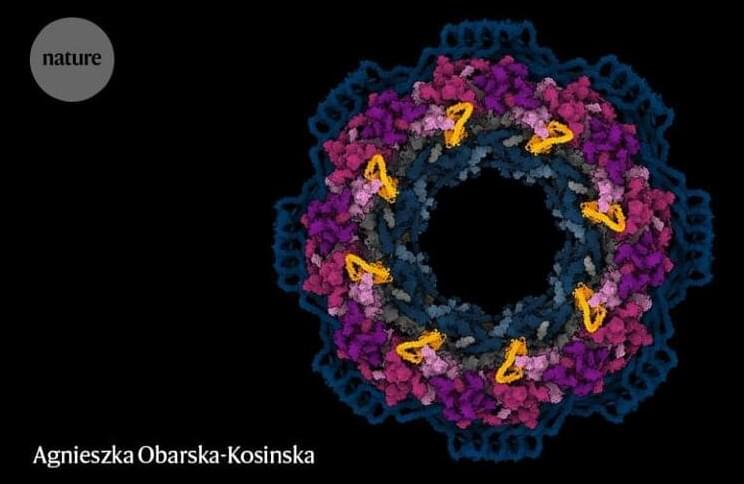

Barber-Zucker’s problem was that these enzymes, called versatile peroxidases, are notoriously unstable. “These natural enzymes are real prima donnas; they are extremely difficult to work with,” says Fleishman, of the Biomolecular Sciences Department at the Weizmann Institute of Science. Over the past few years, his lab has developed computational methods that are being used by thousands of research teams around the world to design enzymes and other proteins with enhanced stability and additional desired properties. For such methods to be applied, however, a protein’s precise molecular structure must be known. This typically means that the protein must be sufficiently stable to form crystals, which can be bombarded with X-rays to reveal their structure in 3D. This structure is then tweaked using the lab’s algorithms to design an improved protein that doesn’t exist in nature.

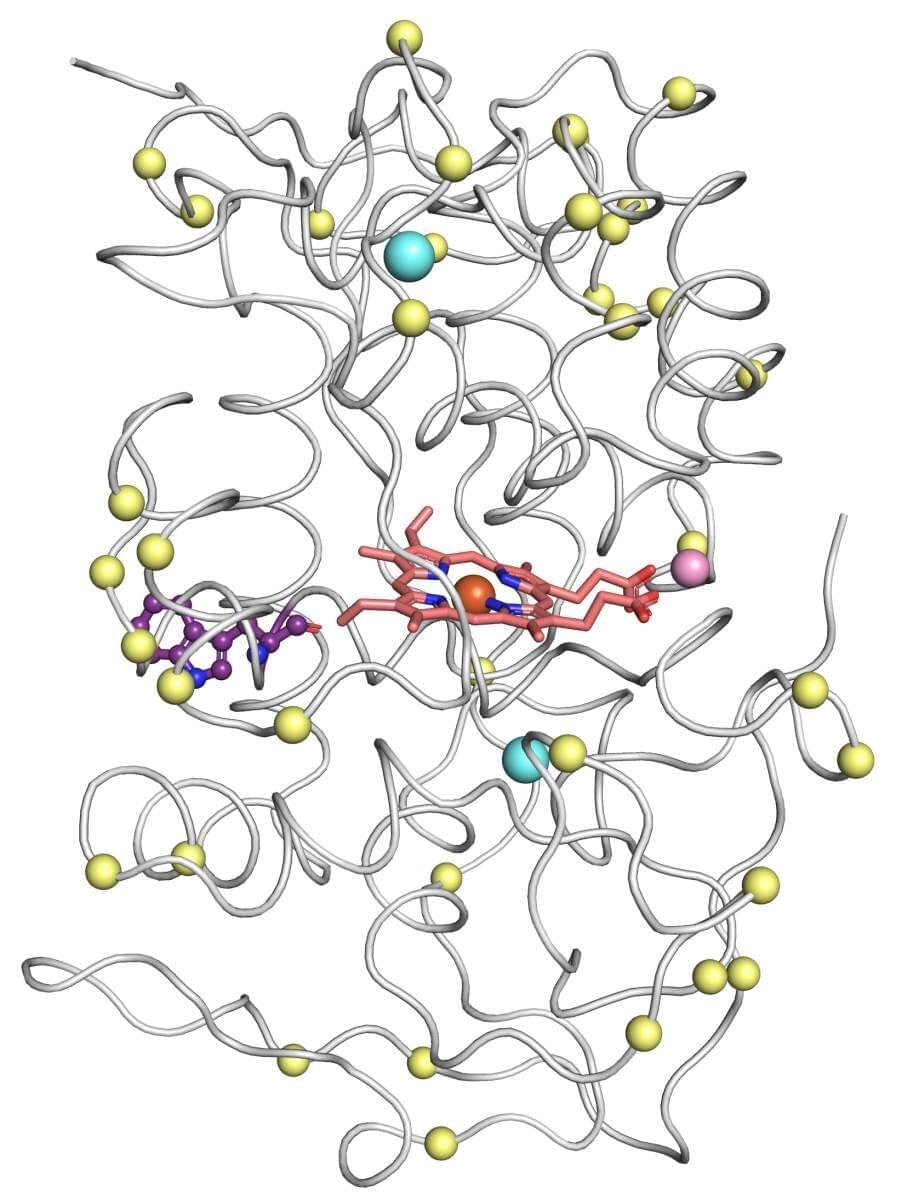

Perovskites are a family of materials that are currently the leading contender to replace today’s silicon-based solar photovoltaics. They hold the promise of panels that are far thinner and lighter, that could be made with ultra-high throughput at room temperature instead of at hundreds of degrees, and that are cheaper and easier to transport and install. But bringing these materials from controlled laboratory experiments into a product that can be manufactured competitively has been a long struggle.

Manufacturing perovskite-based solar cells involves optimizing at least a dozen variables at once, even within one particular manufacturing approach among many possibilities. But a new system based on a novel approach to machine learning could speed up the development of optimized production methods and help make the next generation of solar power a reality.

The system, developed by researchers at MIT and Stanford University over the last few years, makes it possible to integrate data from prior experiments, and information based on personal observations by experienced workers, into the machine learning process. This makes the outcomes more accurate and has already led to the manufacturing of perovskite cells with an energy conversion efficiency of 18.5 percent, a competitive level for today’s market.

What would aliens look like? We should not expect aliens to look like us; they might even be completely mechanical.

See my blog on BigThink.com.


We should not expect aliens to look anything like us. Creatures that resemble octopuses or birds or even robots are legitimate possibilities.

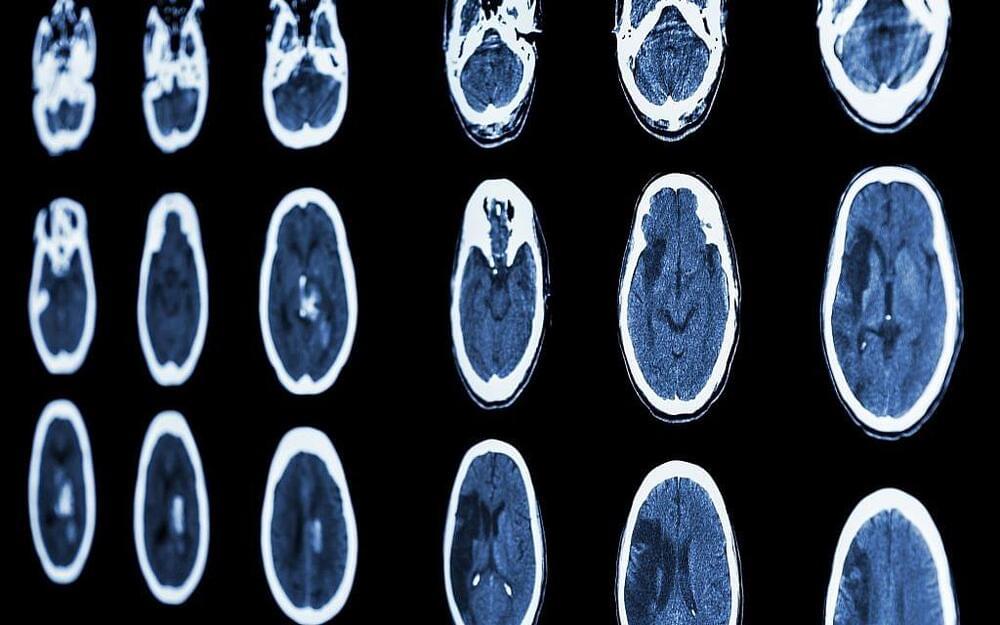

Sending miniature robots deep inside the human skull to treat brain disorders has long been the stuff of science fiction—but it could soon become reality, according to a California start-up.

Bionaut Labs plans its first clinical trials on humans in just two years for its tiny injectable robots, which can be carefully guided through the brain using magnets.

“The idea of the micro robot came about way before I was born,” said co-founder and CEO Michael Shpigelmacher.

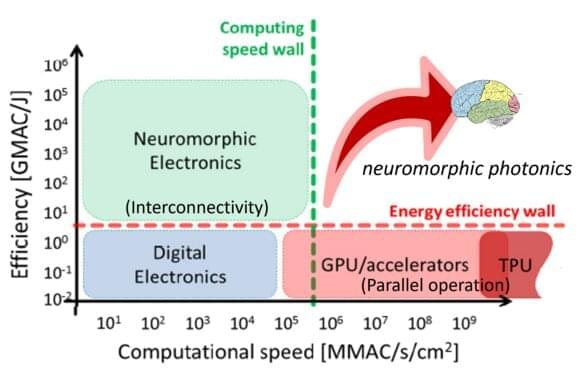

Supercomputers are extremely fast, but also use a lot of power. Neuromorphic computing, which takes our brain as a model to build fast and energy-efficient computers, can offer a viable and much-needed alternative. The technology has a wealth of opportunities, for example in autonomous driving, interpreting medical images, edge AI or long-haul optical communications. Electrical engineer Patty Stabile is a pioneer when it comes to exploring new brain- and biology-inspired computing paradigms. “TU/e combines all it takes to demonstrate the possibilities of photon-based neuromorphic computing for AI applications.”

Patty Stabile, an associate professor in the department of Electrical Engineering, was among the first to enter the emerging field of photonic neuromorphic computing.

“I had been working on a proposal to build photonic digital artificial neurons when in 2017 researchers from MIT published an article describing how they developed a small chip for carrying out the same algebraic operations, but in an analog way. That is when I realized that synapses based on analog technology were the way to go for running artificial intelligence, and I have been hooked on the subject ever since.”

Medical tech company Viz.ai, a developer of an AI-powered stroke detection and care platform, has pulled in a new investment of $100 million at a valuation of $1.2 billion, making it Israel’s newest unicorn (a private company valued at over $1 billion).

The company said Thursday that the Series D funding will be used to expand the Viz platform to detect and triage additional diseases and grow its customer base globally.

Viz.ai’s newest round was led by Tiger Global Management, a New York-based investment firm focused on software and financial tech, and Insight Partners, a VC and private equity firm also based in New York. Tiger Global has invested in Israeli companies such as cybersecurity companies Snyk and SentinelOne as well as payroll tech companies Papaya Global and HoneyBook. Insight Partners is a very active foreign investor in Israeli companies, with at least 76 local portfolio startups to its name including privacy startup PlainID, bee tech startup Beewise, and music tech startup JoyTunes.

We are already living in the future. Our everyday lives are influenced by robots in many ways, whether we’re at home or at work. Recent advances in artificial intelligence, open source algorithms, and cloud technology have created favorable conditions for a robot revolution, which is closer than you might expect.