Consciousness defines our existence. It is, in a sense, all we really have, all we really are. The nature of consciousness has been pondered in many ways, in many cultures, for many years. But we still can’t quite fathom it.

Consciousness is, some say, all-encompassing, comprising reality itself, the material world a mere illusion. Others say consciousness is the illusion, without any real sense of phenomenal experience, or conscious control. According to this view we are, as TH Huxley bleakly said, ‘merely helpless spectators, along for the ride’. Then, there are those who see the brain as a computer. Brain functions have historically been compared to contemporary information technologies, from the ancient Greek idea of memory as a ‘seal ring’ in wax, to telegraph switching circuits, holograms and computers. Neuroscientists, philosophers, and artificial intelligence (AI) proponents liken the brain to a complex computer of simple algorithmic neurons, connected by variable strength synapses. These processes may be suitable for non-conscious ‘auto-pilot’ functions, but can’t account for consciousness.

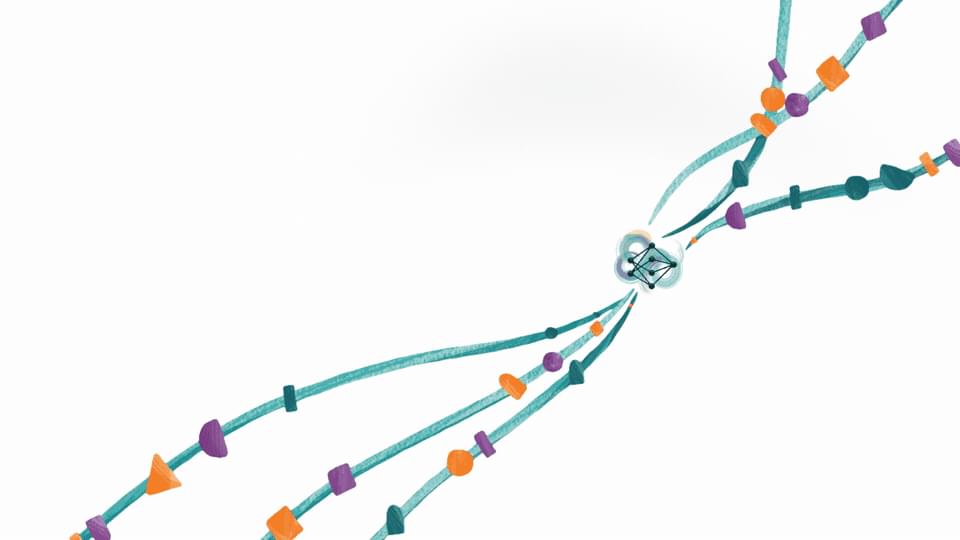

Finally, there are those who take consciousness as fundamental, as connected somehow to the fine-scale structure and physics of the universe. This includes, for example, Roger Penrose’s view that consciousness is linked to the Objective Reduction process (the ‘collapse of the quantum wavefunction’), an activity on the edge between the quantum and classical realms. Some see such connections to fundamental physics as spiritual, as a connection to others and to the universe; others see them as proof that consciousness is a fundamental feature of reality, one that developed long before life itself.