
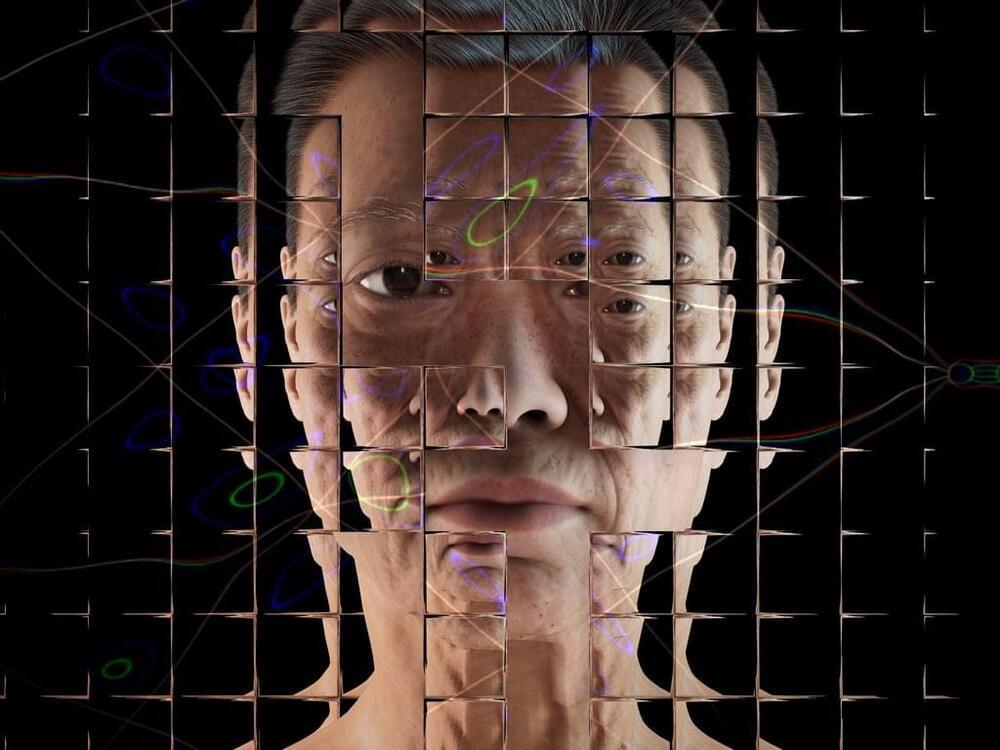

‘The Game is Over’: Google’s DeepMind says it is on verge of achieving human-level AI

Human-level artificial intelligence is close to finally being achieved, according to a lead researcher at Google’s DeepMind AI division.

Dr Nando de Freitas said “the game is over” in the decades-long quest to realise artificial general intelligence (AGI) after DeepMind unveiled an AI system capable of completing a wide range of complex tasks, from stacking blocks to writing poetry.

Described as a “generalist agent”, DeepMind’s new Gato AI needs only to be scaled up to create an AI capable of rivalling human intelligence, Dr de Freitas said.

You can practice for a job interview with Google AI

Never mind reading generic guides or practicing with friends: Google is betting that algorithms can get you ready for a job interview. The company has launched an Interview Warmup tool that uses AI to help you prepare for interviews across various roles. The site asks typical questions (such as the classic “tell me a bit about yourself”) and analyzes your spoken or typed responses to identify areas for improvement. It will tell you when you overuse certain words, for instance, or when you need to spend more time on a given subject.

Interview Warmup is aimed at Google Career Certificates users hoping to land work, and most of its role-specific questions reflect this. There are general interview questions, though, and Google plans to expand the tool to help more candidates. The feature is currently only available in the US.

AI has increasingly been used in recruitment. To date, though, it has mainly served companies during their selection process, not the potential new hires. This isn’t going to level the playing field, but it might help you brush up on your interview skills.