Category: robotics/AI

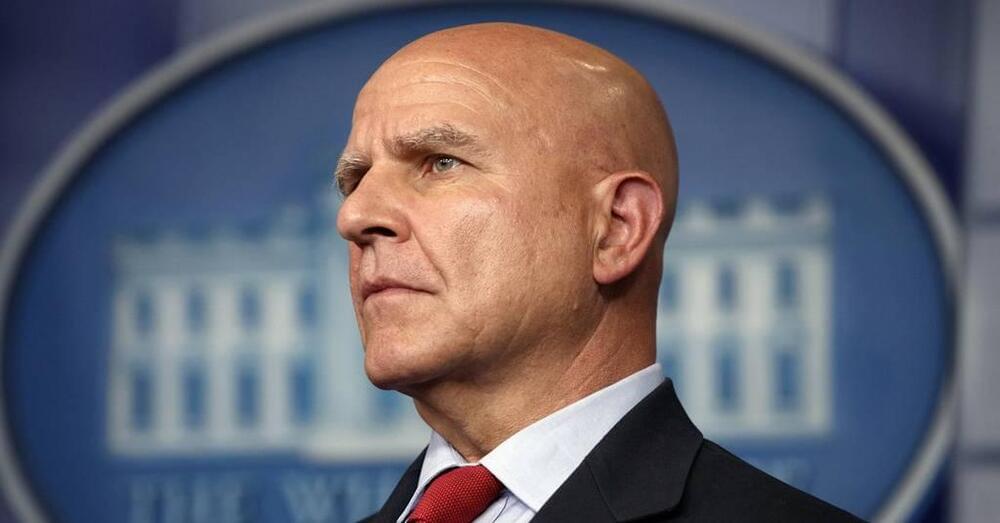

McMaster says AI can help beat adversaries, overcome ‘critical challenges’

WASHINGTON — Artificial intelligence and related digital tools can help warn of natural disasters, combat global warming and fast-track humanitarian aid, according to retired Army Lt. Gen. H.R. McMaster, a onetime Trump administration national security adviser.

It can also help preempt fights, highlight incoming attacks and expose weaknesses the world over, he said May 17 at the Nexus 22 symposium.

The U.S. must “identify aggression early to deter it,” McMaster told attendees of the daylong event focused on autonomy, AI and the defense policy that underpins it. “This applies to our inability to deter conflict in Ukraine, but also the need to deter conflict in other areas, like Taiwan. And, of course, we have to be able to respond to it quickly and to maintain situational understanding, identify patterns of adversary and enemy activity, and perhaps more importantly, to anticipate pattern breaks.”

CAPSTONE Launch to the Moon (Official NASA Broadcast)

Watch the launch from New Zealand of CAPSTONE, a new pathfinder CubeSat that will explore a unique orbit around the Moon!

The Cislunar Autonomous Positioning System Technology Operations and Navigation Experiment, or CAPSTONE, will be the first spacecraft to fly a near rectilinear halo orbit (NRHO) around the Moon, where the pull of gravity from Earth and the Moon interact to allow for a nearly-stable orbit. CAPSTONE’s test of this orbit will lead the way for our future Artemis lunar outpost called Gateway.

CAPSTONE is targeted to launch at 5:55 a.m. EDT (9:55 UTC) Tuesday, June 28 on Rocket Lab’s Electron rocket from the company’s Launch Complex 1 in New Zealand.

Messenger chatbots now used to steal Facebook accounts

A new phishing attack is using Facebook Messenger chatbots to impersonate the company’s support team and steal credentials used to manage Facebook pages.

Chatbots are programs that impersonate live support people and are commonly used to provide answers to simple questions or triage customer support cases before they are handed off to a live employee.

In a new campaign discovered by TrustWave, threat actors use chatbots to steal credentials for managers of Facebook pages, commonly used by companies to provide support or promote their services.

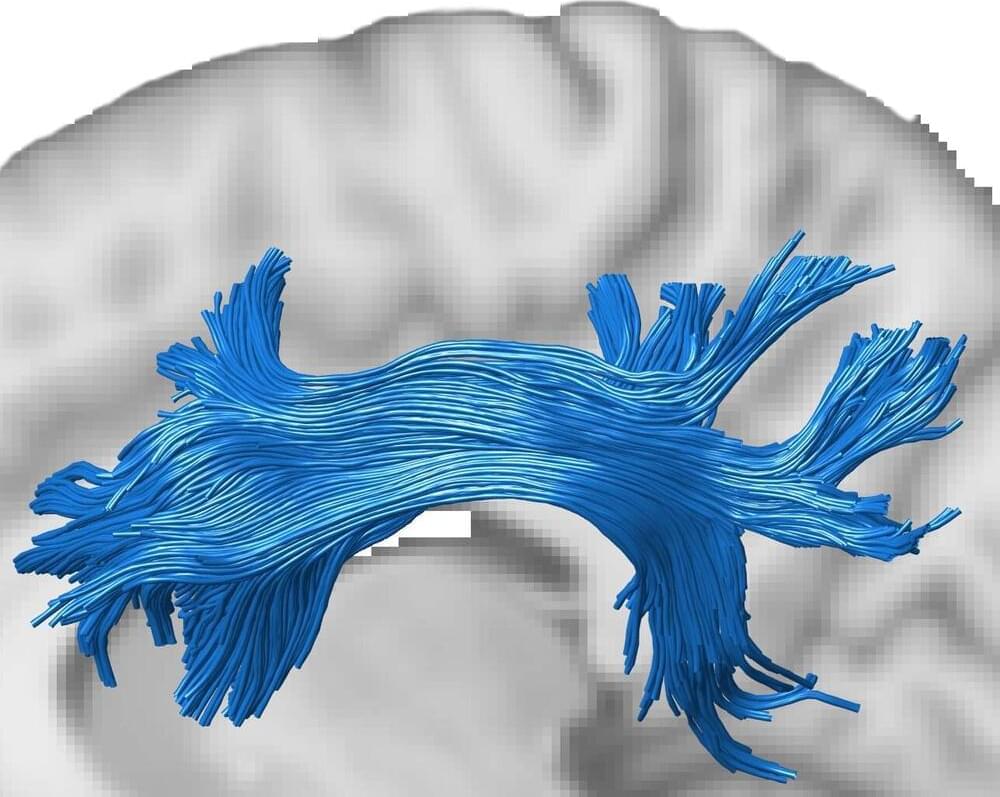

Researchers use GPUs to evaluate human brain connectivity

A new GPU-based machine learning algorithm developed by researchers at the Indian Institute of Science (IISc) can help scientists better understand and predict connectivity between different regions of the brain.

The algorithm, called Regularized, Accelerated, Linear Fascicle Evaluation, or ReAl-LiFE, can rapidly analyze the enormous amounts of data generated from diffusion Magnetic Resonance Imaging (dMRI) scans of the human brain. Using ReAl-LiFE, the team was able to evaluate dMRI data over 150 times faster than existing state-of-the-art algorithms.

“Tasks that previously took hours to days can be completed within seconds to minutes,” says Devarajan Sridharan, Associate Professor at the Centre for Neuroscience (CNS), IISc, and corresponding author of the study published in the journal Nature Computational Science.

An Autonomous Ship Used AI to Cross the Atlantic Without a Human Crew

The differences? The new Mayflower—logically dubbed the Mayflower 400—is a 50-foot-long trimaran (that’s a boat that has one main hull with a smaller hull attached on either side), can go up to 10 knots or 18.5 kilometers an hour, is powered by electric motors that run on solar energy (with diesel as a backup if needed), and required a crew of… zero.

That’s because the ship was navigated by an on-board AI. Like a self-driving car, the ship was tricked out with multiple cameras (6 of them) and sensors (45 of them) to feed the AI information about its surroundings and help it make wise navigation decisions, such as re-routing around spots with bad weather. There’s also onboard radar and GPS, as well as altitude and water-depth detectors.

The ship and its voyage were a collaboration between IBM and a marine research non-profit called ProMare. Engineers trained the Mayflower 400’s “AI Captain” on petabytes of data; according to an IBM overview about the ship, its decisions are based on if/then rules and machine learning models for pattern recognition, but also go beyond these standards. The algorithm “learns from the outcomes of its decisions, makes predictions about the future, manages risks, and refines its knowledge through experience.” It’s also able to integrate far more inputs in real time than a human is capable of.

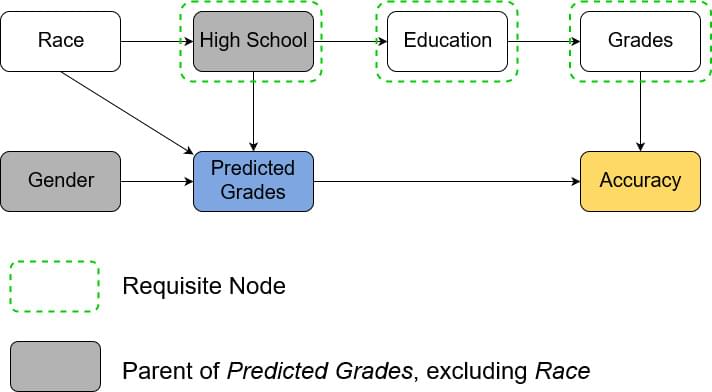

Spotting Unfair or Unsafe AI using Graphical Criteria

How to use causal influence diagrams to recognize the hidden incentives that shape an AI agent’s behavior.

There is rightfully a lot of concern about the fairness and safety of advanced Machine Learning systems. To attack the root of the problem, researchers can analyze the incentives posed by a learning algorithm using causal influence diagrams (CIDs). Among others, DeepMind Safety Research has written about their research on CIDs, and I have written before about how they can be used to avoid reward tampering. However, while there is some writing on the types of incentives that can be found using CIDs, I haven’t seen a succinct write-up of the graphical criteria used to identify such incentives. To fill this gap, this post will summarize the incentive concepts and their corresponding graphical criteria, which were originally defined in the paper Agent Incentives: A Causal Perspective.

A causal influence diagram is a directed acyclic graph where different types of nodes represent different elements of an optimization problem. Decision nodes represent values that an agent can influence, utility nodes represent the optimization objective, and structural nodes (also called chance nodes) represent the remaining variables such as the state. The arrows show how the nodes are causally related, with dotted arrows indicating the information that an agent uses to make a decision. Below is the CID of a Markov Decision Process, with decision nodes in blue and utility nodes in yellow:

The first model is trying to predict a high school student’s grades in order to evaluate their university application. The model uses the student’s high school and gender as input and outputs the predicted GPA. In the CID below we see that predicted grade is a decision node. As we train our model for accurate predictions, accuracy is the utility node. The remaining, structural nodes show how relevant facts about the world relate to each other. The arrows from gender and high school to predicted grade show that those are inputs to the model. For our example we assume that a student’s gender doesn’t affect their grade and so there is no arrow between them. On the other hand, a student’s high school is assumed to affect their education, which in turn affects their grade, which of course affects accuracy. The example assumes that a student’s race influences the high school they go to. Note that only high school and gender are known to the model.
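The grade-prediction diagram described above can be written down as a small graph structure. The following is a minimal sketch, not the authors' code: the node names, the helper functions, and the `predicted_grade -> accuracy` edge (implied by accuracy being the training objective, but not stated explicitly in the text) are assumptions made for illustration.

```python
from collections import deque

# Node types as described in the text: one decision node, one utility node,
# and structural nodes for everything else. Names are illustrative.
NODE_TYPES = {
    "race": "structural",
    "gender": "structural",
    "high_school": "structural",
    "education": "structural",
    "grade": "structural",
    "predicted_grade": "decision",
    "accuracy": "utility",
}

# Directed edges as (parent, child) pairs.
EDGES = [
    ("race", "high_school"),
    ("high_school", "education"),
    ("education", "grade"),
    ("grade", "accuracy"),
    ("gender", "predicted_grade"),       # information link (model input)
    ("high_school", "predicted_grade"),  # information link (model input)
    ("predicted_grade", "accuracy"),     # assumption: implied, not stated in the text
]


def parents(node):
    """Return the sorted list of direct parents of a node."""
    return sorted(p for p, c in EDGES if c == node)


def children(node):
    """Return the sorted list of direct children of a node."""
    return sorted(c for p, c in EDGES if p == node)


def is_acyclic():
    """Kahn's algorithm: a graph is a DAG iff all nodes can be topologically ordered."""
    indegree = {n: 0 for n in NODE_TYPES}
    for _, child in EDGES:
        indegree[child] += 1
    queue = deque(n for n, d in indegree.items() if d == 0)
    visited = 0
    while queue:
        node = queue.popleft()
        visited += 1
        for child in children(node):
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)
    return visited == len(NODE_TYPES)


def has_directed_path(src, dst):
    """Depth-first search for a directed path from src to dst."""
    stack, seen = [src], set()
    while stack:
        node = stack.pop()
        if node == dst:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(children(node))
    return False


def information_links():
    """Map each decision node to the nodes it observes (its parents)."""
    return {n: parents(n) for n, t in NODE_TYPES.items() if t == "decision"}


if __name__ == "__main__":
    print("acyclic:", is_acyclic())
    print("information links:", information_links())
    print("gender -> grade path:", has_directed_path("gender", "grade"))
    print("race -> accuracy path:", has_directed_path("race", "accuracy"))
```

With this encoding, `information_links()` lists only gender and high school as inputs to the decision, and `has_directed_path("gender", "grade")` is false, matching the stated assumption that a student's gender does not affect their grade.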