As demonstrated by breakthroughs in various fields of artificial intelligence (AI), such as image processing, smart health care, self-driving vehicles and smart cities, this is undoubtedly the golden period of deep learning. In the next decade or so, AI and computing systems are expected to gain the ability to learn and think the way humans do: processing a continuous flow of information and interacting with the real world.

However, current AI models suffer a loss in performance when they are trained consecutively on new information. Each time a model is trained on new data, the parameters it learned from earlier data are overwritten, erasing previously acquired knowledge. This effect is known as "catastrophic forgetting." The underlying difficulty is the stability-plasticity dilemma: the model must update its memory to keep adapting to new information (plasticity) while preserving the knowledge it already has (stability). This problem prevents state-of-the-art AI from learning continually from real-world information.
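To make the overwriting effect concrete, here is a minimal Python sketch; it is an illustration under stated assumptions, not the method described in this article. A toy model `y = w * x` with a single shared weight is trained by gradient descent on two hypothetical tasks in sequence: task A (`y = 2x`) and task B (`y = -x`). The tasks, the learning rate and the penalty strength `lam` are all illustrative choices. Training on B pulls the shared weight away from A's solution; an optional quadratic penalty that anchors `w` to its old value (a simplified, uniformly weighted relative of elastic weight consolidation) shows the stability-plasticity trade-off.

```python
# Toy demonstration of catastrophic forgetting with a single shared weight.
# Tasks, learning rate and penalty strength are illustrative assumptions.

def train(w, data, lr=0.05, epochs=200, anchor=None, lam=0.0):
    """Full-batch gradient descent on mean squared error for the model y = w * x.

    If `anchor` is given, the penalty lam * (w - anchor) ** 2 is added to the loss,
    pulling w back toward a previously learned value (a simplified,
    uniformly weighted stand-in for elastic weight consolidation)."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        if anchor is not None:
            grad += 2 * lam * (w - anchor)
        w -= lr * grad
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on `data`."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


xs = [0.5, 1.0, 1.5, 2.0]
task_a = [(x, 2.0 * x) for x in xs]   # hypothetical task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # hypothetical task B: y = -x

# 1. Learn task A from scratch.
w_a = train(0.0, task_a)

# 2. Keep training the same weight on task B only: A's solution is overwritten.
w_b = train(w_a, task_b)

# 3. Train on B again from w_a, but penalize drifting away from w_a.
w_reg = train(w_a, task_b, anchor=w_a, lam=1.875)

for name, w in [("after A", w_a), ("after B", w_b), ("after B + penalty", w_reg)]:
    print(f"{name:>18}: w={w:+.3f}  loss A={mse(w, task_a):.3f}  loss B={mse(w, task_b):.3f}")
```

With these settings, plain sequential training ends near `w = -1`: it fits task B, but the error on task A climbs from about 0 to about 16.9. With `lam = 1.875` the weight settles at `w = 0.5`, splitting the error evenly between the two tasks. A larger `lam` favors stability (remembering A); a smaller one favors plasticity (fitting B).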

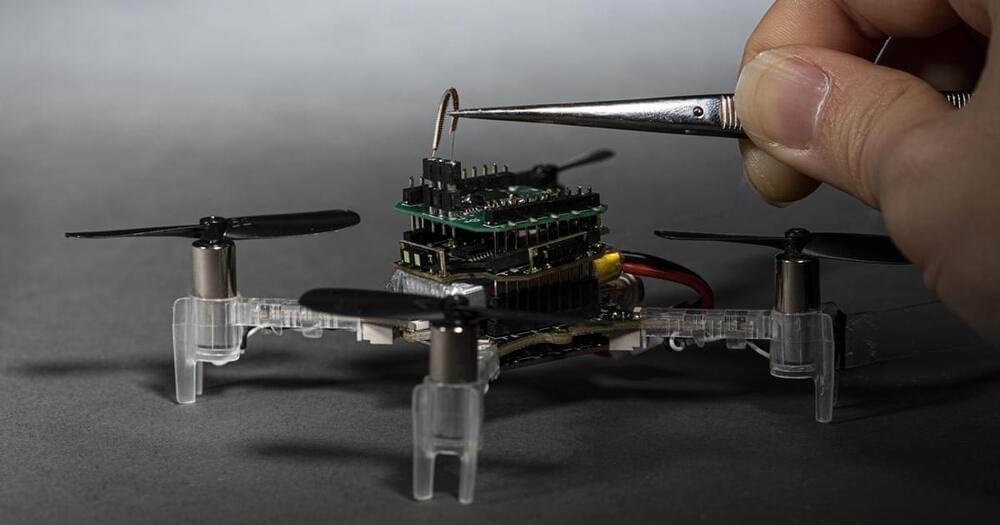

Edge computing systems move computation from cloud servers and data centers to locations near the data source, such as Internet of Things (IoT) devices. Although many continual learning models have been proposed to address catastrophic forgetting, applying them efficiently on resource-limited edge computing systems remains a challenge, because traditional models require high computing power and large memory capacity.