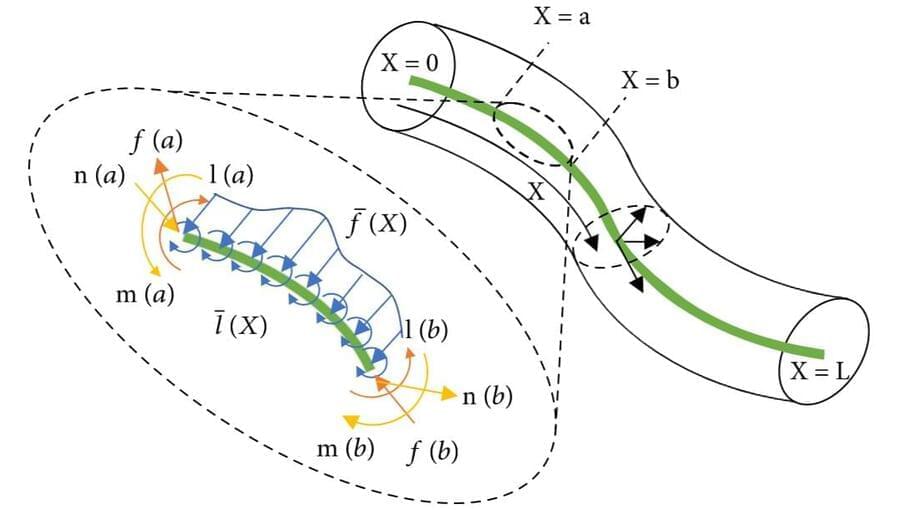

A review paper by scientists at Zhejiang University summarizes the development of continuum robots in terms of design, actuation, modeling and control. The paper, published on Jul. 26 in the journal Cyborg and Bionic Systems, gives an overview of both classic and advanced continuum-robot technologies and outlines several open problems that urgently need to be solved.

“Some small-scale continuum robots with new actuation methods are being widely investigated for interventional surgical treatment and endoscopy; however, characterizing their mechanical properties is still a difficult problem,” explained study author Haojian Lu, a professor at Zhejiang University.

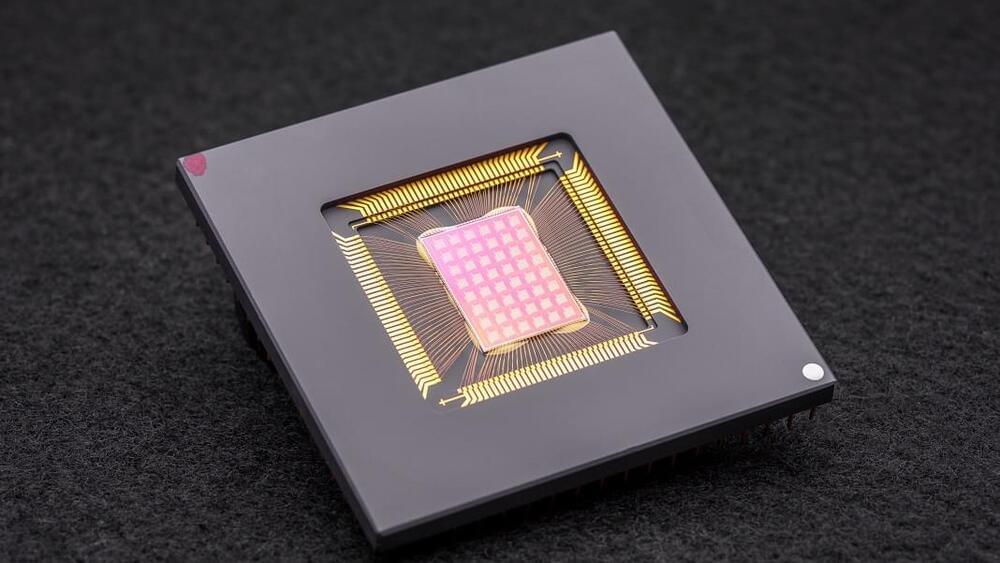

To miniaturize continuum robots, researchers have developed many cutting-edge materials for actuating them, each with its own advantages. Continuum robots that are embedded with micromagnets or made of ferromagnetic composite materials can be steered accurately by an external, controllable magnetic field. Magnetically soft continuum robots, on the other hand, can reach diameters down to the micron scale, which allows them to deliver targeted therapy in the bronchi or in cerebral vessels.