
We’ve all heard the arguments: “AI will supercharge the economy!” versus “No, AI is going to steal all our jobs!” The reality lies somewhere in between. Generative AI is a powerful tool that will boost productivity, but it won’t trigger mass unemployment overnight, and it certainly isn’t Skynet (if you know, you know). The International Monetary Fund (IMF) estimates that “AI will affect almost 40% of jobs around the world, replacing some and complementing others”. In practice, that means a large portion of workers will see some tasks automated by AI without necessarily losing their entire job. Even jobs heavily exposed to AI still require human input and oversight: AI might draft a report, but you’ll still need someone to fine-tune the ideas and make the decisions.

From an economic perspective, AI will undoubtedly be a game changer. Nobel laureate Michael Spence wrote in September 2024 that AI “has the potential not only to reverse the downward productivity trend, but over time to produce a major sustained surge in productivity.” In other words, AI could usher in a new era of faster growth by enabling more output from the same labour and capital. Crucially, AI often works best in collaboration with existing worker skillsets: in most industries it has the potential to handle repetitive or time-consuming work (like basic coding or form-filling), letting people concentrate on higher-value tasks. In short, AI can raise output per worker without making workers redundant en masse. This, in turn, has the potential to raise GDP over time. If that happens in a non-inflationary environment, GDP growth could, for example, outpace the growth in US debt.

Some jobs will benefit more than others. Knowledge workers who harness AI (for example, an analyst using AI to sift data) can become far more productive and valuable. New roles, such as AI auditors and prompt engineers, are already emerging. Conversely, jobs heavy on routine information processing are already under pressure. The job of a translator is often cited as the most at risk: today’s AI can already handle around 98% of a translator’s typical tasks, and is gradually conquering more technically challenging real-time translation.

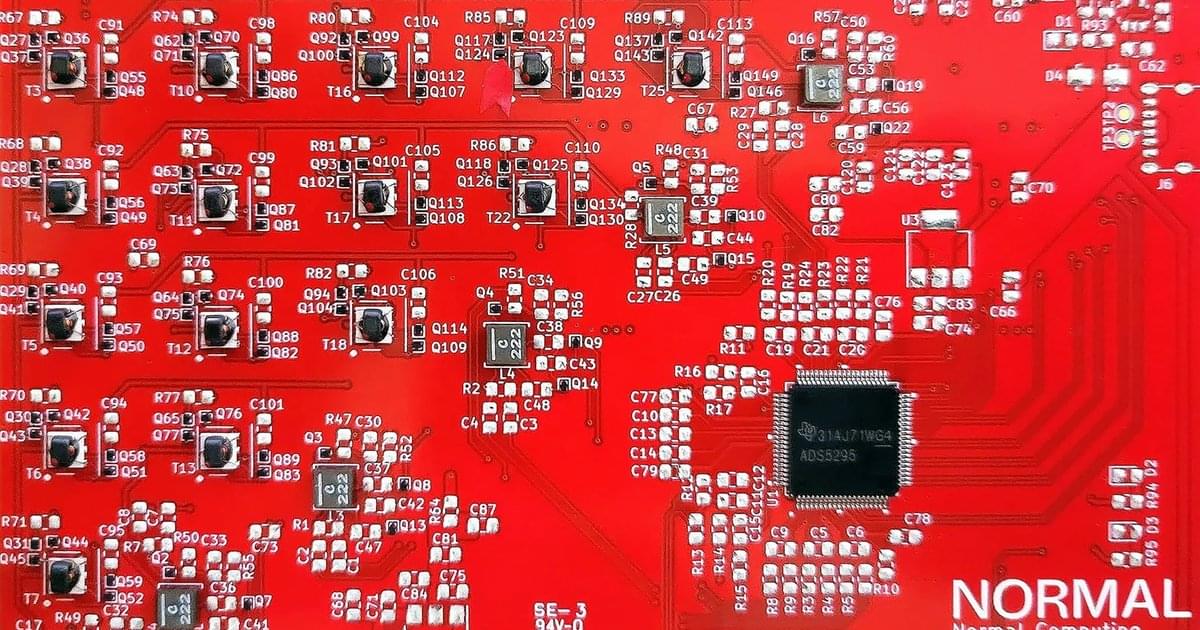

Chip startup Normal Computing has announced the successful tape-out of the world’s first thermodynamic semiconductor, CN101.

Normal Computing describes the CN101, which is designed to support AI and HPC workloads, as a “physics-based ASIC” that harnesses the “intrinsic dynamics of physical systems… achieving up to 1,000x energy consumption efficiency.”

According to the company, by using natural dynamics such as fluctuations, dissipation, and stochasticity (otherwise known as a lack of predictability), the chips are able to compute far more efficiently than traditional semiconductors.

CN101 specifically targets two tasks necessary for supporting AI workloads: solving linear algebra and matrix operations, and stochastic sampling with lattice random walk. The company says the chip represents the first step on its roadmap towards commercializing thermodynamic computing at scale and enabling significantly more AI performance per watt, rack, and dollar.
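For readers unfamiliar with the term, a lattice random walk can be sketched in ordinary software. The snippet below is only an illustration of the sampling idea on a conventional CPU: it says nothing about how the CN101 works internally, and the function names (`lattice_walk`, `mean_squared_displacement`) are hypothetical, chosen for this example.

```python
import random


def lattice_walk(steps, rng):
    """Return the final (x, y) of a simple random walk on the 2D integer lattice."""
    x = y = 0
    for _ in range(steps):
        # Each step moves one unit in one of the four lattice directions.
        dx, dy = rng.choice(((1, 0), (-1, 0), (0, 1), (0, -1)))
        x += dx
        y += dy
    return x, y


def mean_squared_displacement(steps, walkers, seed=0):
    """Monte Carlo estimate of E[x^2 + y^2] after `steps` steps."""
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    total = 0
    for _ in range(walkers):
        x, y = lattice_walk(steps, rng)
        total += x * x + y * y
    return total / walkers
```

For a simple walk of this kind the expected squared distance from the origin equals the number of steps, so `mean_squared_displacement(100, 2000)` should land close to 100, with the usual sampling noise.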

Encoding quantum information within bosonic modes offers a promising direction for hardware-efficient and fault-tolerant quantum information processing. However, achieving high-fidelity universal control over bosonic encodings using native photonic hardware remains a significant challenge. We establish a quantum control framework to prepare and perform universal logical operations on arbitrary multimode multi-photon states using a quantum photonic neural network. Central to our approach is the optical nonlinearity, which is realized through strong light-matter interaction with a three-level Λ atomic system. The dynamics of this passive interaction are asymptotically confined to the single-mode subspace, enabling the construction of deterministic entangling gates and overcoming limitations faced by many nonlinear optical mechanisms. Using this nonlinearity as the element-wise activation function, we show that the proposed architecture is able to deterministically prepare a wide array of multimode multi-photon states, including essential resource states. We demonstrate universal code-agnostic control of bosonic encodings by preparing and performing logical operations on symmetry-protected error-correcting codes. Our architecture is not constrained by symmetries imposed by evolution under a system Hamiltonian such as purely χ⁽²⁾ and χ⁽³⁾ processes, and is naturally suited to implement non-transversal gates on photonic logical qubits. Additionally, we propose an error-correction scheme based on non-demolition measurements that is facilitated by the optical nonlinearity as a building block. Our results pave the way for near-term quantum photonic processors that enable error-corrected quantum computation, and can be achieved using present-day integrated photonic hardware.

Basani, J.R., Niu, M.Y. & Waks, E. Universal logical quantum photonic neural network processor via cavity-assisted interactions. npj Quantum Inf 11, 142 (2025). https://doi.org/10.1038/s41534-025-01096-9

Altruism, the tendency to behave in ways that benefit others even if it comes at a cost to oneself, is a valuable human quality that can facilitate cooperation with others and promote meaningful social relationships. Behavioral scientists have been studying human altruism for decades, typically using tasks or games rooted in economics.

Two researchers based at Willamette University and the Laureate Institute for Brain Research recently set out to explore whether large language models (LLMs), such as the model underpinning ChatGPT, can simulate the altruistic behavior observed in humans. Their findings, published in Nature Human Behaviour, suggest that LLMs do in fact simulate altruism in specific social experiments, and offer a possible explanation for why.

“My paper with Nick Obradovich emerged from my longstanding interest in altruism and cooperation,” Tim Johnson, co-author of the paper, told Tech Xplore. “Over the course of my career, I have used computer simulation to study models in which agents in a population interact with each other and can incur a cost to benefit another party. In parallel, I have studied how people make decisions about altruism and cooperation in laboratory settings.

Nature, the master engineer, is coming to our rescue again. Inspired by scorpions, scientists have created new pressure sensors that are both highly sensitive and able to work across a wide variety of pressures.

Pressure sensors are key components in an array of applications, from medical devices and industrial control systems to robotics and human-machine interfaces. Silicon-based piezoresistive sensors are among the most common types used today, but they have a significant limitation: they cannot be both highly sensitive to small changes and effective across a wide range of pressures. Often, you have to choose one over the other.

In a major step toward intelligent and collaborative microrobotic systems, researchers at the Research Center for Materials, Architectures and Integration of Nanomembranes (MAIN) at Chemnitz University of Technology have developed a new generation of autonomous microrobots—termed smartlets—that can communicate, respond, and work together in aqueous environments.

These tiny devices, each just a millimeter in size, are fully integrated with onboard electronics, sensors, actuators, and energy systems. They are able to receive and transmit optical signals, respond to stimuli with motion, and exchange information with other microrobots in their vicinity.

The findings are published in Science Robotics, in a paper titled “Si chiplet–controlled 3D modular microrobots with smart communication in natural aqueous environments.”

A line of engineering research seeks to develop computers that can tackle a class of challenges called combinatorial optimization problems. These are common in real-world applications such as arranging telecommunications, scheduling, and travel routing to maximize efficiency.

Unfortunately, today’s technologies run into limits for how much processing power can be packed into a computer chip, while training artificial-intelligence models demands tremendous amounts of energy.

Researchers at UCLA and UC Riverside have demonstrated a new approach that overcomes these hurdles to solve some of the most difficult optimization problems. The team designed a system that processes information using a network of oscillators, components that move back and forth at certain frequencies, rather than representing all data digitally. This type of computer architecture, called an Ising machine, is particularly well suited to parallel computing, in which numerous complex calculations are carried out simultaneously. When the oscillators are in sync, the optimization problem is solved.
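To make the oscillator idea concrete, here is a rough software sketch of how coupled phase oscillators can settle into an answer to a small optimization problem, in this case max-cut on a graph. It is not the UCLA and UC Riverside hardware or their method: it assumes a generic textbook-style phase model (coupling between neighbours plus a second-harmonic term that pushes each phase toward 0 or π), and the function names and all parameter values are hypothetical.

```python
import math
import random


def cut_size(edges, spins):
    """Number of edges whose two endpoints carry opposite spins."""
    return sum(1 for i, j in edges if spins[i] != spins[j])


def oscillator_maxcut(n, edges, steps=2000, dt=0.05, coupling=1.0,
                      lock_max=2.0, restarts=5, seed=0):
    """Heuristic max-cut using simulated phase oscillators (illustrative only)."""
    rng = random.Random(seed)
    neighbors = [[] for _ in range(n)]
    for i, j in edges:
        neighbors[i].append(j)
        neighbors[j].append(i)

    best_spins, best_cut = None, -1
    for _ in range(restarts):
        theta = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
        for step in range(steps):
            # Assumed schedule: slowly ramp up the phase-locking strength.
            lock = lock_max * step / steps
            new_theta = []
            for i in range(n):
                # Coupling drives connected oscillators toward opposite phases.
                pull = sum(math.sin(theta[i] - theta[j]) for j in neighbors[i])
                # Second-harmonic term drives each phase toward 0 or pi.
                drift = coupling * pull - lock * math.sin(2.0 * theta[i])
                new_theta.append(theta[i] + dt * drift)  # explicit Euler step
            theta = new_theta
        # Read out one spin per oscillator from its settled phase.
        spins = [1 if math.cos(t) >= 0.0 else -1 for t in theta]
        cut = cut_size(edges, spins)
        if cut > best_cut:
            best_spins, best_cut = spins, cut
    return best_spins, best_cut
```

Calling `oscillator_maxcut(4, [(0, 1), (1, 2), (2, 3), (3, 0)])` returns a list of ±1 spins, one per node, together with the number of edges cut. Like any heuristic of this kind, it is not guaranteed to find the best cut on harder graphs, and the fixed step size `dt` would need to shrink for graphs with many connections per node.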