But treat your Physarum polycephalum well, or it could die.

The Irish mathematician and physicist William Rowan Hamilton, who was born 220 years ago last month, is famous for carving some mathematical graffiti into Dublin’s Broome Bridge in 1843.

But in his lifetime, Hamilton’s reputation rested on work done in the 1820s and early 1830s, when he was still in his twenties. He developed new mathematical tools for studying light rays (or “geometric optics”) and the motion of objects (“mechanics”).

Intriguingly, Hamilton developed his mechanics using an analogy between the path of a light ray and that of a material particle.

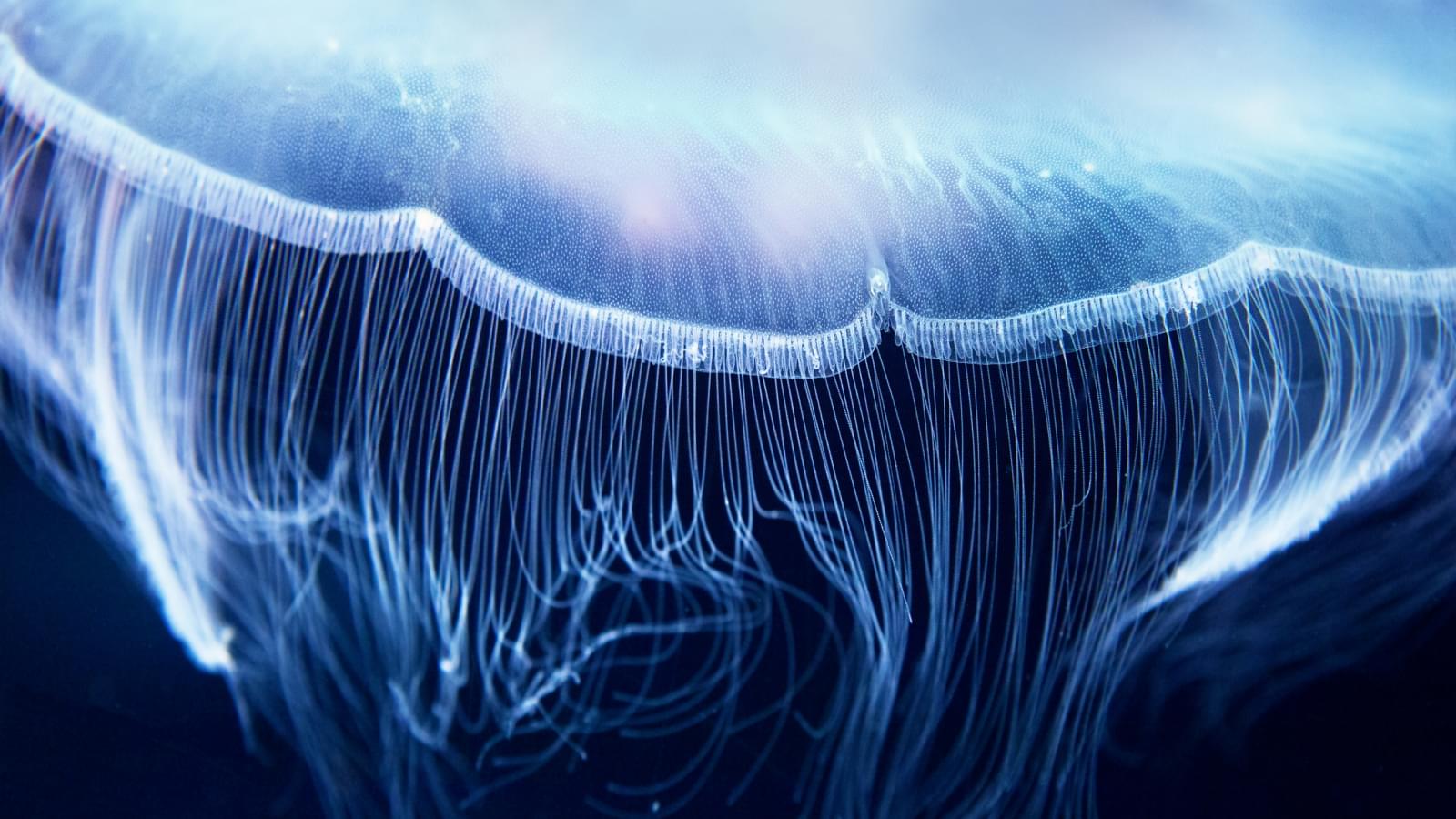

Fluorescent proteins, which can be found in a variety of marine organisms, absorb light at one wavelength and emit it at another, longer wavelength; this is, for instance, what gives some jellyfish the ability to glow. For this reason, biologists use them as genetically encoded tags, fusing them to other proteins to label cells.

The researchers found that the fluorophore in these proteins, the part that enables the emission of light, can be used as a qubit because it can enter a metastable triplet state. In this state, the molecule has absorbed light and moved into an excited state in which two of its highest-energy electrons have parallel spins. The state lasts for a brief period before decaying. In quantum mechanical terms, the molecule is in a superposition of multiple states at once until it is directly observed or disrupted by external interference.

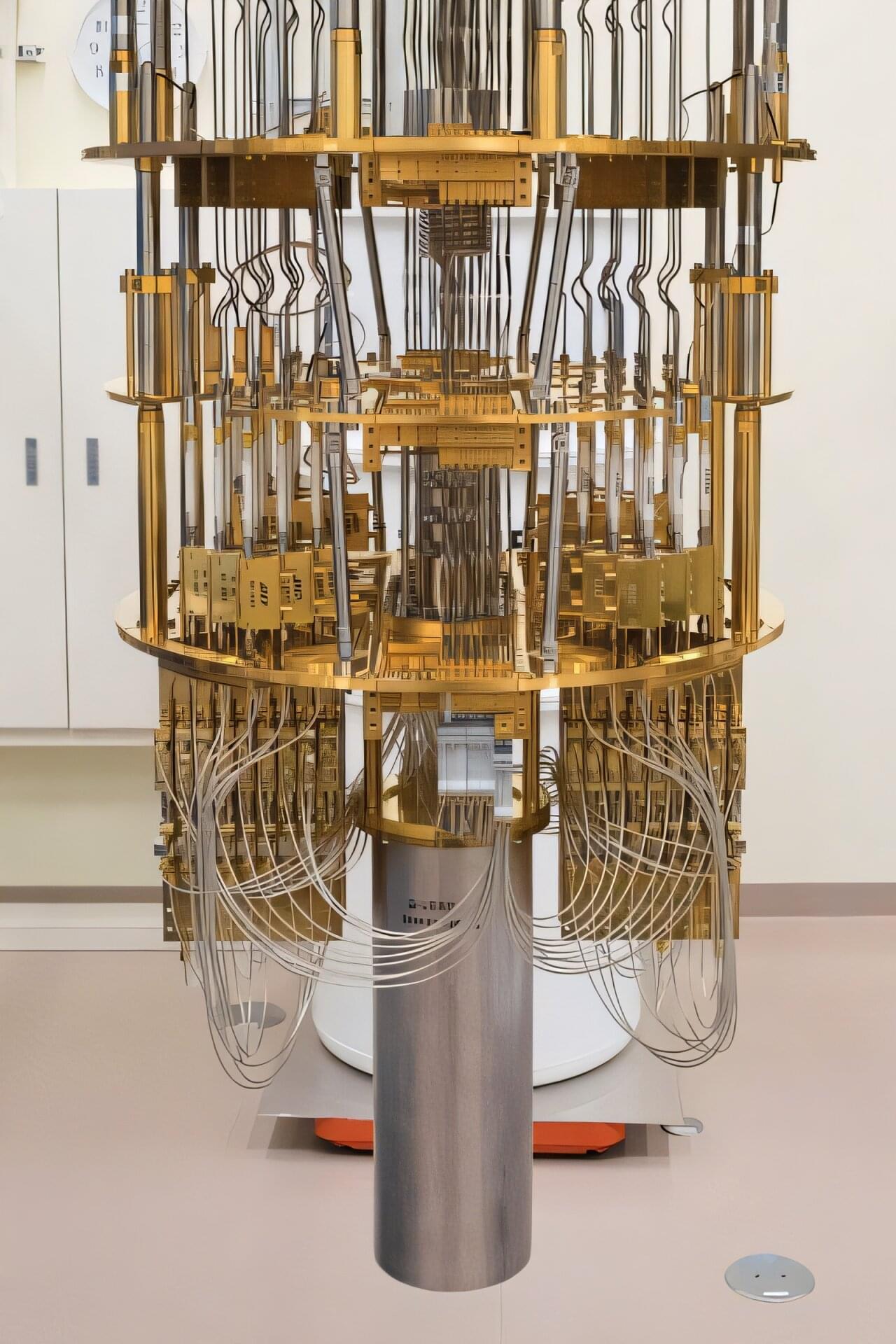

Quantum computers will need large numbers of qubits to tackle challenging problems in physics, chemistry, and beyond. Unlike classical bits, qubits can exist in two states at once—a phenomenon called superposition. This quirk of quantum physics gives quantum computers the potential to perform certain complex calculations better than their classical counterparts, but it also means the qubits are fragile. To compensate, researchers are building quantum computers with extra, redundant qubits to correct any errors. That is why robust quantum computers will require hundreds of thousands of qubits.

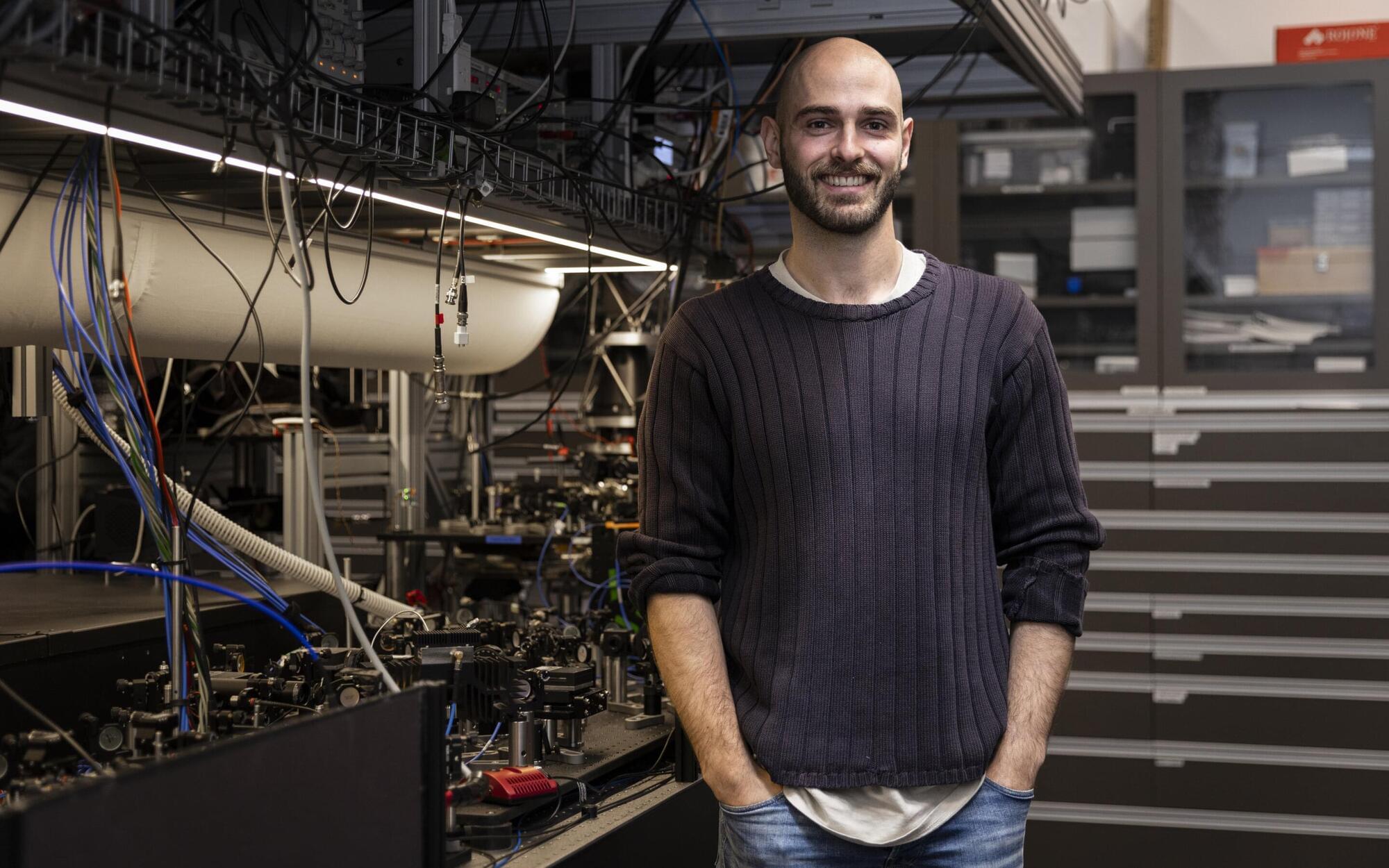

Now, in a step toward this vision, Caltech physicists have created the largest qubit array ever assembled: 6,100 neutral-atom qubits trapped in a grid by lasers. Previous arrays of this kind contained only hundreds of qubits.

This milestone comes amid a rapidly growing race to scale up quantum computers. There are several approaches in development, including those based on superconducting circuits, trapped ions, and neutral atoms, as used in the new study.

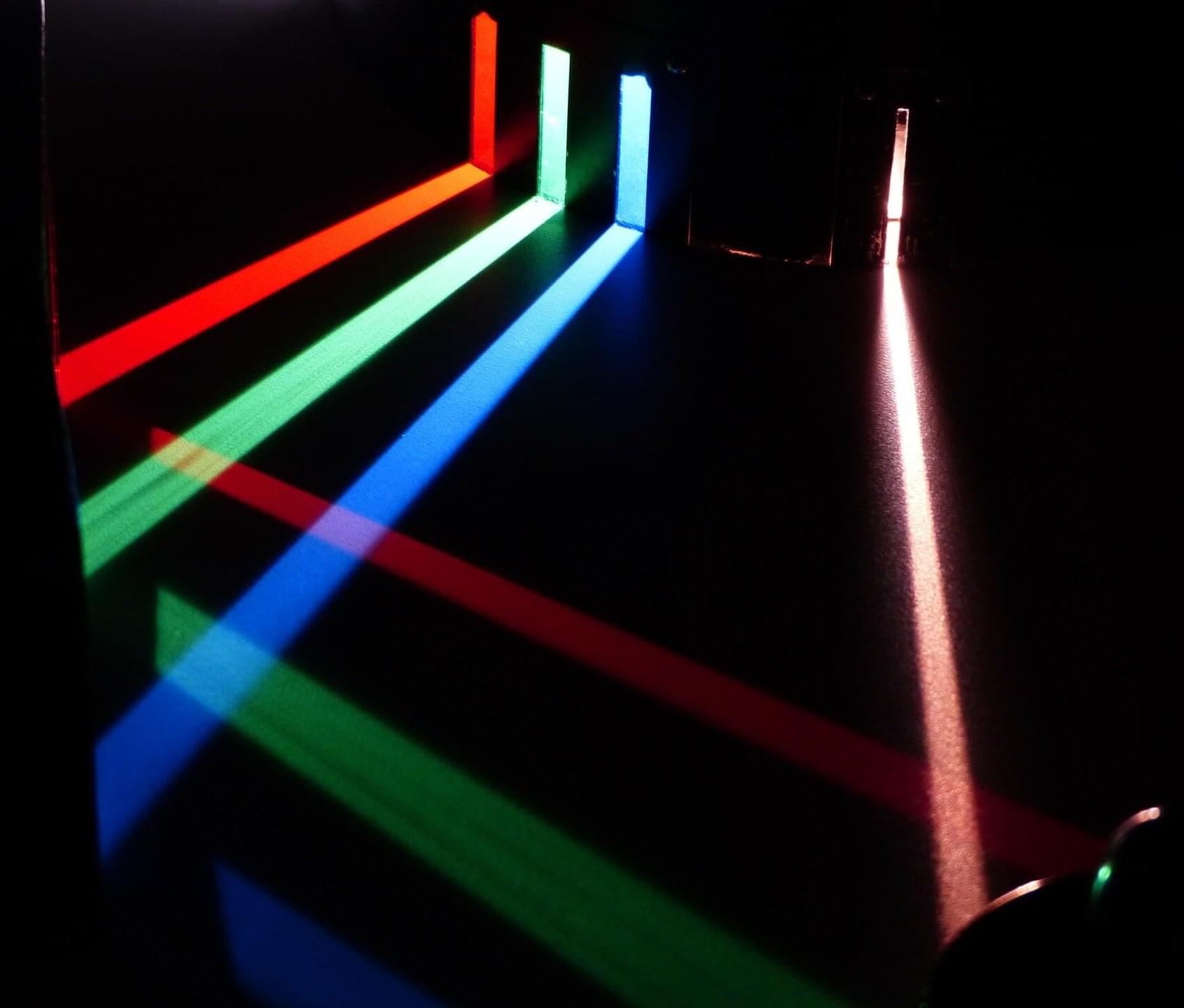

When you shine a flashlight into a glass of water, the beam bends. That simple observation, familiar since ancient times, hides one of the oldest puzzles in physics: what really happens to the momentum of light when it enters a medium?

In quantum physics, light is not just a wave—it also behaves like a particle, carrying energy and momentum. For more than a century, scientists have debated whether light’s momentum inside matter is larger or smaller than in empty space. The two competing answers are known as the Minkowski momentum, which is larger and seems to explain how light bends, and the Abraham momentum, which is smaller and matches the actual push or pull that light exerts on the medium.

The controversy never went away because experiments seemed to confirm both sides. Some setups measured the larger Minkowski value, others supported Abraham, leaving physicists with a paradox.
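For reference, the two candidate momenta are usually written as follows for a single photon of angular frequency $\omega$ in a medium of refractive index $n$. This is the standard textbook form and assumes a simple, non-dispersive medium; the symbols are not taken from the article itself.

$$
p_{\text{Minkowski}} = \frac{n\hbar\omega}{c}, \qquad p_{\text{Abraham}} = \frac{\hbar\omega}{n c}
$$

Taking water with $n \approx 1.33$ as an illustrative case, the Minkowski value is about 1.33 times the vacuum momentum $\hbar\omega/c$, while the Abraham value is about 0.75 times it.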

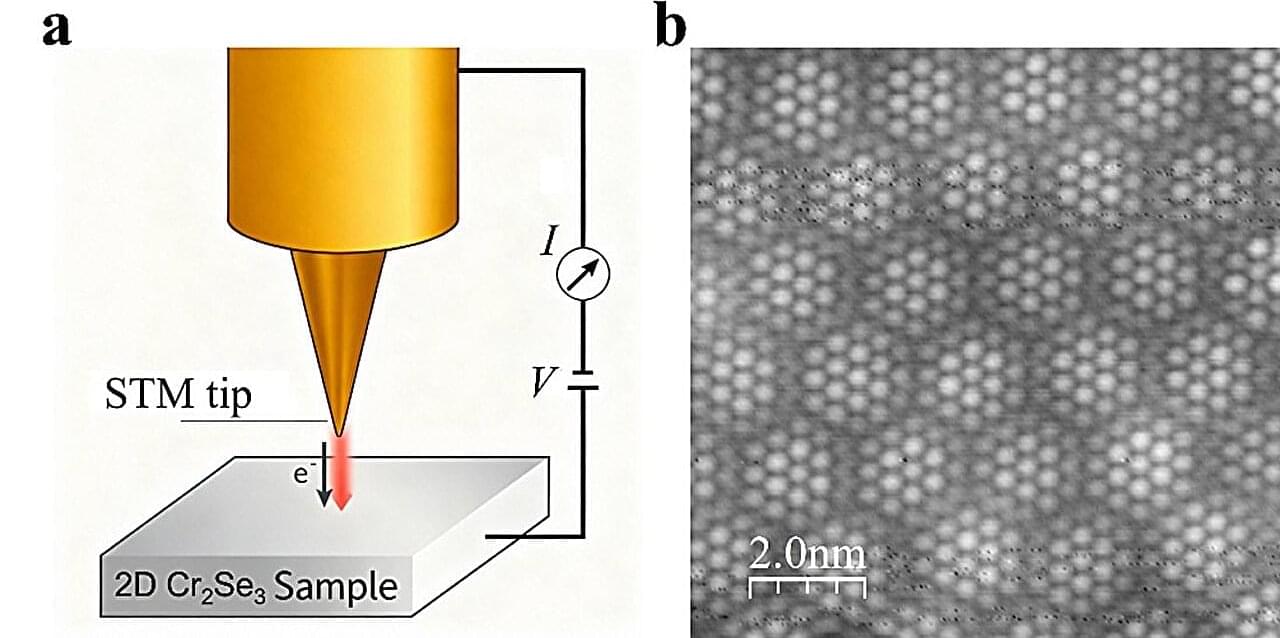

Researchers at the National University of Singapore (NUS) have observed a doping-tunable charge density wave (CDW) in a single-layer semiconductor, chromium(III) selenide (Cr2Se3), extending the CDW phenomenon from metals to doped semiconductors.

CDWs are intriguing electronic patterns widely observed in metallic two-dimensional (2D) transition metal chalcogenides (TMCs). The study of CDWs provides insights into emergent orders in quantum materials, where electron correlations play a non-negligible role. However, most reported TMCs that exhibit CDWs are intrinsic metals, and their carrier density is tuned predominantly through intercalation or atomic substitution. These approaches may introduce impurities or defects that complicate the understanding of the underlying mechanisms.

A research team led by Professor Chen Wei from the Department of Physics and the Department of Chemistry at NUS synthesized single-layer semiconducting Cr2Se3 and demonstrated the CDW phenomenon using scanning tunneling microscopy (STM).