How Apple’s M2 chip builds on the M1 to take on Intel and AMD.

The M1 is a great chip. Essentially an “X” variant of the A14, it takes the iPhone and iPad processor and doubles the high-performance CPU cores, GPU cores, and memory bandwidth. The M1 is so good that it’s as well suited to tablets and thin-and-light laptops as it is to desktops, easily outperforming any competing chip with similar power draw and offering performance similar to that of processors that use at least twice as much energy.

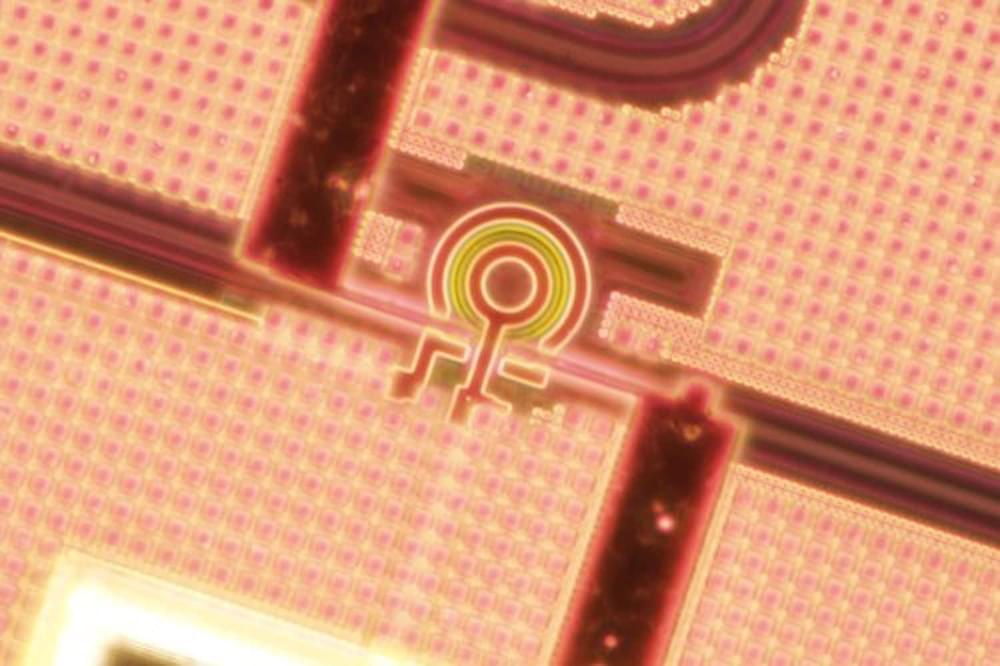

Now, a year and a half later and after delivering three more powerful variants of the M1 (M1 Pro, M1 Max, and M1 Ultra), Apple is ready for the next generation. Announced at WWDC and appearing first in the new MacBook Air and 13-inch MacBook Pro, the M2 is essentially the system-on-chip we predicted it would be: what the M1 is to the A14, the M2 is to the A15. It’s made of 20 billion transistors, 25 percent more than the M1, and while it’s still built on a 5nm manufacturing process, it uses a new, enhanced “second-generation” version of that process.

Here are the most significant ways the M2 improves on the M1.