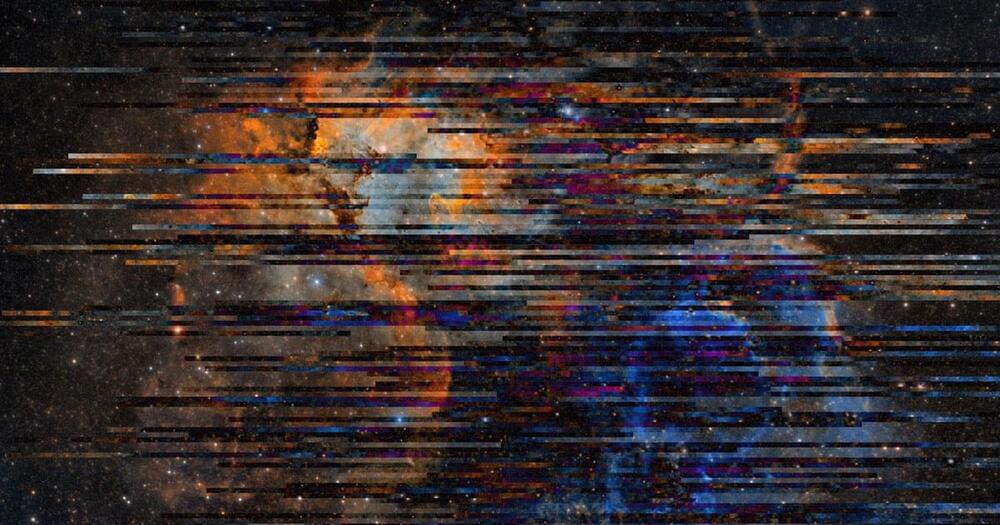

Ever wonder what happens when you fall into a black hole? Now, thanks to a new, immersive visualization produced on a NASA supercomputer, viewers can plunge into the event horizon, a black hole’s point of no return.

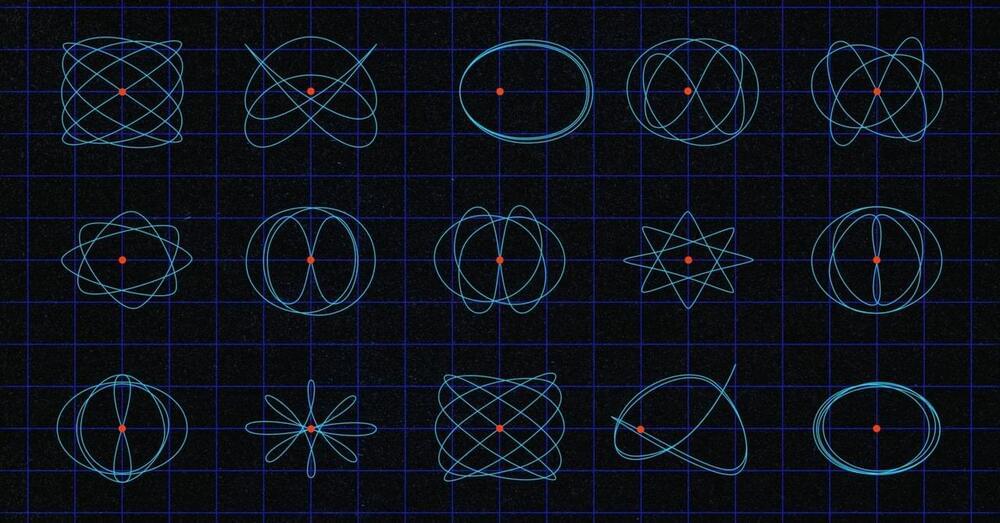

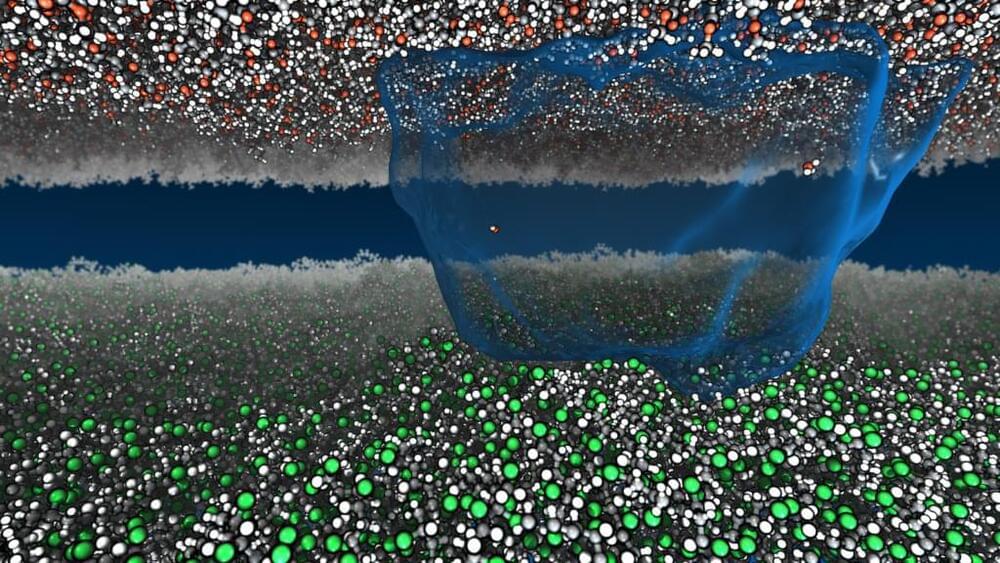

In this visualization of a flight toward a supermassive black hole, labels highlight many of the fascinating features produced by the effects of general relativity along the way. Produced on a NASA supercomputer, the simulation tracks a camera as it approaches, briefly orbits, and then crosses the event horizon — the point of no return — of a monster black hole much like the one at the center of our galaxy. (Video: NASA’s Goddard Space Flight Center/J. Schnittman and B. Powell)

“People often ask about this, and simulating these difficult-to-imagine processes helps me connect the mathematics of relativity to actual consequences in the real universe,” said Jeremy Schnittman, an astrophysicist at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, who created the visualizations. “So I simulated two different scenarios, one where a camera — a stand-in for a daring astronaut — just misses the event horizon and slingshots back out, and one where it crosses the boundary, sealing its fate.”