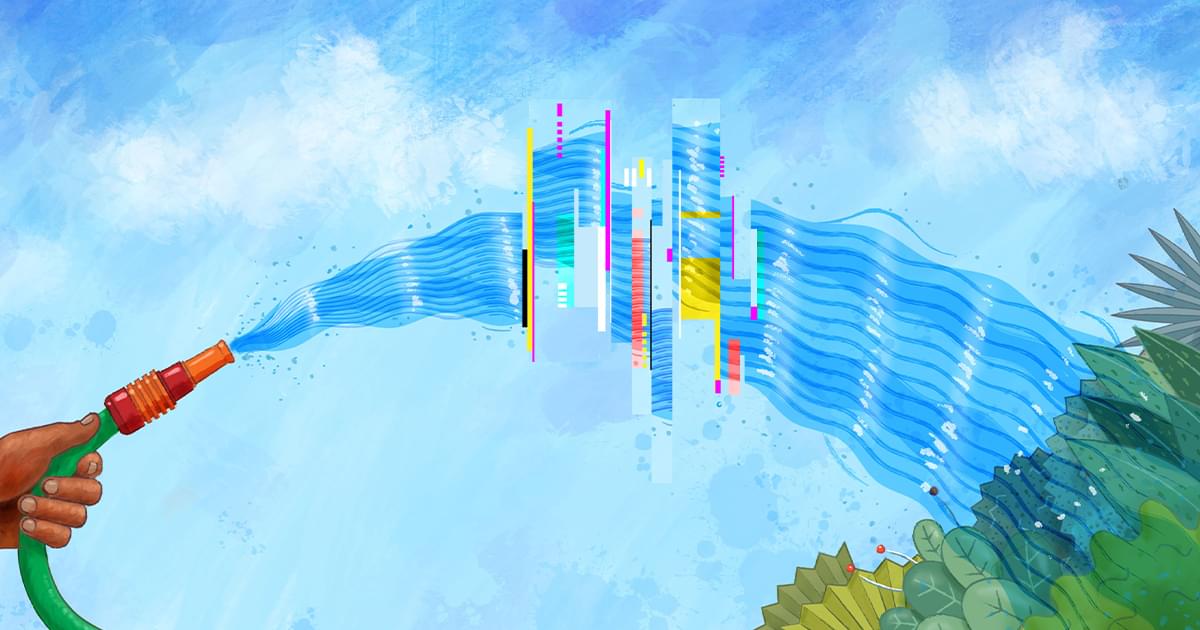

Physicists like Sean Carroll argue that quantum mechanics is not only a valuable way of interpreting the world but actually describes reality, and that the wavefunction, the central mathematical object of quantum mechanics, describes a real object in the world. But philosophers Raoni Arroyo and Jonas R. Becker Arenhart warn that the arguments for wavefunction realism are deeply confused. At best, they show only that the wavefunction is a useful element inside the theoretical framework of quantum mechanics. But this does nothing to show that the framework should be interpreted as true or that its elements are real. Wavefunction realists confuse two different levels of debate and lack any justification for their realism. The real question is: does a theory need to be true to be useful?

1. Wavefunction realism

Quantum mechanics is probably our most successful scientific theory. So, if one wants to know what the world is made of, or what the world looks like at the fundamental level, one is well-advised to search for the answers in this theory. What does it say about these questions? Well, that is a difficult question, with no single answer. Many interpretative options arise, and one quickly ends up in a dispute about the pros and cons of the different views. Wavefunction realists attempt to overcome those difficulties by looking directly at the formalism of the theory: the theory describes the behavior of a mathematical entity, the wavefunction, so why not think that quantum mechanics is, fundamentally, about wavefunctions? The view that emerges is, as Alyssa Ney puts it, that: