Bach was a musical master of mathematical manipulation.

Humans are known to make mental associations between various real-world stimuli and concepts, including colors. For example, red and orange are typically associated with words such as “hot” or “warm,” blue with “cool” or “cold,” and white with “clean.”

Interestingly, past psychology studies have shown that although some of these associations may arise from people’s direct experience of seeing colors in the world around them, many people who were born blind still make similar color-adjective associations. The processes underpinning the formation of associations between colors and specific adjectives have not yet been fully elucidated.

Researchers at the University of Wisconsin-Madison recently carried out a study to investigate further how language contributes to the way we learn about color, using mathematical and computational tools, including OpenAI’s GPT-4 large language model (LLM). Their findings, published in Communications Psychology, suggest that color-adjective associations are rooted in the structure of language itself and are thus not learned through experience alone.

Physicists have long sorted particles into two classes: fermions, such as electrons, and bosons, such as photons. But now, a bold new idea is challenging this tidy system. Scientists at Rice University in Texas believe there may be a third kind of particle, one that doesn’t follow the rules of fermions or bosons. They’ve developed a mathematical model showing how these unusual entities, called paraparticles, could exist in real materials without breaking the laws of physics.

“We determined that new types of particles we never knew of before are possible,” says Kaden Hazzard, one of the researchers behind the study. Along with co-author Zhiyuan Wang, Hazzard used advanced math to explore this idea.

Their work, published in Nature, suggests that paraparticles might arise in special systems and act differently than anything scientists have seen before.

An avalanche is caused by a chain reaction of events. A vibration or a change in terrain can have a cascading and devastating impact.

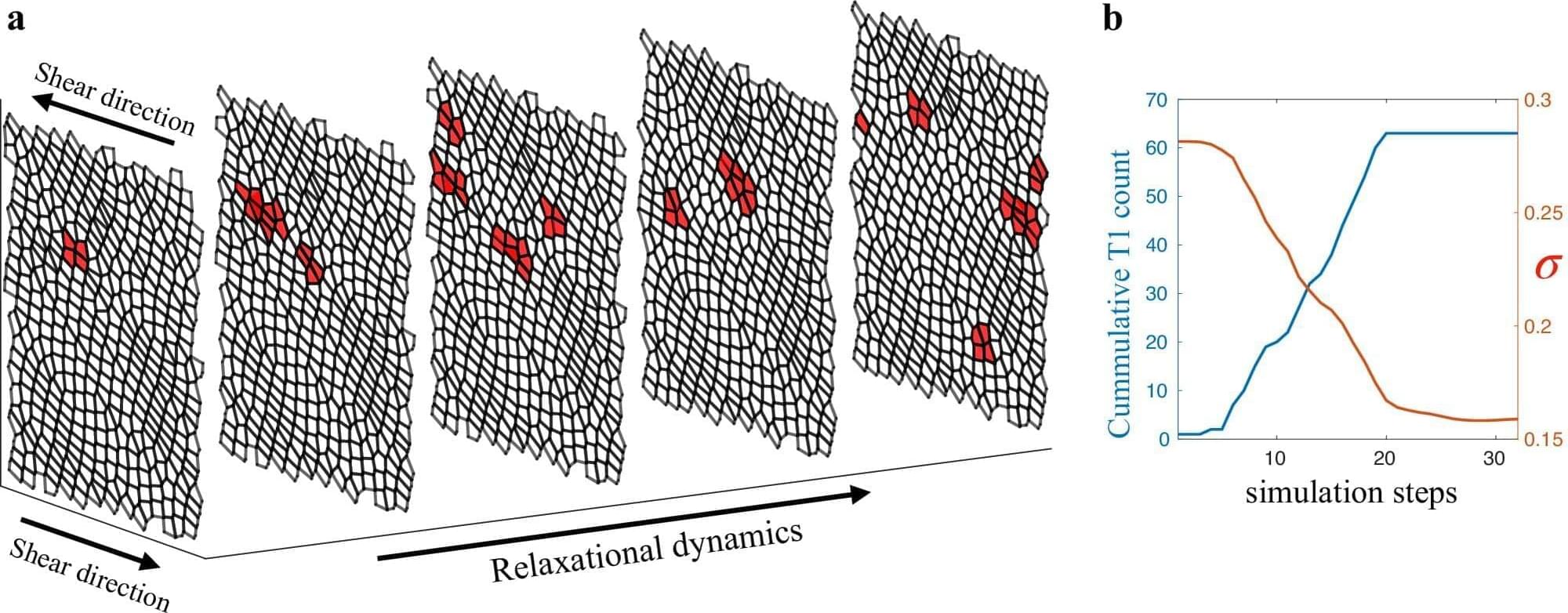

A similar process may happen when living tissues are pushed or pulled, according to new research published in Nature Communications by Northeastern University doctoral student Anh Nguyen, supervised by Northeastern physics professor Max Bi.

As theoretical physicists, Bi and Nguyen use computational modeling and mathematics to understand the mechanical processes that organisms undergo at the cellular level. In this latest work, they observed that when subjected to sufficient stress, tissues can “suddenly and dramatically rearrange themselves,” much as avalanches form in the wild.
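The chain-reaction idea behind an avalanche can be made concrete with the classic Bak-Tang-Wiesenfeld sandpile, a standard toy model of cascades. This is a minimal sketch for intuition only: it is not the tissue model Nguyen and Bi used, and the grid size, the toppling threshold of 4, and the helper names `topple` and `drive` are illustrative assumptions.

```python
import random

THRESHOLD = 4  # assumption: a site topples once it holds this many grains


def topple(grid):
    """Relax the grid in place; return the number of topplings (the avalanche size).

    Toy BTW sandpile, NOT the tissue model from the study."""
    n = len(grid)
    size = 0
    unstable = [(i, j) for i in range(n) for j in range(n) if grid[i][j] >= THRESHOLD]
    while unstable:
        i, j = unstable.pop()
        if grid[i][j] < THRESHOLD:
            continue  # already relaxed after being queued
        grid[i][j] -= THRESHOLD  # give one grain to each of the four neighbors
        size += 1
        if grid[i][j] >= THRESHOLD:
            unstable.append((i, j))
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            a, b = i + di, j + dj
            if 0 <= a < n and 0 <= b < n:  # grains pushed past the edge are lost
                grid[a][b] += 1
                if grid[a][b] >= THRESHOLD:
                    unstable.append((a, b))  # a neighbor tipped over: the cascade spreads
    return size


def drive(n=20, steps=5000, seed=0):
    """Drop grains one at a time at random sites and record each avalanche size."""
    rng = random.Random(seed)
    grid = [[0] * n for _ in range(n)]
    sizes = []
    for _ in range(steps):
        grid[rng.randrange(n)][rng.randrange(n)] += 1
        sizes.append(topple(grid))
    return grid, sizes


grid, sizes = drive()
```

Once the pile has built up, a single added grain usually causes little or nothing, but occasionally it sets off a cascade that sweeps across much of the grid, which is the intuition behind the avalanche analogy.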

Curt Jaimungal and Graham Priest sit down to discuss various philosophical themes including the nature of truth, logic and paradoxes, the philosophy of mathematics, concepts of nothingness and existence, and the influence of Eastern philosophy on Western logical traditions.

Consider signing up for TOEmail at https://www.curtjaimungal.org.

Support TOE:

- Patreon: https://patreon.com/curtjaimungal (early access to ad-free audio episodes!)

- Crypto: https://tinyurl.com/cryptoTOE

- PayPal: https://tinyurl.com/paypalTOE

- TOE Merch: https://tinyurl.com/TOEmerch

Follow TOE:

- NEW Get my ‘Top 10 TOEs’ PDF + Weekly Personal Updates: https://www.curtjaimungal.org

- Instagram: https://www.instagram.com/theoriesofeverythingpod

- TikTok: https://www.tiktok.com/@theoriesofeverything_

- Twitter: https://twitter.com/TOEwithCurt

- Discord Invite: https://discord.com/invite/kBcnfNVwqs

- iTunes: https://podcasts.apple.com/ca/podcast/better-left-unsaid-wit…1521758802

- Pandora: https://pdora.co/33b9lfP

- Spotify: https://open.spotify.com/show/4gL14b92xAErofYQA7bU4e

- Subreddit r/TheoriesOfEverything: https://reddit.com/r/theoriesofeverything

Join this channel to get access to perks:

https://www.youtube.com/channel/UCdWIQh9DGG6uhJk8eyIFl1w/join


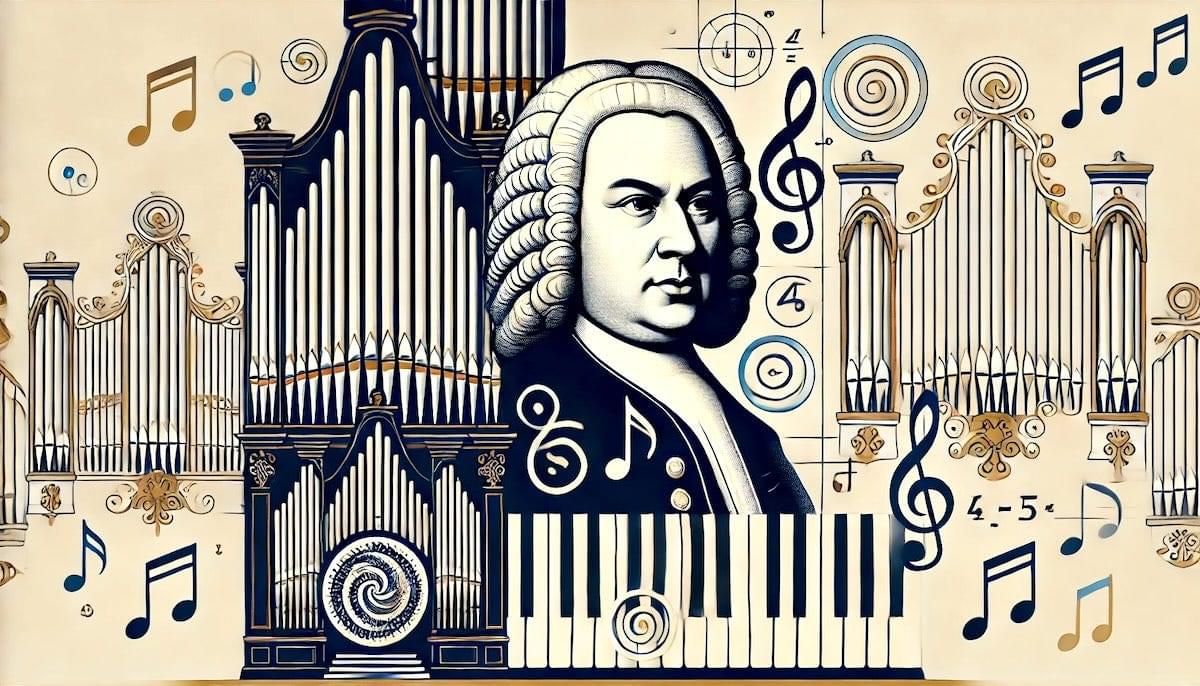

An exact expression for a key process needed in many quantum technologies has been derived by a RIKEN mathematical physicist and a collaborator. This could help to guide advances in quantum technologies.

Many emerging quantum technologies such as quantum computing and quantum communication rely on entanglement.

Entanglement is the mysterious phenomenon whereby two or more particles become so closely interconnected that, no matter how great the distance between them, they exhibit quantum correlations that far exceed the mutual relations achievable in classical systems.
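A standard way to put a number on “correlations that far exceed” classical ones is the CHSH Bell inequality: any local classical model keeps |S| ≤ 2, while an entangled pair can reach 2√2. The sketch below evaluates the textbook singlet-state correlation E(a, b) = -cos(a - b) at the usual optimal measurement angles and brute-forces the classical bound. It illustrates entanglement in general and is not the expression derived by the RIKEN researchers; the function names are hypothetical.

```python
import math
from itertools import product


def correlation(a, b):
    """Quantum correlation for a singlet pair measured along in-plane angles a, b (radians)."""
    return -math.cos(a - b)


def chsh(a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return correlation(a, b) - correlation(a, b2) + correlation(a2, b) + correlation(a2, b2)


def classical_max():
    """Largest |S| over all deterministic local strategies with outcomes +/-1.

    Mixtures of these strategies cannot do better, so this is the classical bound."""
    best = 0
    for A, A2, B, B2 in product((-1, 1), repeat=4):
        best = max(best, abs(A * B - A * B2 + A2 * B + A2 * B2))
    return best


S = chsh(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(classical_max())    # 2
print(round(abs(S), 4))   # 2.8284, i.e. 2*sqrt(2)
```

The gap between 2 and 2√2 ≈ 2.83 is exactly the kind of quantum advantage in correlations that technologies built on entanglement try to exploit.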

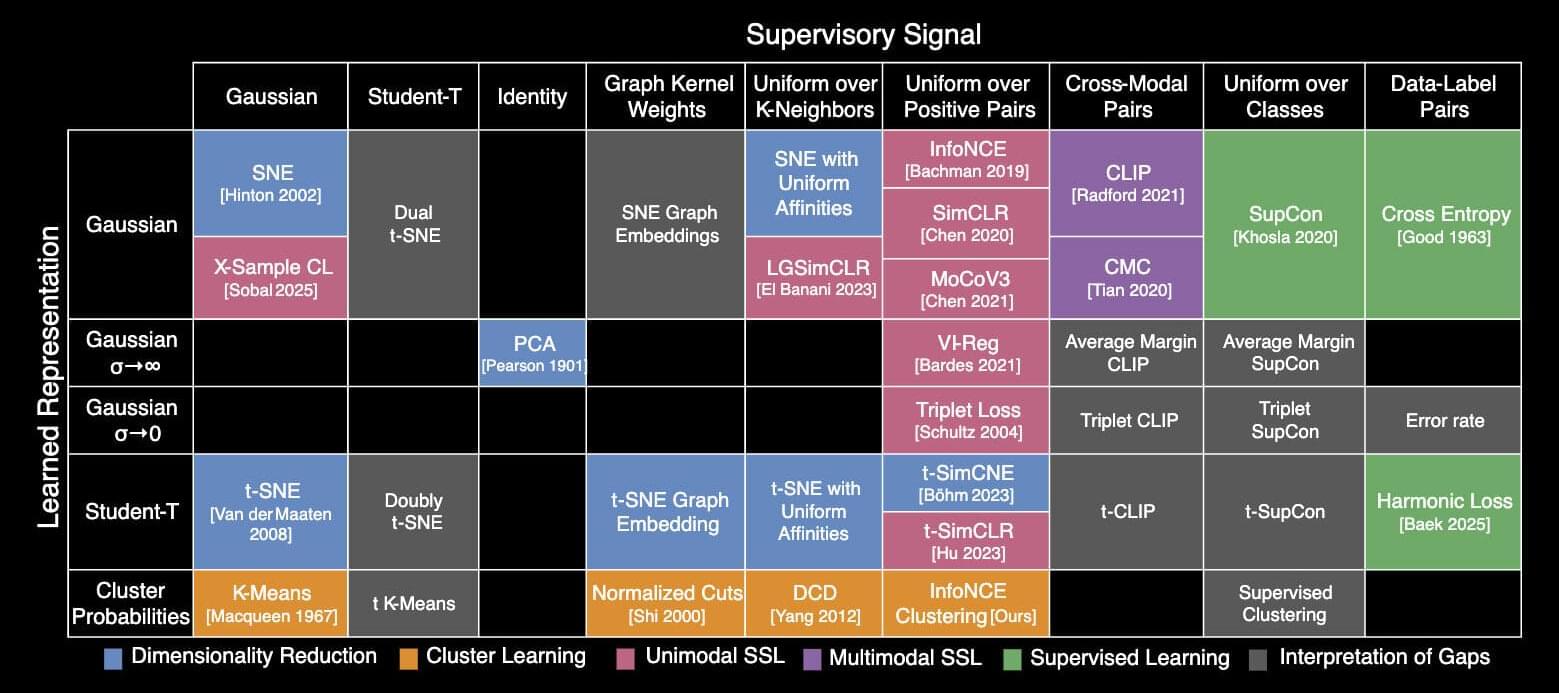

MIT researchers have created a periodic table that shows how more than 20 classical machine-learning algorithms are connected. The new framework sheds light on how scientists could fuse strategies from different methods to improve existing AI models or come up with new ones.

For instance, the researchers used their framework to combine elements of two different algorithms to create a new image-classification algorithm that performed 8% better than current state-of-the-art approaches.

The periodic table stems from one key idea: All these algorithms learn a specific kind of relationship between data points. While each algorithm may accomplish that in a slightly different way, the core mathematics behind each approach is the same.

Eric Lerner is a popular science writer, plasma physicist, and long-time collaborator of the late Nobel laureate Hannes Alfvén. He’s also one of the featured speakers at our Beyond the Big Bang event this summer in Sesimbra, Portugal. For over thirty years, Lerner has been a leading voice in plasma cosmology and a critic of Big Bang cosmology; the central claim of his landmark book The Big Bang Never Happened (affiliate link to purchase: https://amzn.to/4jEez8H) is a non-expanding, steady-state universe. Our conversation dives into the history and foundations of plasma cosmology, from early Birkeland current experiments in the Arctic that revealed the electrical nature of the aurora, to the role of plasma dynamics in the shape and behavior of galaxies. All the hits are in here: Halton Arp, whose Atlas of Peculiar Galaxies seriously challenged the way mainstream cosmologists interpret redshift; the electromagnetic forces often overlooked in mainstream cosmology; and the filamentary plasma structures and cosmic-scale currents that strain the limits of the standard model. This is a deep exploration of alternative cosmology, electric universe theories, and the scientists behind them, from Fred Hoyle to Anthony Peratt, who refused to patch a dying theory and instead asked whether the universe might be eternal.

MAKE HISTORY WITH US THIS SUMMER:

https://demystifysci.com/demysticon-2025

PARADIGM DRIFT

https://demystifysci.com/paradigm-dri…

ABOUT US: Anastasia completed her PhD studying bioelectricity at Columbia University. When not talking to brilliant people or making movies, she spends her time painting, reading, and guiding backcountry excursions. Shilo also did his PhD at Columbia, studying the elastic properties of molecular water. When he’s not in the film studio, he’s exploring sound in music. They are both freelance professors at various universities.

PATREON: get episodes early + join our weekly Patron Chat https://bit.ly/3lcAasB

MERCH: Rock some DemystifySci gear: https://demystifysci.myspreadshop.com…

AMAZON: Do your shopping through this link: https://amzn.to/3YyoT98

DONATE: https://bit.ly/3wkPqaD

SUBSTACK: https://substack.com/@UCqV4_7i9h1_V7h…

BLOG: http://DemystifySci.com/blog

RSS: https://anchor.fm/s/2be66934/podcast/rss

MAILING LIST: https://bit.ly/3v3kz2S

MUSIC: Shilo Delay: https://g.co/kgs/oty671

00:00:00 – Go!

00:05:59 – The Cosmological Pendulum.

00:14:09 – Knowledge and Societal Progress.

00:22:18 – Big Bang Origins & Ideological Influence.

00:29:04 – Technological Stagnation Since the 1970s.

00:32:02 – Ideology Replacing Empiricism in Science.

00:36:14 – Gravity vs. Electromagnetism in Cosmology.

00:41:02 – Evolution of Scientific Funding Models.

00:51:11 – Birkeland Currents & Plasma Discoveries.

00:56:25 – Centralization of Postwar Science.

01:00:18 – Orthodoxy in Medicine.

01:00:59 – Decline of Fundamental Research.

01:04:39 – Plasma Cosmology vs. Dark Matter.

01:08:52 – Capitalism and Research Priorities.

01:14:22 – Sustaining Independent Science.

01:15:16 – Birkeland, Alfvén & Plasma History.

01:22:24 – Einstein’s Rise & Cultural Legacy.

01:31:05 – General Relativity and Elitism.

01:34:44 – Chapman’s Math vs. Empirics.

01:37:12 – Alfvén’s Plasma Breakthroughs.

01:43:39 – Plasma Physics vs. Big Bang Theories.

01:47:39 – Nobel Prize & MHD’s Hidden Flaws.

01:58:00 – Filamentary Plasmas vs. MHD.

02:00:47 – Pseudoplasmas and MHD Limits.

02:02:58 – Defining Plasma as a State of Matter.

02:06:00 – Where Plasma Exists in Nature.

02:11:17 – The Myth of Mathematical Models.

02:21:10 – Scientific vs. Aesthetic Cosmology.

02:29:55 – Failed Big Bang Predictions.

02:36:01 – Alternatives to the Expanding Universe.

02:43:09 – Large-Scale Structures and Cosmic Age.

02:50:10 – Public Science and Open Debate.

02:55:16 – Toward a New Cosmological Paradigm.

#plasma #bigbang #darkmatter #electricuniverse #cosmology #astrophysics #scientificrevolution #fusionenergy #philosophypodcast #sciencepodcast #longformpodcast

ICLR 2025

Shaden Alshammari, John Hershey, Axel Feldmann, William T. Freeman, Mark Hamilton.

MIT, Microsoft, Google.

[https://openreview.net/forum?id=WfaQrKCr4X](https://openreview.net/forum?id=WfaQrKCr4X)

[https://github.com/mhamilton723/STEGO](https://github.com/mhamilton723/STEGO)