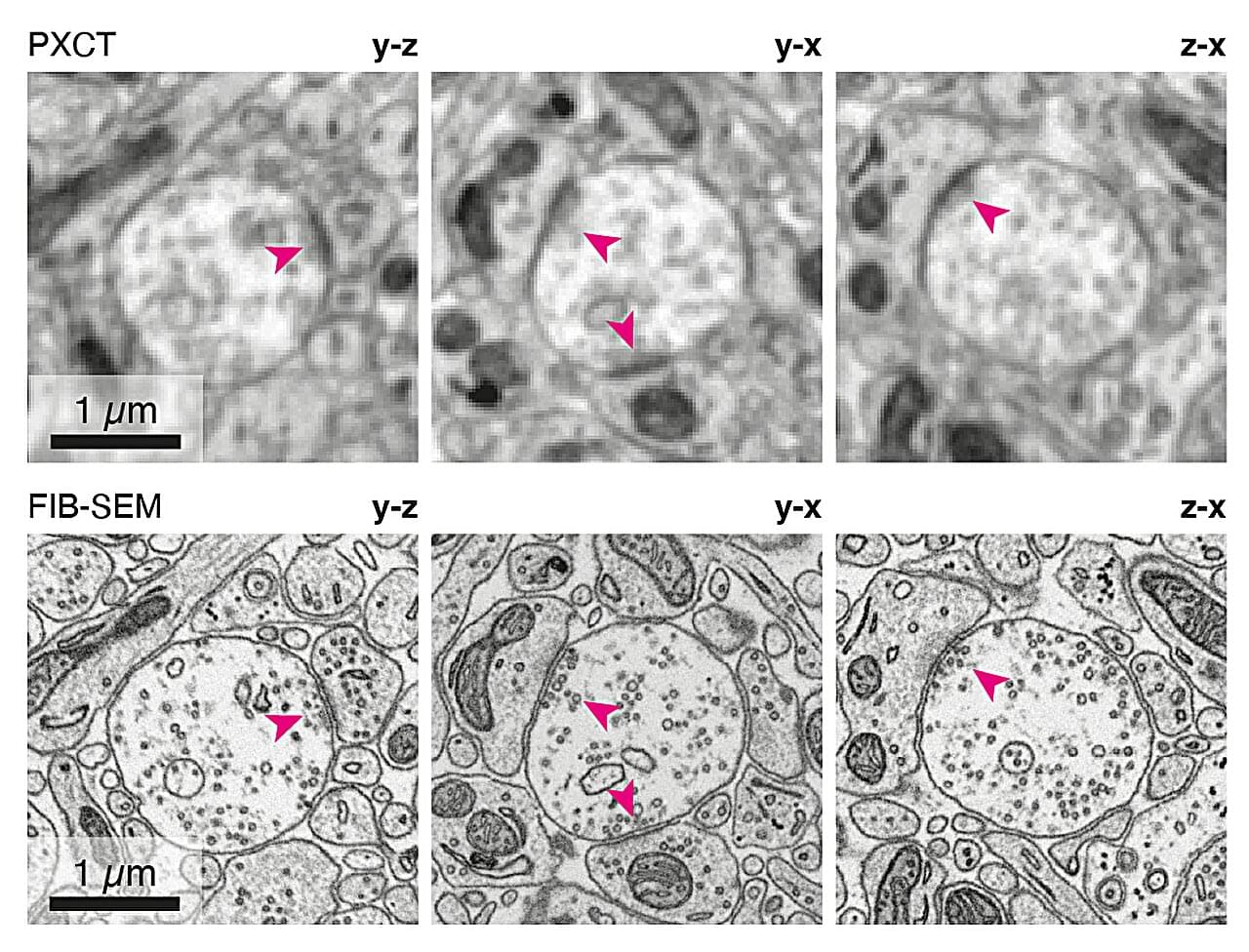

An international team of researchers led by the Francis Crick Institute, working with the Paul Scherrer Institute, has developed a new imaging protocol to capture mouse brain cell connections in precise detail. In work published in Nature Methods, they combined X-ray imaging with radiation-resistant materials sourced from the aerospace industry.

The images acquired using this technique allowed the team to see how nerve cells connect in the mouse brain, without needing to thinly slice biological tissue samples.

Volume electron microscopy (volume EM) has been the gold standard for imaging how nerve cells connect as “circuitry” inside the brain. It has paved the way for scientists to create maps of entire brains, called connectomes, first in fruit fly larvae and then in the adult fruit fly. This imaging involves cutting slices tens of nm thin (tens of thousands per mm of tissue), imaging each slice and then reassembling the images into their 3D structure.