Prometheus.

Category: law

A universal law could explain how large trades change stock prices

Financial markets are often seen as chaotic and unpredictable. Every day, traders around the world buy shares and sell assets in a whirlwind of activity. It looks like a system of total randomness—but is it really?

Scientists have long suspected that there is a hidden order under this noise, but it has been difficult to prove. Now, Yuki Sato and Kiyoshi Kanazawa of Kyoto University have provided some of the strongest evidence yet. By studying eight years of data from the Tokyo Stock Exchange (TSE), they have confirmed a long-standing hypothesis known as the square-root law (SRL) of price impact.
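The article does not state the law itself. In the price-impact literature, the square-root law is usually written as ΔP/P ≈ Y σ √(Q/V). Here Q is the total size of a large order executed in pieces (a "metaorder"), V is the daily traded volume, σ is the daily volatility, and Y is a dimensionless prefactor of order one. The Python sketch below implements only that textbook form. The function name, the default Y = 1, and the example numbers are assumptions for illustration, not values from the Sato and Kanazawa study.

```python
import math


def sqrt_law_impact(order_size: float, daily_volume: float,
                    daily_volatility: float, y: float = 1.0) -> float:
    """Expected relative price move caused by a metaorder of `order_size` shares,
    using the textbook square-root law: y * sigma * sqrt(Q / V).

    `y` is a dimensionless prefactor of order one; 1.0 is an assumed default,
    not a value fitted to TSE data.
    """
    if order_size < 0 or daily_volume <= 0 or daily_volatility < 0:
        raise ValueError("need order_size >= 0, daily_volume > 0, daily_volatility >= 0")
    return y * daily_volatility * math.sqrt(order_size / daily_volume)


# Illustrative numbers only: 2% daily volatility, 1,000,000 shares traded per day.
small = sqrt_law_impact(10_000, 1_000_000, 0.02)  # order is 1% of daily volume
large = sqrt_law_impact(40_000, 1_000_000, 0.02)  # order is 4% of daily volume
print(f"{small:.4f} {large:.4f}")  # quadrupling the order only doubles the impact
```

This concave shape is the law's key prediction: impact grows with the square root of order size, not in proportion to it.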

Texas sues TV makers for taking screenshots of what people watch

The Texas Attorney General sued five major television manufacturers, accusing them of illegally collecting their users’ data by secretly recording what they watch using Automated Content Recognition (ACR) technology.

The lawsuits target Sony, Samsung, LG, and China-based companies Hisense and TCL Technology Group Corporation. Attorney General Ken Paxton’s office also highlighted “serious concerns” about the two Chinese companies being required to follow China’s National Security Law, which could give the Chinese government access to U.S. consumers’ data.

According to complaints filed this Monday in Texas state courts, the TV makers can allegedly use ACR technology to capture screenshots of television displays every 500 milliseconds, monitor the users’ viewing activity in real time, and send this information back to the companies’ servers without the users’ knowledge or consent.
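At the alleged 500 ms interval, a TV would take two screenshots per second, or 7,200 per hour of viewing. The short Python sketch below makes that arithmetic explicit. It assumes the set captures continuously at exactly that interval for the whole viewing period, which the complaints as summarized here do not establish. The function name is hypothetical.

```python
MS_PER_HOUR = 60 * 60 * 1000  # milliseconds in one hour


def captures_in(hours: float, interval_ms: int = 500) -> int:
    """Screenshots taken during `hours` of continuous viewing, assuming one
    capture every `interval_ms` milliseconds (500 ms per the complaints)."""
    if interval_ms <= 0 or hours < 0:
        raise ValueError("interval_ms must be positive and hours non-negative")
    return int(hours * MS_PER_HOUR // interval_ms)


print(captures_in(1))  # one hour of viewing
print(captures_in(3))  # a three-hour evening
```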

Cannabis Survey Shows Rising CBD Use in Pets

“The long-term use of CBD is associated with less intense aggressive behaviors in dogs.”

Can cannabidiol (CBD) help dogs in the same way it helps humans? This is what a recent study published in Frontiers in Veterinary Science hopes to address, as a team of scientists investigated the potential benefits of giving CBD products to dogs. This study has the potential to help scientists, legislators, and the public better understand the health benefits of CBD for both humans and animals.

For the study, the researchers analyzed data obtained from the Dog Aging Project (DAP), which is designed to gain insight into dog aging and the lifestyle, diet, and environmental factors that affect it. Surveys were collected from 47,444 dog owners between December 2019 and December 2023. The first survey established a baseline of each dog's overall health status, while the second was used to determine how much CBD or hemp the owners gave their dogs and to assess changes in behavior and/or health.

In the end, the researchers found that healthy dogs were less likely to be given CBD, whereas dogs with limiting health conditions such as dementia, epilepsy, or cancer were more likely to receive it. Dogs given CBD also showed less aggressive behavior than dogs that were not. Finally, the team found higher rates of CBD use among dogs in states where CBD was legal.

New universal law predicts how most objects shatter, from dropped bottles to exploding bubbles

When a plate drops or a glass smashes, you're annoyed by the mess and the cost of replacing it. But for some physicists, the broken pieces are a source of fascination: Why does everything break into such a huge variety of sizes? Now, Emmanuel Villermaux at Aix-Marseille University in France and the University Institute of France has come up with a simple, elegant law for how objects shatter, whether they are brittle solids, liquid drops, or exploding bubbles.

Scientists have long suspected that there is something universal about fragmentation: if you count how many fragments fall into each size range and graph that distribution, it should have the same shape regardless of what object shattered.
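Counting fragments per size range is essentially a histogram, usually built with logarithmically spaced bins because fragment sizes span several orders of magnitude. A minimal Python sketch follows. The function name, the base-10 binning, and the sample sizes are hypothetical, and the code does not implement Villermaux's law itself, only the kind of size distribution the law is meant to describe.

```python
import math
from collections import Counter


def log_binned_counts(sizes, bins_per_decade=1):
    """Count fragments falling into logarithmically spaced size bins.

    Returns a sorted list of (lower_edge, count) pairs. Bin k covers sizes
    from 10**(k/b) up to 10**((k+1)/b), where b = bins_per_decade.
    """
    counts = Counter()
    for size in sizes:
        if size <= 0:
            raise ValueError("fragment sizes must be positive")
        # Index of the logarithmic bin that contains this size.
        k = math.floor(math.log10(size) * bins_per_decade)
        counts[k] += 1
    return [(10 ** (k / bins_per_decade), n) for k, n in sorted(counts.items())]


# Hypothetical fragment sizes (e.g. in millimetres), for illustration only.
fragments = [0.5, 2, 3, 15, 40, 250]
for edge, n in log_binned_counts(fragments):
    print(f">= {edge:g}: {n}")
```

Because logarithmic bins widen with size, comparing the shape of distributions from different objects usually means dividing each count by its bin width and by the total number of fragments, turning the histogram into a probability density.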

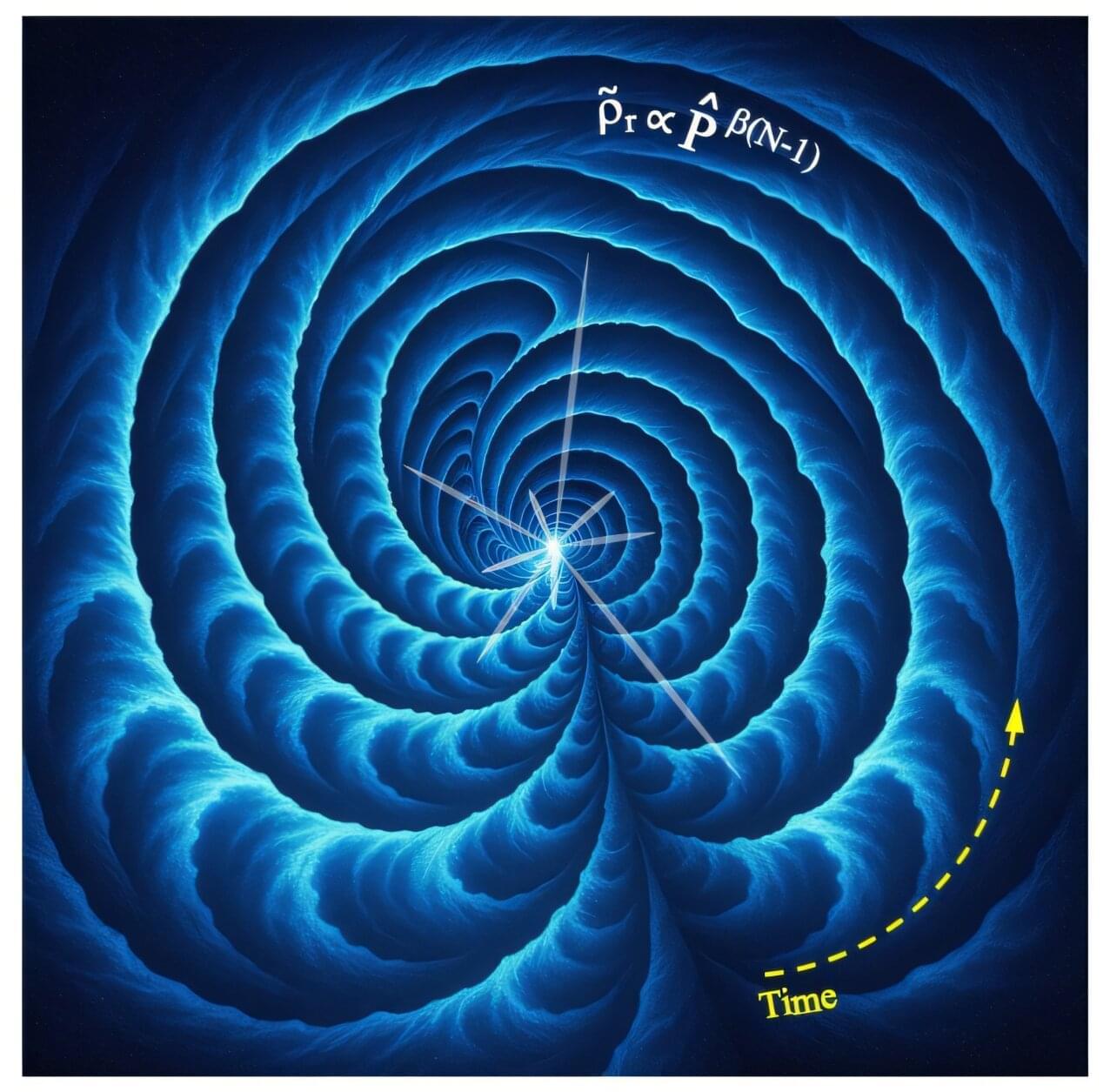

The hidden rule behind ignition: An analytic law governing multi-shock implosions for ultrahigh compression

Physicists at the University of Osaka have unveiled a breakthrough theoretical framework that uncovers the hidden physical rule behind one of the most powerful compression methods in laser fusion science—the stacked-shock implosion.

While multi-shock ignition has recently proven its effectiveness in major laser facilities worldwide, this new study identifies the underlying law that governs such implosions, expressed in an elegant and compact analytic form.

A team led by Professor Masakatsu Murakami has developed a framework called Stacked Converging Shocks (SCS), which extends the classical Guderley solution—a 1942 cornerstone of implosion theory—into the modern high-energy-density regime.
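For context, the classical Guderley solution describes a single spherical shock converging self-similarly on its center. The sketch below covers only that classical form, not the SCS extension, which the summary does not describe. The shock radius R_s follows a power law in the time remaining before collapse at t = 0. The exponent α depends on the gas's adiabatic index γ (α ≈ 0.717 for a spherical shock with γ = 7/5), and A is a constant set by initial conditions. Differentiating shows why the shock accelerates as it converges:

$$
R_s(t) = A\,(-t)^{\alpha}, \qquad t < 0, \quad 0 < \alpha < 1
$$

$$
\dot R_s = -\alpha A\,(-t)^{\alpha - 1} = -\alpha A^{1/\alpha}\, R_s^{\,1 - 1/\alpha}
$$

Since 1 − 1/α < 0, the shock speed |Ṙ_s| grows without bound as R_s → 0 in this idealized solution. This focusing effect is what makes converging shocks useful for compression.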

Scientists Call Age-25 Cannabis Rules a Myth

“This Perspective concludes that an MLA between 18–21 years is a scientifically supportable and socially coherent threshold for non-medical cannabis use.”

What should be the minimum legal age for recreational cannabis? This is what a recent study published in The American Journal on Drug and Alcohol Abuse hopes to address, as a team of scientists investigated the benefits and challenges of raising the minimum legal age (MLA) for recreational marijuana to 25; current thresholds range from 18 to 21, depending on the country. This study has the potential to help researchers, legislators, and the public better understand the neuroscience behind the appropriate age for cannabis use.

For the study, the researchers examined brain development in individuals aged 18 to 25, specifically whether brain maturation is complete before age 25. They note that this depends on a myriad of factors, including sex, geographic region, and physiology. This study comes as Germany has recently produced several studies on its nationwide legalization of recreational marijuana and on use rates after legalization. In the end, the researchers for this most recent study concluded that raising the minimum legal age for recreational cannabis use to 25 is unnecessary.

The study notes, “This Perspective concludes that an MLA between 18–21 years is a scientifically supportable and socially coherent threshold for non-medical cannabis use. Policy decisions should be informed not only by neurobiological evidence but also by legal, justice, sociocultural, psychological, and historical considerations.”

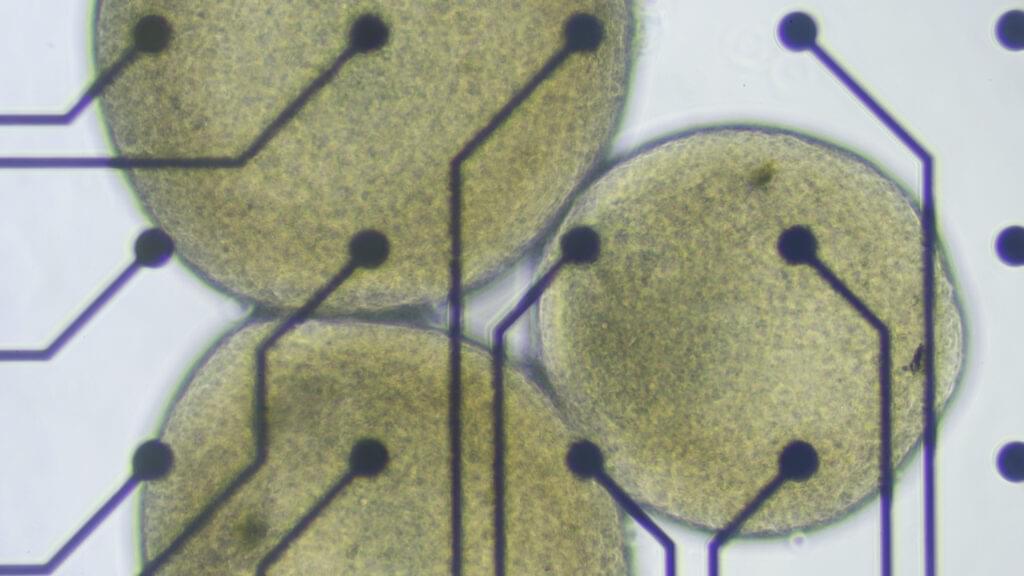

Brain organoid pioneers fear inflated claims about biocomputing could backfire

For the brain organoids in Lena Smirnova’s lab at Johns Hopkins University, there comes a time in their short lives when they must graduate from the cozy bath of the bioreactor, leave the warm, salty broth behind, and be plopped onto a silicon chip laced with microelectrodes. From there, these tiny white spheres of human tissue can simultaneously send and receive electrical signals that, once decoded by a computer, will show how the cells inside them are communicating with each other as they respond to their new environments.

More and more, it looks like these miniature lab-grown brain models are able to do things that resemble the biological building blocks of learning and memory. That’s what Smirnova and her colleagues reported earlier this year. It was a step toward establishing something she and her husband and collaborator, Thomas Hartung, are calling “organoid intelligence.”


One proposed goal is to leverage those functions to build biocomputers: organoid-machine hybrids that do the work of the systems powering today's AI boom, but without all the environmental carnage. The idea is to harness some fraction of the human brain's stunning information-processing superefficiencies in place of building more water-sucking, electricity-hogging, supercomputing data centers.

Despite widespread skepticism, it’s an idea that’s started to gain some traction. Both the National Science Foundation and DARPA have invested millions of dollars in organoid-based biocomputing in recent years. And there are a handful of companies claiming to have built cell-based systems already capable of some form of intelligence. But to the scientists who first forged the field of brain organoids to study psychiatric and neurodevelopmental disorders and find new ways to treat them, this has all come as a rather unwelcome development.

At a meeting last week at the Asilomar conference center in California, researchers, ethicists, and legal experts gathered to discuss the ethical and social issues surrounding human neural organoids, which fall outside of existing regulatory structures for research on humans or animals. Much of the conversation circled around how and where the field might set limits for itself, which often came back to the question of how to tell when lab-cultured cellular constructs have started to develop sentience, consciousness, or other higher-order properties widely regarded as carrying moral weight.