For example, The New York Times states: “The AI industry this year is set to be defined by one main characteristic: A remarkably rapid improvement of the technology as advancements build upon one another, enabling AI to generate new kinds of media, mimic human reasoning in new ways and seep into the physical world through a new breed of robot.”

Ethan Mollick, writing in his One Useful Thing blog, takes a similar view: “Most likely, AI development is actually going to accelerate for a while yet before it eventually slows down due to technical or economic or legal limits.”

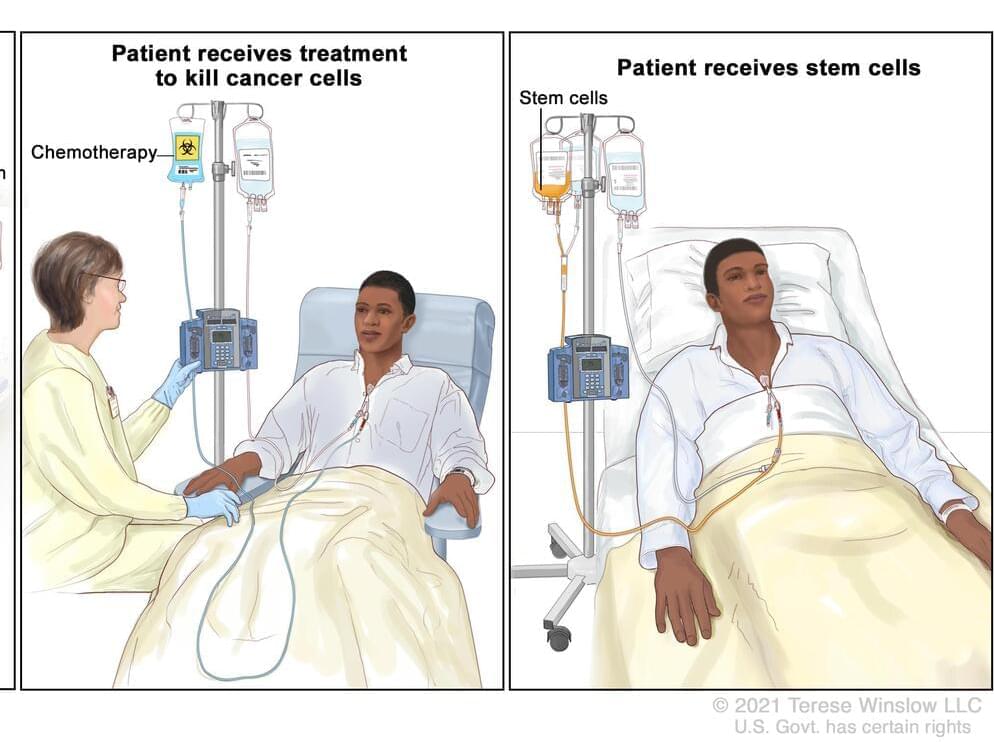

The year ahead in AI will undoubtedly bring dramatic changes. Hopefully, these will include advances that improve our quality of life, such as the discovery of life-saving new drugs. Most likely, the most optimistic promises will not be realized in 2024, leading to some pullback in market expectations. This is the nature of hype cycles. Hopefully, any such disappointments will not bring about another AI winter.
