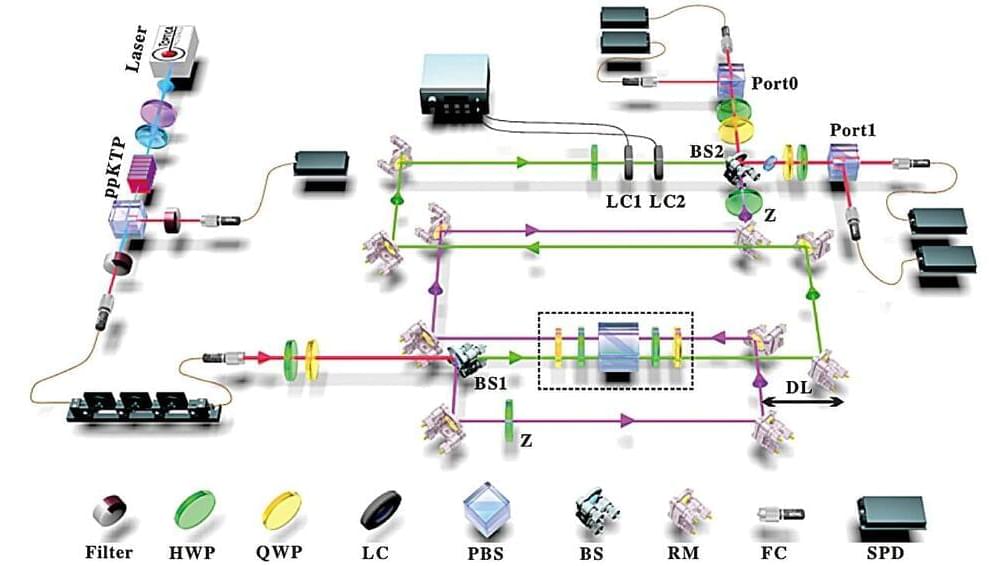

A research team has constructed a coherent superposition of quantum evolutions running in two opposite directions in a photonic system and confirmed its advantage in characterizing input-output indefiniteness. The study was published in Physical Review Letters.

The notion that time flows inexorably from the past to the future is deeply rooted in people's minds. However, the laws of physics that govern the motion of objects in the microscopic world do not single out a direction of time.

More specifically, the basic equations of motion of both classical and quantum mechanics are reversible: reversing the direction of the time coordinate of a dynamical process (possibly together with the direction of some other parameters) still yields a valid evolution process.
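
As a textbook illustration of this reversibility (not taken from the study itself), consider a single particle of mass $m$ moving in a real, time-independent potential $V(x)$. The particle, the potential, and the symbols $\tilde{x}$ and $\tilde{\psi}$ are assumptions introduced here for the sketch. In the classical case, if $x(t)$ solves Newton's equation, so does the time-reversed trajectory, because the second time derivative is unchanged under $t \to -t$ while the velocity changes sign:

$$
m\,\frac{d^{2}x}{dt^{2}} = -\frac{dV}{dx}
\quad\Longrightarrow\quad
\tilde{x}(t) = x(-t) \ \text{satisfies}\ \ m\,\frac{d^{2}\tilde{x}}{dt^{2}} = -\frac{dV}{d\tilde{x}}
$$

In the quantum case, reversing time must be accompanied by complex conjugation of the wave function:

$$
i\hbar\,\frac{\partial \psi(x,t)}{\partial t} = \left[-\frac{\hbar^{2}}{2m}\frac{\partial^{2}}{\partial x^{2}} + V(x)\right]\psi(x,t)
\quad\Longrightarrow\quad
\tilde{\psi}(x,t) = \psi^{*}(x,-t) \ \text{satisfies the same equation.}
$$

The sign flip of the velocity and the complex conjugation are simple examples of the "other parameters" that may need to be reversed together with time.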