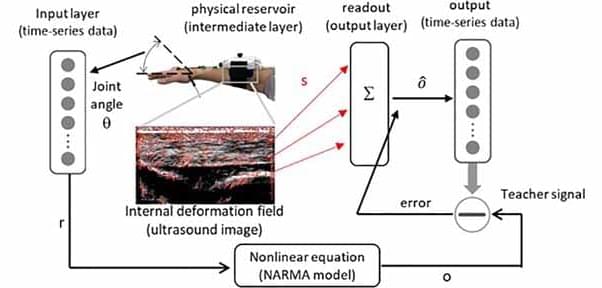

Physical reservoir computing uses nonlinear physical systems as computational resources for complex information processing. The approach exploits intrinsic properties of a physical system, such as nonlinearity and memory, to perform computational tasks. Soft biological tissues exhibit stress-strain nonlinearity and viscoelasticity, which satisfy the requirements of physical reservoir computing. This study evaluates the potential of human soft biological tissues as physical reservoirs for information processing. In particular, it examines the feasibility of using the inherent dynamics of human soft tissue as a physical reservoir to emulate nonlinear dynamical systems. In this concept, the deformation field within the muscle, obtained from ultrasound images, represents the state of the reservoir. The findings indicate that the dynamics of human soft tissue improve performance on the task of emulating nonlinear dynamical systems. Specifically, our system outperformed a simple linear regression (LR) model on this task. Simple LR models based on raw inputs, which do not account for the dynamics of soft tissue, fail to emulate the target dynamical system (relative error on the order of $10^{-2}$). By contrast, the emulation results obtained using our system closely approximate the target dynamics (relative error on the order of $10^{-3}$). These results suggest that the soft tissue dynamics contribute to the successful emulation of the nonlinear equation and that human soft tissues are a potential computational resource.
Soft tissues are found throughout the human body. Therefore, if computational processing is delegated to biological tissues, it could lead to a distributed computation system for human-assisted devices.
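
The comparison described above, a linear readout trained on reservoir states versus LR on raw inputs, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the reservoir is a simulated leaky tanh network standing in for the ultrasound-derived deformation field, the target is a hypothetical second-order nonlinear system, the error metric is a normalized mean squared error, and all function names and parameter values are invented here. It does not reproduce the experimental setup or the reported error levels.

```python
import numpy as np


def nonlinear_target(u):
    """Hypothetical second-order nonlinear dynamical system driven by input u."""
    y = np.zeros_like(u)
    for t in range(2, len(u)):
        y[t] = 0.4 * y[t - 1] + 0.4 * y[t - 1] * y[t - 2] + 0.6 * u[t] ** 3 + 0.1
    return y


def simulate_stand_in_reservoir(u, n_nodes=50, leak=0.5, seed=0):
    """Simulated nonlinear system with memory (assumption: stands in for tissue states)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-1.0, 1.0, n_nodes)
    w = rng.normal(0.0, 1.0, (n_nodes, n_nodes))
    w *= 0.9 / np.max(np.abs(np.linalg.eigvals(w)))  # scale spectral radius to 0.9
    x = np.zeros((len(u), n_nodes))
    for t in range(1, len(u)):
        pre = w @ x[t - 1] + w_in * u[t]
        x[t] = (1.0 - leak) * x[t - 1] + leak * np.tanh(pre)
    return x


def add_bias(features):
    """Append a constant column so the readout can learn an offset."""
    return np.hstack([features, np.ones((len(features), 1))])


def fit_linear_readout(features, target, ridge=1e-6):
    """Ridge-regularized least squares; only these weights are trained."""
    X = add_bias(features)
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ target)


def nmse(pred, target):
    """Normalized mean squared error (assumed metric for this sketch)."""
    return np.mean((pred - target) ** 2) / np.var(target)


def run_comparison(n_steps=2000, washout=100, n_train=1000, seed=0):
    rng = np.random.default_rng(seed)
    u = rng.uniform(0.0, 0.5, n_steps)
    y = nonlinear_target(u)
    states = simulate_stand_in_reservoir(u, seed=seed + 1)
    raw = u.reshape(-1, 1)  # baseline sees only the current raw input

    train = slice(washout, washout + n_train)
    test = slice(washout + n_train, n_steps)

    w_res = fit_linear_readout(states[train], y[train])
    w_lr = fit_linear_readout(raw[train], y[train])

    err_res = nmse(add_bias(states[test]) @ w_res, y[test])
    err_lr = nmse(add_bias(raw[test]) @ w_lr, y[test])
    return err_res, err_lr


err_reservoir, err_baseline = run_comparison()
print(f"reservoir readout NMSE: {err_reservoir:.4g}")
print(f"raw-input LR NMSE:      {err_baseline:.4g}")
```

In this sketch both models use the same linear training step; the only difference is whether the regressors are the states of a system with nonlinearity and memory or the raw input alone, which is the distinction the study draws between the tissue-based reservoir and the LR baseline.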