Poet who discovered Shor’s algorithm answers questions about quantum computers and other mysteries.

In the age of big data, we are quickly producing far more digital information than we can possibly store.

Last year, $20 billion was spent on new data centers in the US alone, doubling the capital expenditure on data center infrastructure from 2016.

And even with skyrocketing investment in data storage, corporations and the public sector are falling behind.

Cops would love to have a system that uses DNA from a crime scene to generate a picture of a suspect’s face, but that tech is still restricted to science fiction.

That technology may never exist, but a team of Belgian and American engineers just developed something similar. Using what they know about how DNA shapes the human face, the researchers built an algorithm that scans through a database of images and selects the faces that could be linked to the DNA found at a crime scene, according to research published Wednesday in the journal Nature Communications — a powerful crime-fighting tool, but also a terrifying new way to subvert privacy.

A mathematical equation has proven that controlling one of the two major changes in a cell—decay or cancerous growth—enhances the other, causing inevitable death.

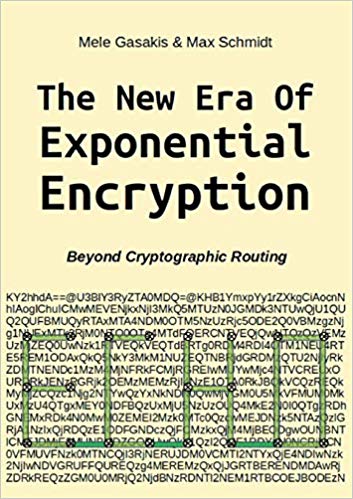

In their book “Era of Exponential Encryption - Beyond Cryptographic Routing”, the authors present a vision of steadily multiplying options for encryption and decryption processes. Like grains of rice doubling on every square of a chessboard, newer concepts and programming in the area of cryptography multiply these options: both encryption and decryption require more session-related and multiple keys, so that numerous options exist for configuring hybrid encryption, with different keys and algorithms, symmetric and asymmetric methods, or even modern multiple encryption, in which ciphertext is converted again and again into ciphertext. The book analyzes how a handful of newer applications, such as Spot-On and GoldBug E-Mail Client & Crypto Chat Messenger, and other open source software implement these encryption mechanisms. Renewing a key several times within a dedicated session with “cryptographic calling” has advanced the term “perfect forward secrecy” to “instant perfect forward secrecy” (IPFS). But there is more: if a bunch of keys is sent in advance, decoding a message has to consider not just one current session key but the dozens of keys sent before the message arrives. The paradigm of IPFS has thus already led to the newer concept of Fiasco Keys: keys that provide over a dozen possible ephemeral keys within one session and define Fiasco Forwarding, the approach that complements and follows IPFS. Further, by adding routing and graph theory to the encryption process, which is a constant part of the so-called Echo Protocol, an encrypted packet might take different graphs and routes within the network. This shifts the current status to a new age: the Era of Exponential Encryption, in the vision and description of the authors. If routing does not require destination information but is replaced by cryptographic in.

RSA encryption is an essential safeguard for our online communications. It was also destined to fail even before the Internet made RSA necessary, thanks to the work of Peter Shor, whose 1994 algorithm showed that a quantum computer could efficiently factor large numbers, a problem no known classical method solves efficiently and the one RSA relies on for its security.
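To make the link between factoring and RSA concrete, here is a minimal sketch using deliberately tiny primes. It does not implement Shor's algorithm: plain trial division stands in for the factoring step, which is only feasible because the modulus is so small. The function names and the example primes are illustrative assumptions, not taken from any real library.

```python
from math import gcd


def make_toy_keypair(p, q, e=17):
    """Build a toy RSA key pair from two small primes (illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to (p - 1) * (q - 1)")
    d = pow(e, -1, phi)  # modular inverse; needs Python 3.8+
    return (n, e), (n, d)


def encrypt(message, public_key):
    n, e = public_key
    return pow(message, e, n)


def decrypt(ciphertext, private_key):
    n, d = private_key
    return pow(ciphertext, d, n)


def factor_by_trial_division(n):
    """Stand-in for the factoring step; hopeless for real key sizes."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    raise ValueError("n has no nontrivial factors")


def recover_private_key(public_key):
    """Anyone who can factor n can rebuild the private exponent."""
    n, e = public_key
    p, q = factor_by_trial_division(n)
    phi = (p - 1) * (q - 1)
    return n, pow(e, -1, phi)


public_key, private_key = make_toy_keypair(61, 53)
ciphertext = encrypt(65, public_key)

# The attacker sees only the public key and the ciphertext.
stolen_key = recover_private_key(public_key)
print(decrypt(ciphertext, stolen_key))
```

With realistic key sizes the trial-division loop would never finish, which is the whole basis of RSA's security. A fast factoring method, which is what Shor's algorithm provides on a sufficiently large quantum computer, removes that barrier.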

In recent years, forensic scientists, statisticians, and engineers have been working to put crime scene forensics on a stronger footing, with some classic techniques falling out of favor.

[Photos: OpenClipart-Vectors/Pixabay; Hunter Harritt/Unsplash; blickpixel/Pixabay].