To address the growing threat of cyberattacks on industrial control systems, a KAUST team led by Ying Sun, and including Fouzi Harrou and Wu Wang, has developed an improved method for detecting malicious intrusions.

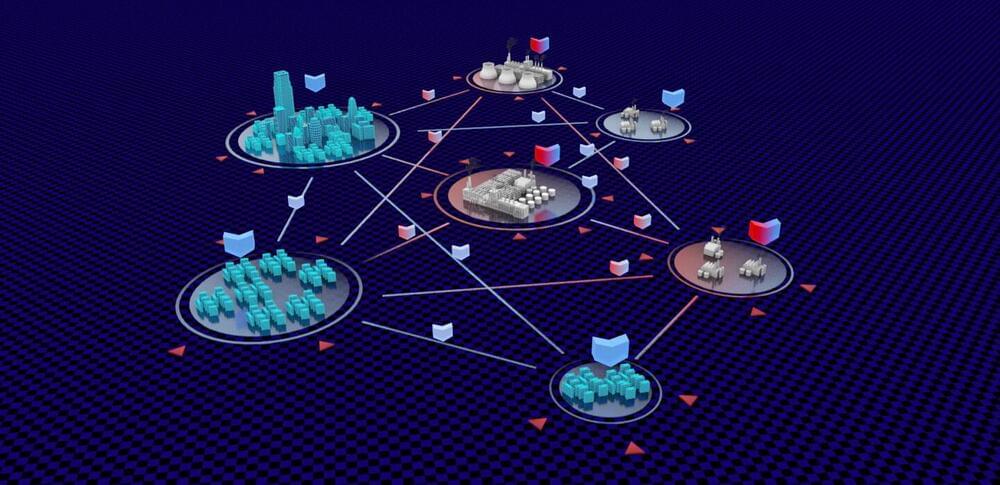

Internet-based industrial control systems are widely used to monitor and operate factories and critical infrastructure. In the past, these systems relied on expensive dedicated networks; moving them online has made them cheaper and easier to access. But it has also made them more vulnerable to attack, a danger that is growing alongside the increasing adoption of internet of things (IoT) technology.

Conventional security solutions such as firewalls and antivirus software are not appropriate for protecting industrial control systems, because these systems have distinct specifications. The sheer complexity of the systems also makes it hard for even the best algorithms to pick out abnormal occurrences that might signal an intrusion.