Topology in optics and photonics has been a hot topic since 1890, when singularities in electromagnetic fields were considered. The recent award of the Nobel Prize for topological developments in condensed matter physics has led to a renewed surge of interest in topology in optics, with the most recent developments implementing particle-like topological structures from condensed matter in photonics. Topological photonics, and topological electromagnetic pulses in particular, holds promise for nontrivial wave-matter interactions and provides additional degrees of freedom for information and energy transfer. However, to date the topology of ultrafast transient electromagnetic pulses has been largely unexplored.

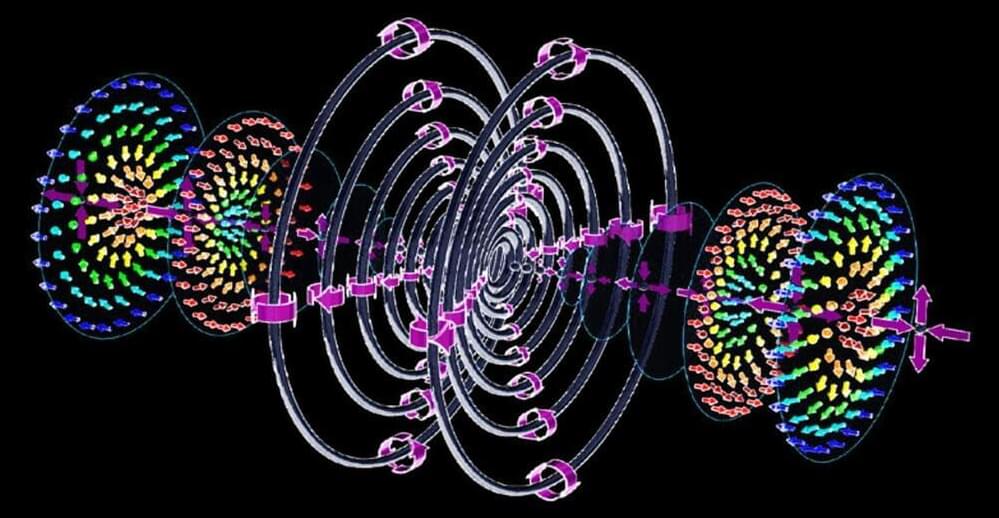

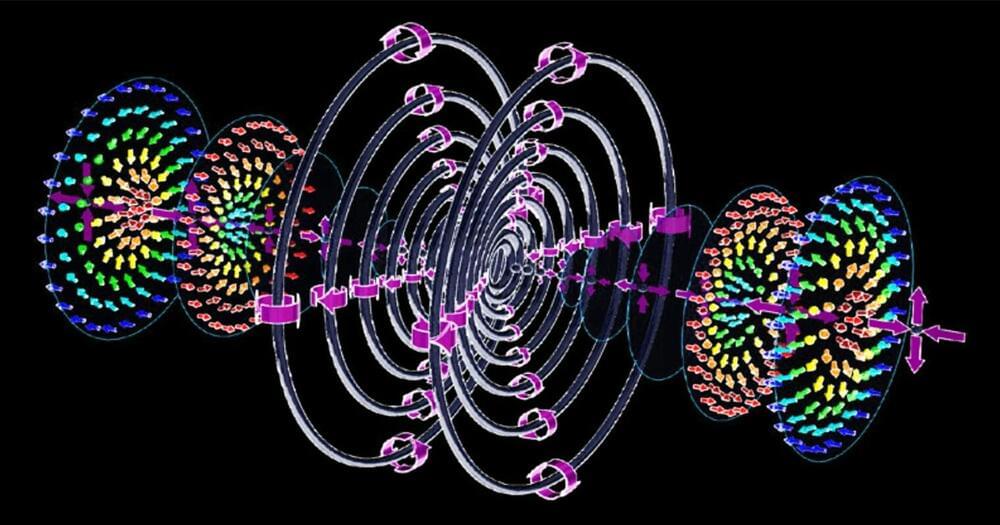

In their paper published in the journal Nature Communications, physicists in the UK and Singapore report a new family of electromagnetic pulses: exact solutions of Maxwell’s equations with toroidal topology, in which the topological complexity can be continuously controlled, a property termed supertoroidal topology. The electromagnetic fields in such supertoroidal pulses have skyrmionic structures as they propagate in free space at the speed of light.

Skyrmions, sophisticated topological particles originally proposed by Tony Skyrme in 1962 as a unified model of the nucleon, behave like nanoscale magnetic vortices with spectacular textures. They have been widely studied in many condensed matter systems, including chiral magnets and liquid crystals, as nontrivial excitations of great importance for information storage and transfer. If skyrmions could fly, they would open up infinite possibilities for the next informatics revolution.