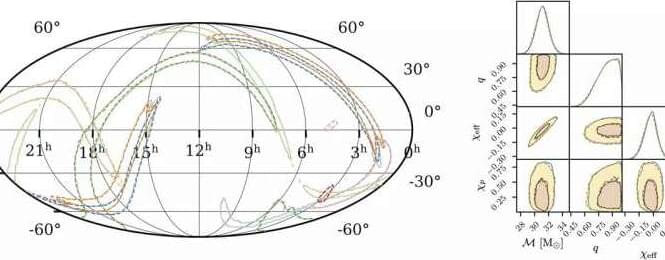

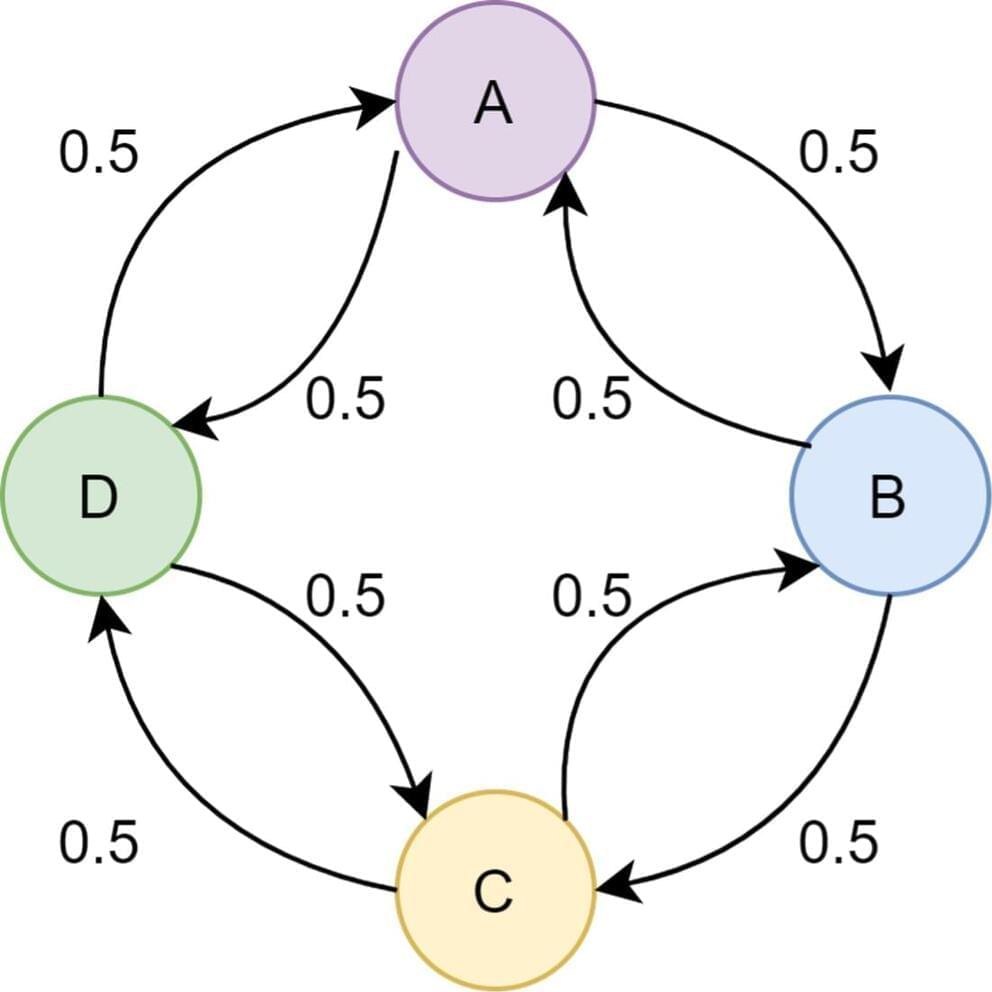

Black holes are one of the greatest mysteries of the universe. For example, a black hole with the mass of our Sun has a radius of only about 3 kilometers. Black holes in orbit around each other emit gravitational radiation, oscillations of space and time predicted by Albert Einstein in 1916. The radiation carries energy away from the pair, which causes the orbit to become faster and tighter until the black holes merge in a final burst of radiation. These gravitational waves propagate through the universe at the speed of light and are detected by observatories in the U.S. (LIGO) and Italy (Virgo). Scientists compare the data collected by the observatories against theoretical predictions to estimate the properties of the source, including how large the black holes are and how fast they are spinning. Currently, this procedure takes hours at a minimum and often months.
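The 3-kilometer figure quoted above can be checked with the Schwarzschild radius, r_s = 2GM/c², which gives the size of the event horizon of a non-rotating black hole of mass M. The following is a minimal sketch, not taken from the study: the function name is illustrative, the constants are rounded standard values, and spinning black holes (which have a smaller horizon) are ignored.

```python
# Rounded standard values in SI units (assumed here, not from the article).
G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0      # speed of light, m/s
M_SUN = 1.98847e30     # mass of the Sun, kg


def schwarzschild_radius_km(mass_in_solar_masses: float) -> float:
    """Return r_s = 2 G M / c^2 in kilometers (non-rotating black hole)."""
    mass_kg = mass_in_solar_masses * M_SUN
    radius_m = 2.0 * G * mass_kg / C**2
    return radius_m / 1000.0


if __name__ == "__main__":
    # One solar mass: just under 3 km, matching the figure in the text.
    print(f"{schwarzschild_radius_km(1.0):.2f} km")
```

Because the radius grows linearly with mass, a black hole of 30 solar masses, a typical scale for the mergers seen by LIGO and Virgo, would have a horizon radius of roughly 90 kilometers under the same assumptions.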

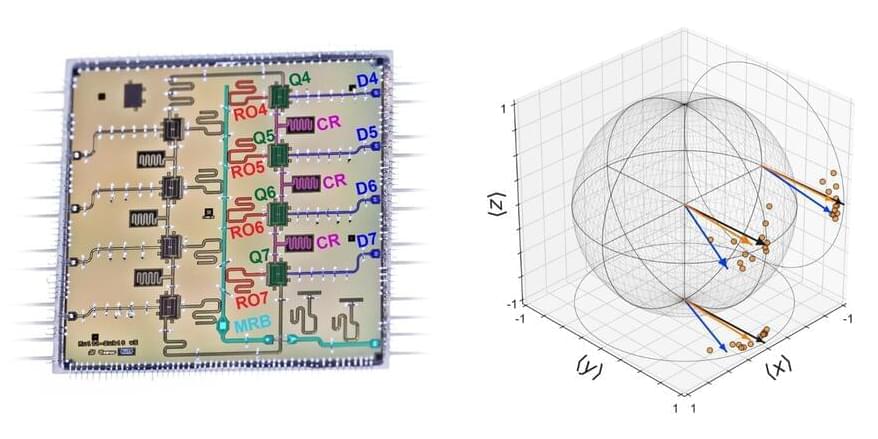

An interdisciplinary team of researchers from the Max Planck Institute for Intelligent Systems (MPI-IS) in Tübingen and the Max Planck Institute for Gravitational Physics (Albert Einstein Institute/AEI) in Potsdam is using state-of-the-art machine learning methods to speed up this process. They developed an algorithm using a deep neural network, a complex computer code built from a sequence of simpler operations, inspired by the human brain. Within seconds, the system infers all properties of the binary black-hole source. Their research results are published today in Physical Review Letters.

“Our method can make very accurate statements in a few seconds about how big and massive the two black holes were that generated the gravitational waves when they merged. How fast do the black holes rotate, how far away are they from Earth and from which direction is the gravitational wave coming? We can deduce all this from the observed data and even make statements about the accuracy of this calculation,” explains Maximilian Dax, first author of the study “Real-Time Gravitational Wave Science with Neural Posterior Estimation” and a Ph.D. student in the Empirical Inference Department at MPI-IS.