The Taurid asteroids, also known as the Taurid swarm, are a group of asteroids hypothesized to be leftover chunks of Comet Encke, which orbits the Sun every 3.3 years. But how much of an impact risk does the Taurid swarm pose to Earth? A recent study presented at the 56th annual meeting of the American Astronomical Society (AAS) Division for Planetary Sciences (DPS) sets out to answer this question. In the study, a team of researchers from the United States and Canada investigated the potential threat of Taurid swarm objects impacting the Earth. The work could help researchers better understand how to identify asteroid impact threats to Earth and what steps can be taken to mitigate them.

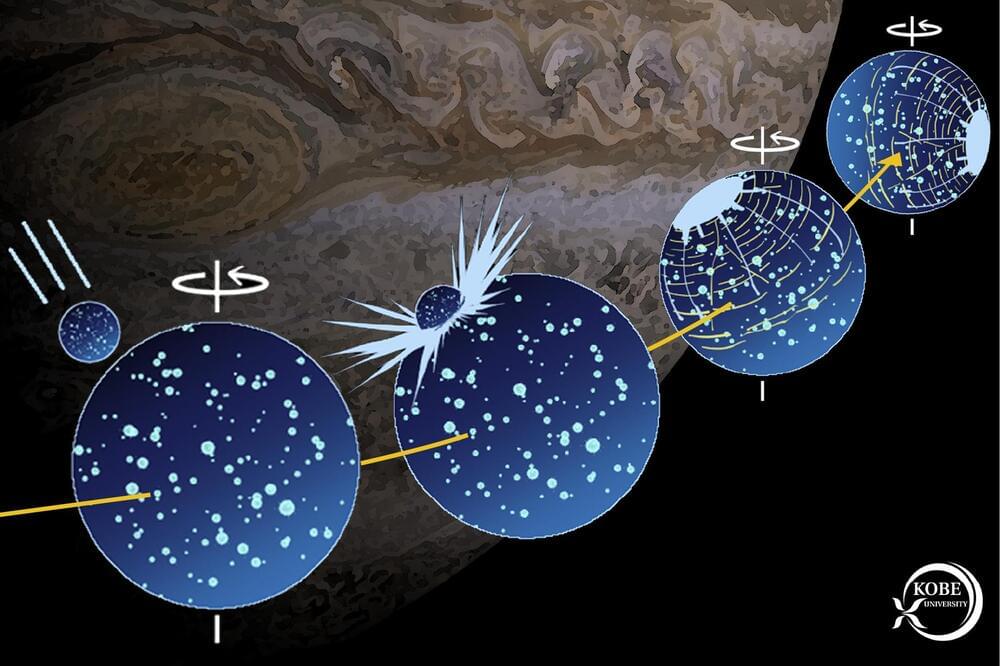

This study builds on previous research on the Taurid swarm, which estimated that the bodies in the swarm could be kilometer-sized. Although the swarm is responsible for the Taurid meteor shower, asteroids that large could cause significant damage if one struck Earth's surface. The new study is the first analysis of the risk that these asteroids pose of impacting Earth, and its results are promising.

“We took advantage of a rare opportunity when this swarm of asteroids passed closer to Earth, allowing us to more efficiently search for objects that could pose a threat to our planet,” said Dr. Quanzhi Ye, who is an assistant research scientist in the Department of Astronomy at the University of Maryland and lead author of the study. “Our findings suggest that the risk of being hit by a large asteroid in the Taurid swarm is much lower than we believed, which is great news for planetary defense.”