In the various incarnations of Douglas Adams’ Hitchhiker’s Guide To The Galaxy, a sentient robot named Marvin the Paranoid Android serves on the starship Heart of Gold. Because he is never assigned tasks that challenge his massive intellect, Marvin is horribly depressed, always quite bored, and a burden to the humans and aliens around him. But he does write nice lullabies.

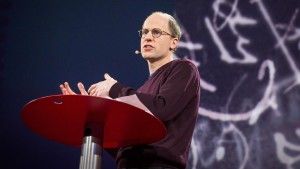

While Marvin is a fictional robot, Scholar and Author David Gunkel predicts that sentient robots will soon be a fact of life and that mankind needs to start thinking about how we’ll treat such machines, at present and in the future.

For Gunkel, the question is about moral standing and how we decide if something does or does not have moral standing. As an example, Gunkel notes our children have moral standing, while a rock or our smartphone may not have moral consideration. From there, he said, the question becomes, where and how do we draw the line to decide who is inside and who is outside the moral community?

“Traditionally, the qualities for moral standing are things like rationality, sentience (and) the ability to use languages. Every entity that has these properties generally falls into the community of moral subjects,” Gunkel said. “The problem, over time, is that these properties have changed. They have not been consistent.”

To illustrate, Gunkel cited Greco-Roman times, when land-owning males were allowed to exclude their wives and children from moral consideration and basically treat them as property. As we’ve grown more enlightened in recent times, Gunkel points to the animal rights movement which, he said, has lowered the bar for inclusion in moral standing, based on the questions of “Do they suffer?” and “Can they feel?” The properties that are qualifying properties are no longer as high in the hierarchy as they once were, he said.

While the properties approach has worked well for about 2,000 years, Gunkel noted that it has generated more questions that need to be answered. On the ontological level, those questions include, “How do we know which properties qualify and when do we know when we’ve lowered the bar too low or raised it too high? Which properties count the most?”, and, more importantly, “Who gets to decide?”

“Moral philosophy has been a struggle over (these) questions for 2,000 years and, up to this point, we don’t seem to have gotten it right,” Gunkel said. “We seem to have gotten it wrong more often than we have gotten it right… making exclusions that, later on, are seen as being somehow problematic and dangerous.”

Beyond the ontological issues, Gunkel also notes there are epistemological questions to be addressed as well. If we were to decide on a set of properties and be satisfied with those properties, because those properties generally are internal states, such as consciousness or sentience, they’re not something we can observe directly because they happen inside the cranium or inside the entity, he said. What we have to do is look at external evidence and ask, “How do I know that another entity is a thinking, feeling thing like I assume myself to be?”

To answer that question, Gunkel noted the best we can do is assume or base our judgments on behavior. The question then becomes, if you create a machine that is able to simulate pain, as we’ve been able to do, do you assume the robots can read pain? Citing Daniel Dennett’s essay Why You Can’t Make a Computer That Feels Pain, Gunkel said the reason we can’t build a computer that feels pain isn’t because we can’t engineer a mechanism, it’s because we don’t know what pain is.

“We don’t know how to make pain computable. It’s not because we can’t do it computationally, but because we don’t even know what we’re trying to compute,” he said. “We have assumptions and think we know what it is and experience it, but the actual thing we call ‘pain’ is a conjecture. It’s always a projection we make based on external behaviors. How do we get legitimate understanding of what pain is? We’re still reading signs.”

According to Gunkel, the approaching challenge in our everyday lives is, “How do we decide if they’re worthy of moral consideration?” The answer is crucial, because as we engineer and build these devices, we must still decide what we do with “it” as an entity. This concept was captured in a PBS Idea Channel video, an episode based on this idea and on Gunkel’s book, The Machine Question.

To address that issue, Gunkel said society should consider the ethical outcomes of the artificial intelligence we create at the design stage. Citing the potential of autonomous weapons, the question is not whether or not we should use the weapon, but whether we should even design these things at all.

“After these things are created, what do we do with them, how do we situate them in our world? How do we relate to them once they are in our homes and in our workplace? When the machine is there in your sphere of existence, what do we do in response to it?” Gunkel said. “We don’t have answers to that yet, but I think we need to start asking those questions in an effort to begin thinking about what is the social status and standing of these non-human entities that will be part of our world living with us in various ways.”

As he looks to the future, Gunkel predicts law and policy will have a major effect on how artificial intelligence is regarded in society. Citing decisions stating that corporations are “people,” he noted that the same types of precedents could carry over to designed systems that are autonomous.

“I think the legal aspect of this is really important, because I think we’re making decisions now, well in advance of these kind of machines being in our world, setting a precedent for the receptivity to the legal and moral standing of these other kind of entities,” Gunkel said.

“I don’t think this will just be the purview of a few philosophers who study robotics. Engineers are going to be talking about it. AI scientists have got to be talking about it. Computer scientists have got to be talking about it. It’s got to be a fully interdisciplinary conversation and it’s got to roll out on that kind of scale.”

By not having to answer to industry or academia, OpenAI hopes to focus not just on developing digital intelligence, but also guide research along an ethical route that, according to

By not having to answer to industry or academia, OpenAI hopes to focus not just on developing digital intelligence, but also guide research along an ethical route that, according to