In fact, advancing people with technologies such as brain-machine interfaces (BMI) is proving more effective, cheaper, and quicker than trying to build machines that behave like humans. BMI technology began development, for the most part, in the 1990s, and today there are demonstrated tests in which people have used BMI to fly drones and operate other machinery, as well as to help others feel sensation through a prosthetic arm or leg. So I am not surprised by Musk’s position.

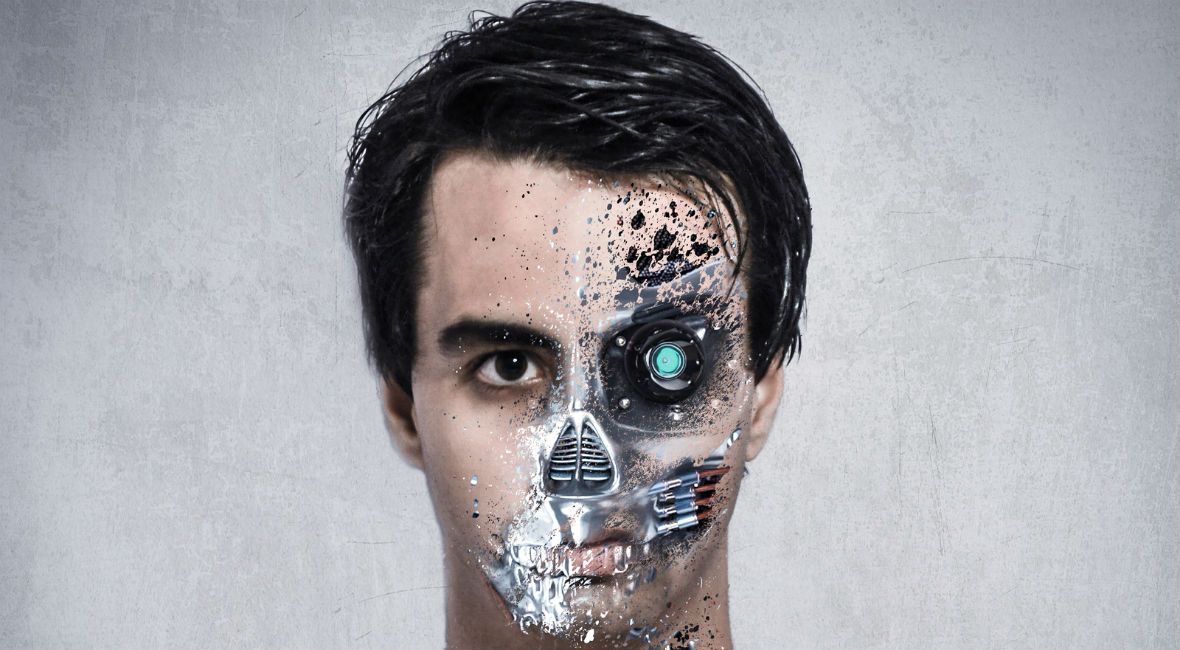

Would you ever chip yourself? The idea of human microchipping, once confined to the realms of science fiction and conspiracy theory, has fascinated people for ages, but it always seemed like something for the distant future. Yet patents for human ‘implants’ have been around for years, and the discussion around chipping the human race has been accelerating recently.

Remember when credit and debit cards went from smooth plastic to microchipped? That could be you in a few years, as multiple corporations are pushing to microchip the human race. In fact, microchip implants for humans are already on the market: an American company called Applied Digital Solutions (ADS) has developed one approximately the size of a grain of rice, which has already been approved by the U.S. Food and Drug Administration for distribution and implementation. Here is a video taken three years ago of Regina Dugan, former DARPA director and Google executive, promoting the idea of microchipping humans.