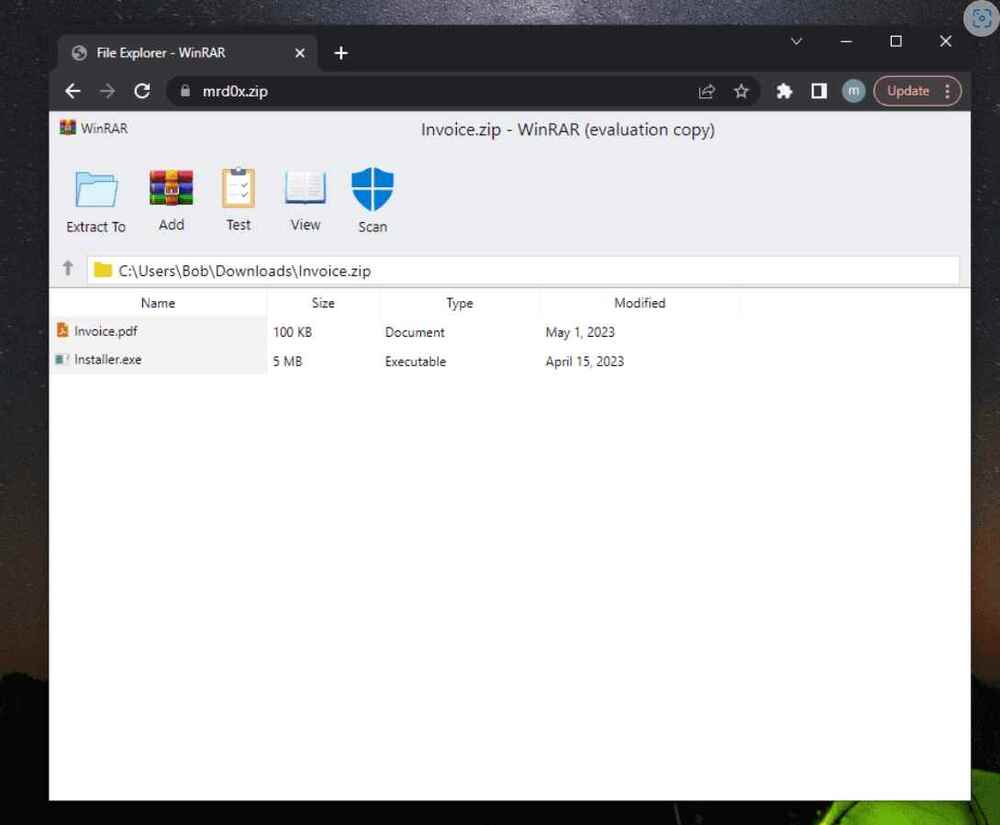

A recently developed phishing technique known as “file archiver in the browser” can be used to “emulate” file-archiving software in a victim’s web browser when they visit a website on a .ZIP domain.

According to information published last week by a security researcher known as mr.d0x, “with this phishing attack, you simulate a file archiver software (e.g., WinRAR) in the browser and use a .zip domain to make it appear more legitimate.”

In a nutshell, threat actors could build a realistic-looking phishing landing page in HTML and CSS that mimics legitimate file-archiving software. They could then host that page on a .zip domain, which makes the social engineering considerably more convincing.
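From a defender's point of view, the core of the trick is that a link such as `setup.zip` reads like a file name but can resolve as a hostname. The following is a minimal Python sketch, not a complete detection tool, of how a mail filter or link scanner might flag such links. The function name `host_uses_zip_tld` is hypothetical and chosen only for illustration, and the check assumes that looking at the hostname alone is enough for a first pass.

```python
from urllib.parse import urlsplit


def host_uses_zip_tld(url: str) -> bool:
    """Return True when the URL's hostname ends in the .zip top-level domain.

    Hypothetical helper for illustration; it only inspects the hostname and
    does not judge whether the site behind it is malicious.
    """
    # urlsplit only populates the hostname when the string has a scheme
    # ("https://...") or starts with "//", so bare strings such as
    # "setup.zip" are prefixed and treated as a hostname guess.
    has_authority = "://" in url or url.startswith("//")
    candidate = url if has_authority else "//" + url
    host = urlsplit(candidate).hostname or ""
    # Drop a trailing root dot ("example.zip.") before comparing.
    return host.rstrip(".").lower().endswith(".zip")


if __name__ == "__main__":
    for link in ("https://setup.zip/", "https://example.com/setup.zip"):
        print(link, host_uses_zip_tld(link))
```

Note that a URL whose path merely ends in `.zip`, such as a genuine archive download from `example.com`, is not flagged, because only the hostname is examined.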