An experiment with superconducting qubits opens the door to determining whether quantum devices could be less energetically costly if they are powered by quantum batteries.

Hello and welcome! My name is Anton and in this video, we will talk about a few studies that explain how the human brain developed complexity.

Links:

https://linkinghub.elsevier.com/retrieve/pii/S0092867423009170

https://www.science.org/doi/10.1126/science.ade5645

https://www.biorxiv.org/content/10.1101/2024.05.01.592020v5.full.pdf

https://www.science.org/doi/10.1126/science.abm1696

https://www.nature.com/articles/s41559-022-01925-6

https://www.microbiologyresearch.org/content/journal/mgen/10…01322#tab2

Other videos:

https://www.youtube.com/watch?v=qyMbXCzcS0k

https://www.youtube.com/watch?v=e10yOoP-x3g

#brain #biology #evolution

0:00 Discoveries about the evolution of the brain.

1:20 800 million years ago… how it all began.

3:10 Did the nervous system evolve multiple times? Comb jellies.

4:45 Big brains — primates vs octopuses.

9:20 Human brains and human intelligence genes.

11:20 Gut microbes and fuel for the brain.

12:20 Conclusions and implications.

Stephen Wolfram shares surprising new ideas and results from a scientific approach to metaphysics. He discusses time, spacetime, computational irreducibility, the significance of the observer, quantum mechanics and multiway systems, the ruliad, laws of nature, objective reality, existence, and mathematical reality.

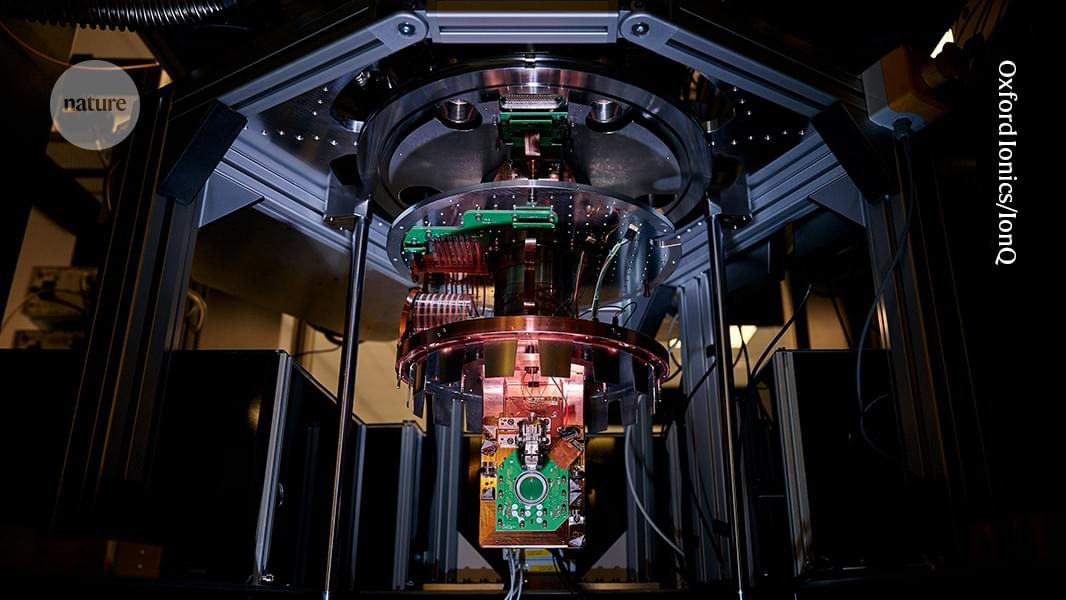

Mikhail Lukin’s team at Harvard presented a “universal” design for neutral-atom processors with robust error-correction capabilities using just 448 qubits, alongside a 3,000-qubit processor that can run for hours.

As Lukin notes: “These are really new kinds of instruments—by some measures, they’re not even computers… What’s really exciting is that these systems are now working already at a reasonable scale and we can start experimenting with them to figure out what we can do with them.”

A string of surprising advances suggests usable quantum computers could be here in a decade.

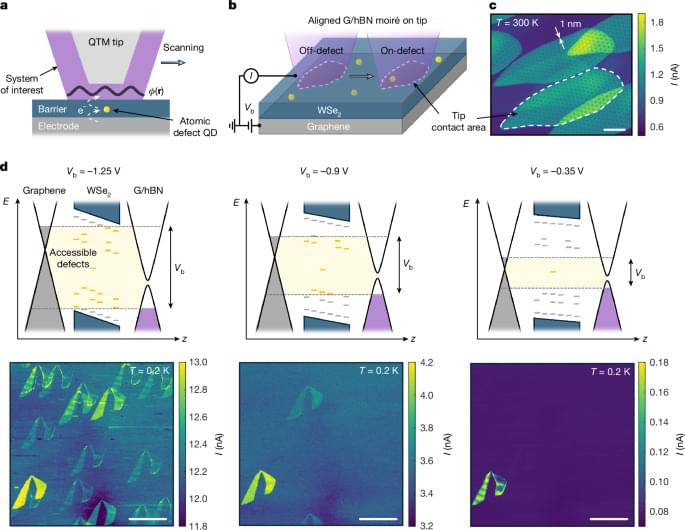

Quantum computers hold great promise for exciting applications in the future, but for now they keep presenting physicists and engineers with a series of challenges and conundrums. One of them relates to decoherence and the errors that result from it: bit flips and phase flips. Such errors mean that the logical unit of a quantum computer, the qubit, can suddenly and unpredictably change its state from “0” to “1,” or that the relative phase of a superposition state can jump from positive to negative.
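The two error types described above can be illustrated on a single qubit written as a pair of amplitudes. This is a minimal sketch, not taken from any of the cited work: the function names `bit_flip` and `phase_flip` are hypothetical, and amplitudes are kept real for simplicity.

```python
from math import sqrt

# A single-qubit state is modelled as (amplitude of |0>, amplitude of |1>).
# Real amplitudes only; this is an illustrative assumption, not a full simulator.

def bit_flip(state):
    """Pauli-X error: swaps the |0> and |1> amplitudes."""
    a, b = state
    return (b, a)

def phase_flip(state):
    """Pauli-Z error: negates the |1> amplitude, flipping the relative phase."""
    a, b = state
    return (a, -b)

zero = (1.0, 0.0)                    # the state "0"
plus = (1 / sqrt(2), 1 / sqrt(2))    # equal superposition with positive relative phase

flipped = bit_flip(zero)   # (0.0, 1.0): "0" has become "1"
minus = phase_flip(plus)   # same magnitudes, relative phase now negative
```

A bit flip changes what a measurement would report, whereas a phase flip leaves the measurement probabilities of "0" and "1" unchanged and only alters the sign between the two components.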

These errors can be held at bay by building a logical qubit out of many physical qubits and constantly applying error correction protocols. This approach takes care of storing the quantum information relatively safely over time. However, at some point it becomes necessary to exit storage mode and do something useful with the qubit—like applying a quantum gate, which is the building block of quantum algorithms.
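The idea of protecting one logical unit by spreading it over several physical ones can be sketched with a classical three-bit repetition code. This is a toy analogue under stated assumptions: it handles bit flips only, ignores phase flips, and is not the code used in the experiments described here. All function names are hypothetical.

```python
import random

def encode(bit):
    """Store one logical bit redundantly in three physical bits."""
    return [bit, bit, bit]

def apply_noise(codeword, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in codeword]

def decode(codeword):
    """Majority vote: corrects any single bit flip."""
    return 1 if sum(codeword) >= 2 else 0

def logical_error_rate(p, trials, seed=0):
    """Estimate how often the decoded bit is wrong after noise."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        if decode(apply_noise(encode(0), p, rng)) != 0:
            errors += 1
    return errors / trials

if __name__ == "__main__":
    p = 0.1
    print("physical error rate:", p)
    print("estimated logical error rate:", logical_error_rate(p, 20000))
```

The logical bit fails only when two or more physical bits flip, which happens with probability 3p² − 2p³. For small p this is well below p, which is why redundancy plus repeated correction helps.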

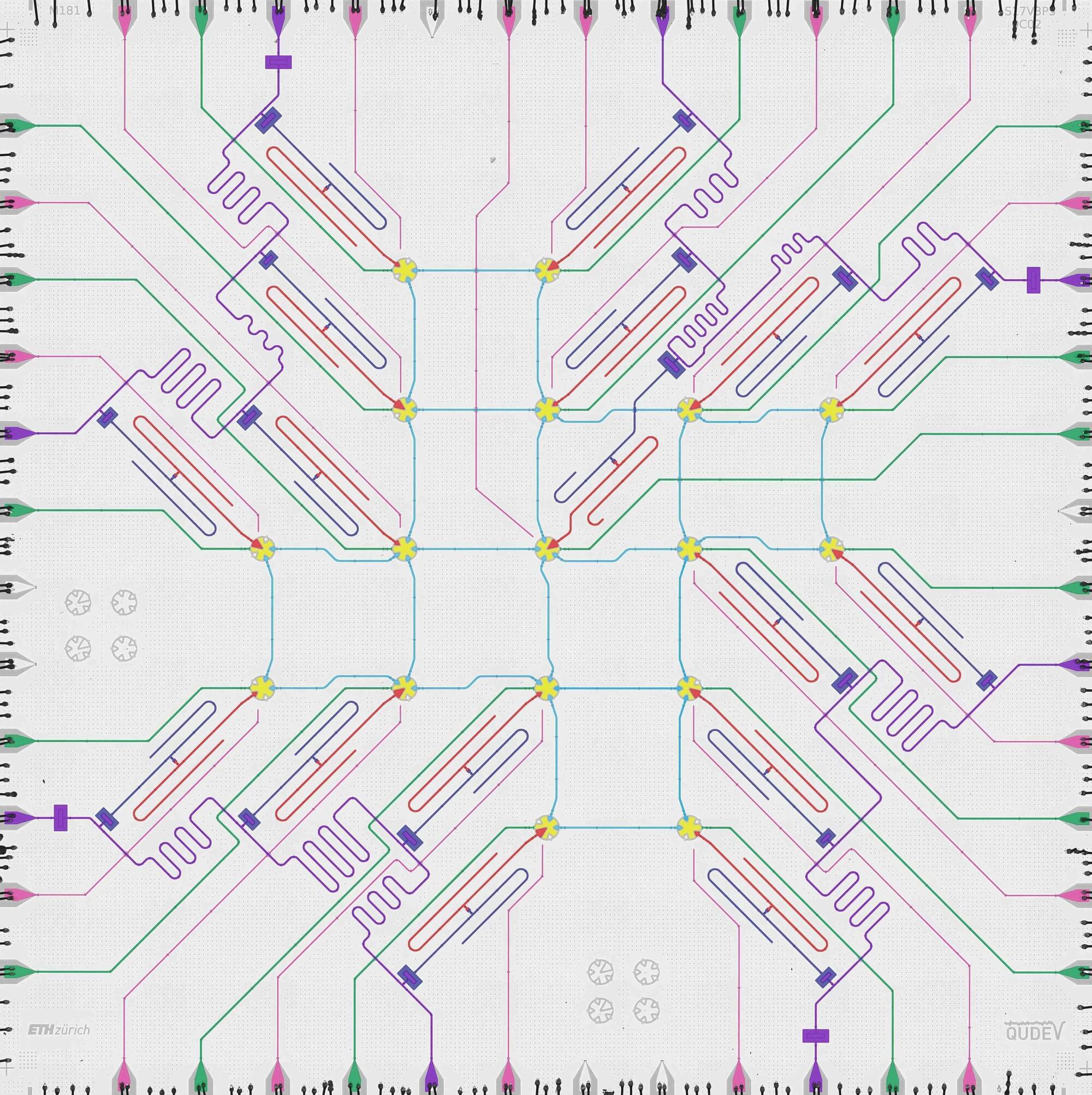

The research group led by Professor Andreas Wallraff of ETH Zurich's Department of Physics (D-PHYS), in collaboration with the Paul Scherrer Institute (PSI) and the theory team of Professor Markus Müller at RWTH Aachen University and Forschungszentrum Jülich, has now demonstrated a technique that makes it possible to perform a quantum operation between superconducting logical qubits while correcting for potential errors occurring during the operation. The researchers have just published their results in Nature Physics.

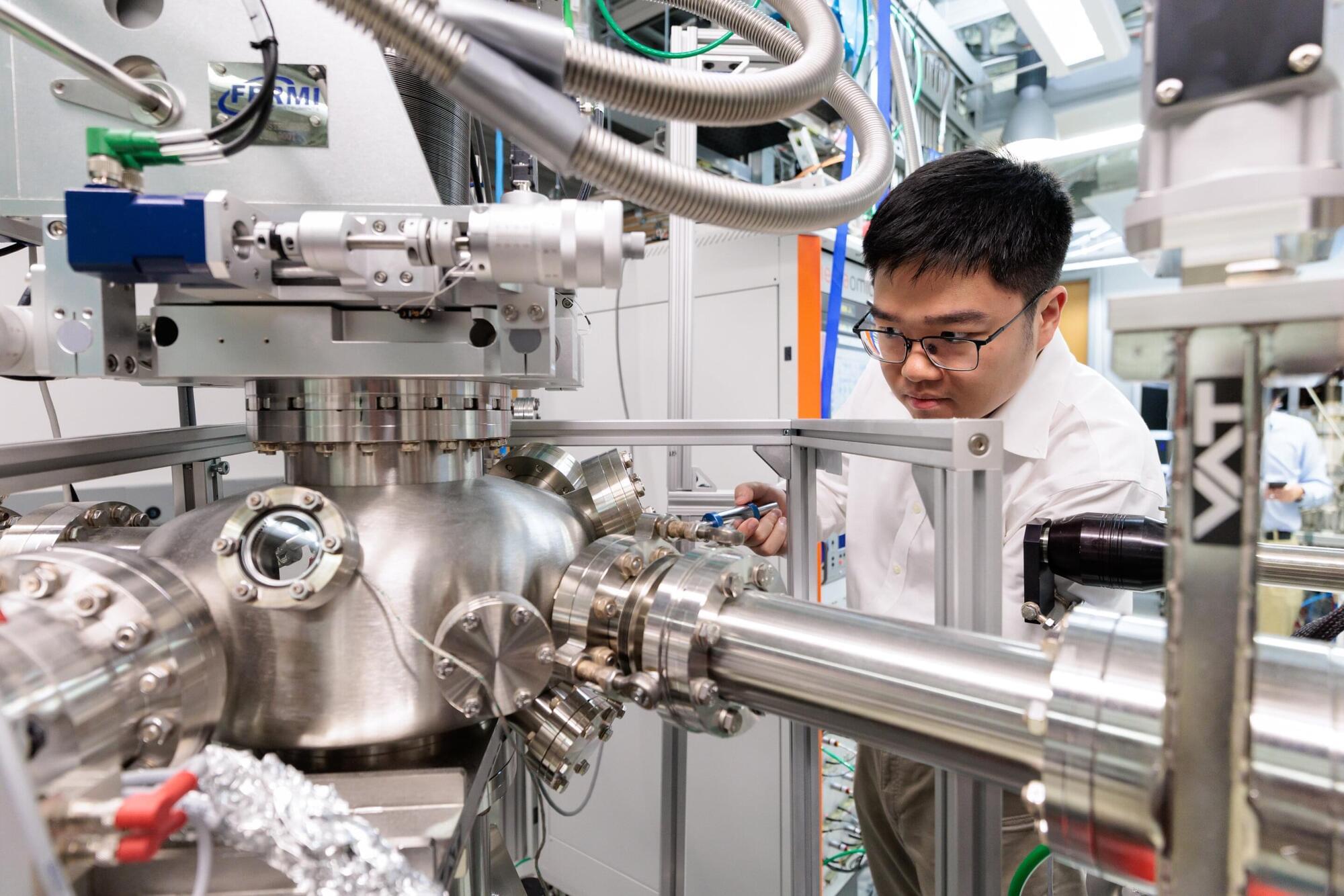

Today’s most powerful computers hit a wall when tackling certain problems, from designing new drugs to cracking encryption codes. Error-free quantum computers promise to overcome those challenges, but building them requires materials with the exotic properties of topological superconductors, which are incredibly difficult to produce. Now, researchers at the University of Chicago Pritzker School of Molecular Engineering (UChicago PME) and West Virginia University have found a way to tune these materials into existence by simply tweaking a chemical recipe, which changes the interactions among the many electrons in the material.

The team adjusted the ratio of two elements, tellurium and selenium, in ultra-thin films grown from them. By doing so, they found they could switch the material between different quantum phases, including a highly desirable state called a topological superconductor.

The findings, published in Nature Communications, reveal that as the ratio of tellurium and selenium changes, so too do the correlations between different electrons in the material—how strongly each electron is influenced by those around it. This can serve as a sensitive control knob for engineering exotic quantum phases.