Scientists have successfully teleported a three-dimensional quantum state. The work, a collaboration between Chinese and Austrian scientists, could be crucial for the future of quantum computers.

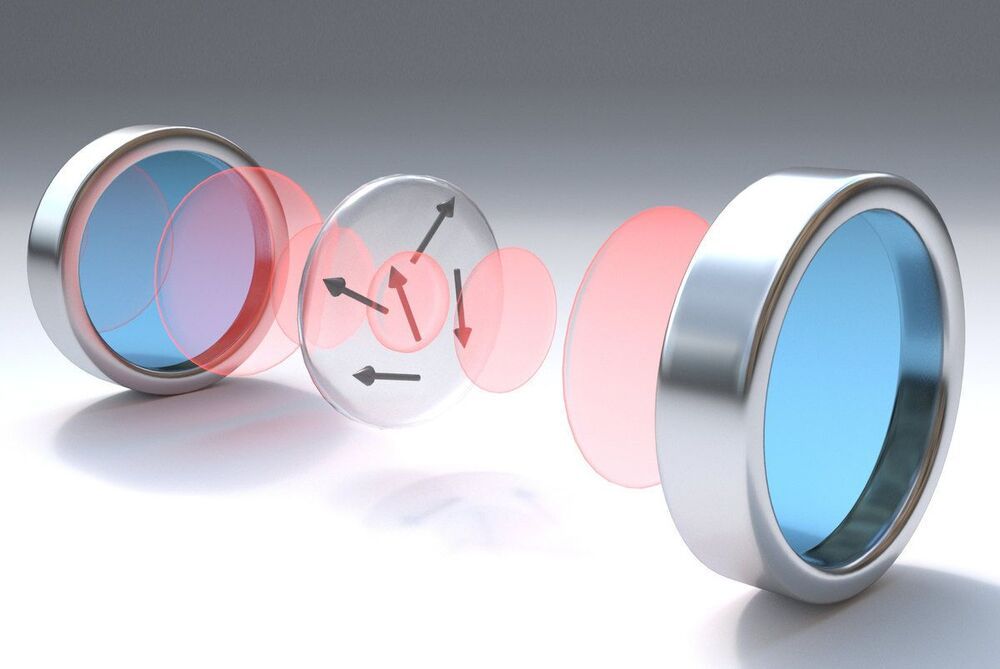

The researchers, from the Austrian Academy of Sciences, the University of Vienna, and the University of Science and Technology of China, were able to teleport the quantum state of one photon to another, distant photon. Teleporting a three-dimensional state is a huge leap forward: previously, only two-dimensional quantum teleportation, that of qubits, had been possible. By adding a third dimension, the scientists were able to teleport a more advanced unit of quantum information known as a “qutrit,” which has three basic states rather than a qubit’s two.

Quantum computing is different from what’s known as classical computing, which powers phones and laptops. These traditional devices store information in bits, each of which is either a 0 or a 1. A good metaphor is to imagine a circle, with 0 and 1 at opposite points. In quantum computing, which deals with atomic and subatomic particles, a qubit can sit at either of those points or anywhere else on the circle.
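
For readers who like to see the idea in code, the circle metaphor can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not how the experiment or any real quantum computer works: it uses real-valued amplitudes only (ignoring quantum phase), and the function names `qubit_on_circle` and `measurement_probabilities` are made up for this example. An angle of 0 stands for the point "0", an angle of π stands for the opposite point "1", and any angle in between gives a mix of the two.

```python
import math

def qubit_on_circle(theta):
    """Hypothetical helper: amplitudes (for 0, for 1) at angle theta on the circle.

    theta = 0 is the point "0"; theta = pi is the opposite point "1".
    Assumes real amplitudes only, a simplification of a true qubit.
    """
    return (math.cos(theta / 2), math.sin(theta / 2))

def measurement_probabilities(amplitudes):
    """Chance of reading each value: the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in amplitudes]

# A classical bit can only be one of the two opposite points.
zero = qubit_on_circle(0.0)
one = qubit_on_circle(math.pi)

# A qubit can also sit a quarter of the way around: roughly a 50/50 mix.
halfway = qubit_on_circle(math.pi / 2)

# A qutrit has three amplitudes instead of two; here, an equal mix of 0, 1 and 2.
qutrit = (1 / math.sqrt(3),) * 3

for name, state in [("zero", zero), ("one", one),
                    ("halfway", halfway), ("qutrit", qutrit)]:
    print(name, [round(p, 3) for p in measurement_probabilities(state)])
```

Whatever the angle, the probabilities always add up to 1, which is why a point on a circle is a convenient picture. A qutrit no longer fits on a circle, since it needs one more amplitude.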