Researchers have developed an optical computing system that marks a significant advance in optical logic.

Traditionally, optical logic computing, which uses light to perform logical operations, has struggled to handle more than four inputs due to limitations in…

Researchers have long sought to harness the power of light for computing, aiming to achieve higher speeds and lower energy consumption compared to traditional electronic systems. Optical computing, which uses light instead of electricity to perform calculations, promises significant advantages, including high parallelism and efficiency. However, implementing complex logic operations optically has been a challenge, limiting the practical applications of optical computing.

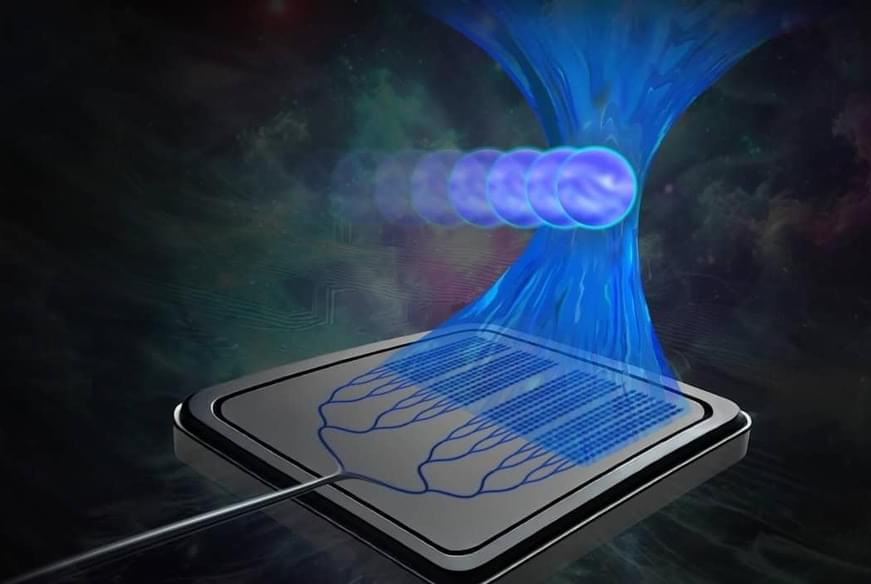

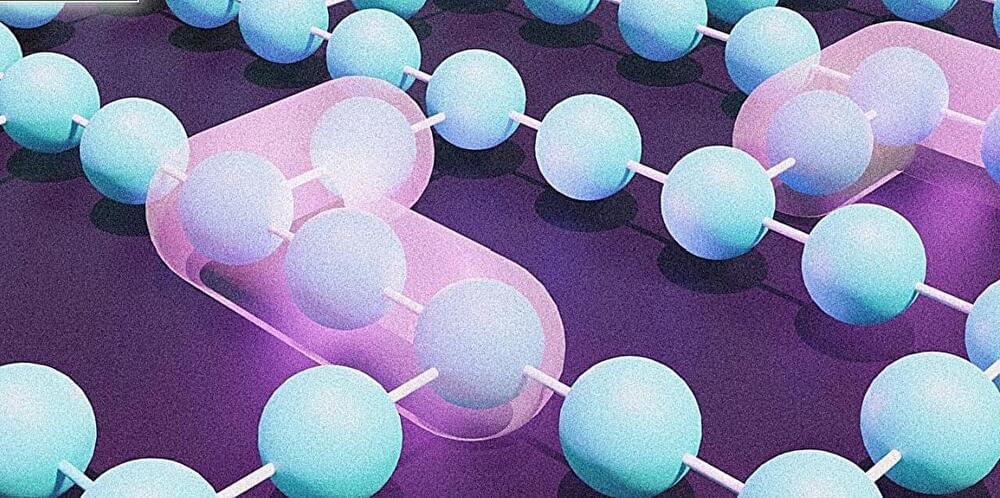

A recent breakthrough by researchers at Huazhong University of Science and Technology and the Wuhan National Laboratory for Optoelectronics has pushed optical computing further. As reported in Advanced Photonics, the team developed a large-scale optical programmable logic array (PLA) that uses parallel spectrum modulation to support eight inputs, beyond the four-input range where earlier optical logic schemes have struggled, allowing it to carry out considerably more complex logic operations.
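A programmable logic array is conventionally organized as an AND plane, which forms product terms from the inputs and their complements, feeding an OR plane, which combines selected product terms into each output. The article does not describe how the optical PLA maps these planes onto spectral channels, so the following is only a minimal electronic-style sketch of the Boolean structure a PLA evaluates, sized to eight inputs to match the reported scale. The `PLA` class and its term encoding are hypothetical illustrations, not the researchers' design.

```python
from typing import Dict, List, Sequence

# Hypothetical encoding: a product term maps an input index to the value it
# requires, e.g. {0: 1, 3: 0} means "x0 AND NOT x3". Unlisted inputs are don't-cares.
ProductTerm = Dict[int, int]


class PLA:
    """Illustrative PLA model: an AND plane of product terms feeding an OR plane."""

    def __init__(self, n_inputs: int, terms: List[ProductTerm], outputs: List[List[int]]):
        self.n_inputs = n_inputs
        self.terms = terms      # AND plane: one entry per product term
        self.outputs = outputs  # OR plane: indices of the terms ORed into each output

    def evaluate(self, bits: Sequence[int]) -> List[int]:
        if len(bits) != self.n_inputs:
            raise ValueError(f"expected {self.n_inputs} inputs, got {len(bits)}")
        # AND plane: a term is true only if every literal it lists matches the input.
        products = [all(bits[i] == v for i, v in term.items()) for term in self.terms]
        # OR plane: each output is true if any of its selected terms is true.
        return [int(any(products[t] for t in out)) for out in self.outputs]


# Two example outputs over eight inputs:
#   y0 = (x0 AND x1 AND NOT x7) OR (x2 AND x3)
#   y1 = x0 AND x1 AND ... AND x7
pla = PLA(
    8,
    terms=[{0: 1, 1: 1, 7: 0}, {2: 1, 3: 1}, {i: 1 for i in range(8)}],
    outputs=[[0, 1], [2]],
)
```

Because any Boolean function can be written as a sum of products, reprogramming only the two planes changes the function computed; a larger input count is what lets one array express richer rules without cascading several smaller ones.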

The researchers demonstrated the potential of their optical PLA by successfully running Conway’s Game of Life, a well-known two-dimensional cellular automaton. This achievement marks the first time such a complex model has been executed on an optical platform without relying on electronic components for nonlinear computing.
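In the Game of Life, each cell's next state depends only on its current state and how many of its eight neighbors are alive: a live cell survives with two or three live neighbors, and a dead cell comes alive with exactly three. This fixed local rule, applied to every cell at once, is the kind of Boolean function a logic array can evaluate in parallel. The sketch below is a plain software reference for one update step, not the optical implementation; it assumes a finite grid whose out-of-bounds cells count as dead, a boundary choice made here for illustration because the article does not say how the demonstration handled edges.

```python
from typing import List

Grid = List[List[int]]


def next_state(alive: int, live_neighbors: int) -> int:
    """Conway's rule (B3/S23) for a single cell."""
    if alive:
        return int(live_neighbors in (2, 3))  # survival
    return int(live_neighbors == 3)           # birth


def step(grid: Grid) -> Grid:
    """Advance one generation; cells outside the grid are treated as dead (assumption)."""
    if not grid:
        return []
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count live cells among the eight surrounding positions.
            count = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if dr == 0 and dc == 0:
                        continue
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        count += grid[rr][cc]
            new[r][c] = next_state(grid[r][c], count)
    return new


# A "blinker": a three-cell bar that flips between horizontal and vertical.
blinker = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
```

Calling `step(blinker)` turns the horizontal bar vertical, and a second call restores the original pattern, a quick check that the rule is applied correctly.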