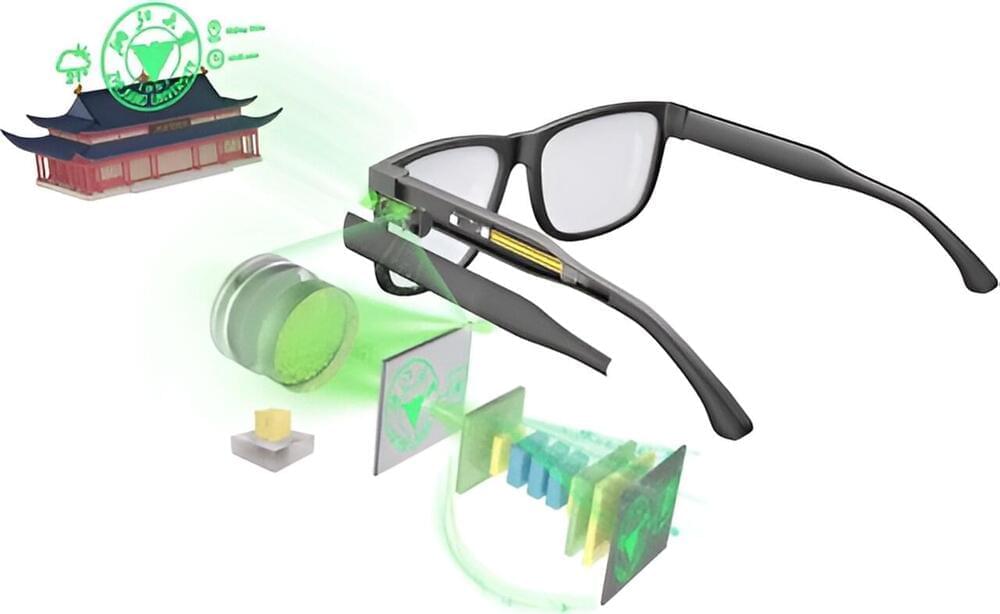

A big emphasis of the Journey Lens is pulling you away from your smartphone and letting you manage more of your day-to-day experience from the glasses. That’s why this lightweight device has that small see-through display in the upper right-hand corner, acting almost like an annotation on what you encounter rather than something in the middle of your field of view that’s trying to control what you see.

Phantom Technology offers several monthly plans based on the experience the user wants with the Journey Lens glasses. These start with a free plan and go up to a premium pro plan at $18 a month, which includes early access to new features and something called Deep Focus.

As shown in the image above, the plans differ in how many apps you can connect to the glasses for getting notifications, reading messages and so on: just three with the standard plan, or unlimited apps with the premium and premium pro plans. Three months of the premium plan is included for free with a pre-order of the $195 device, but I believe Phantom Technology would be better served by giving everyone three free months of this plan so that new users can understand the value.