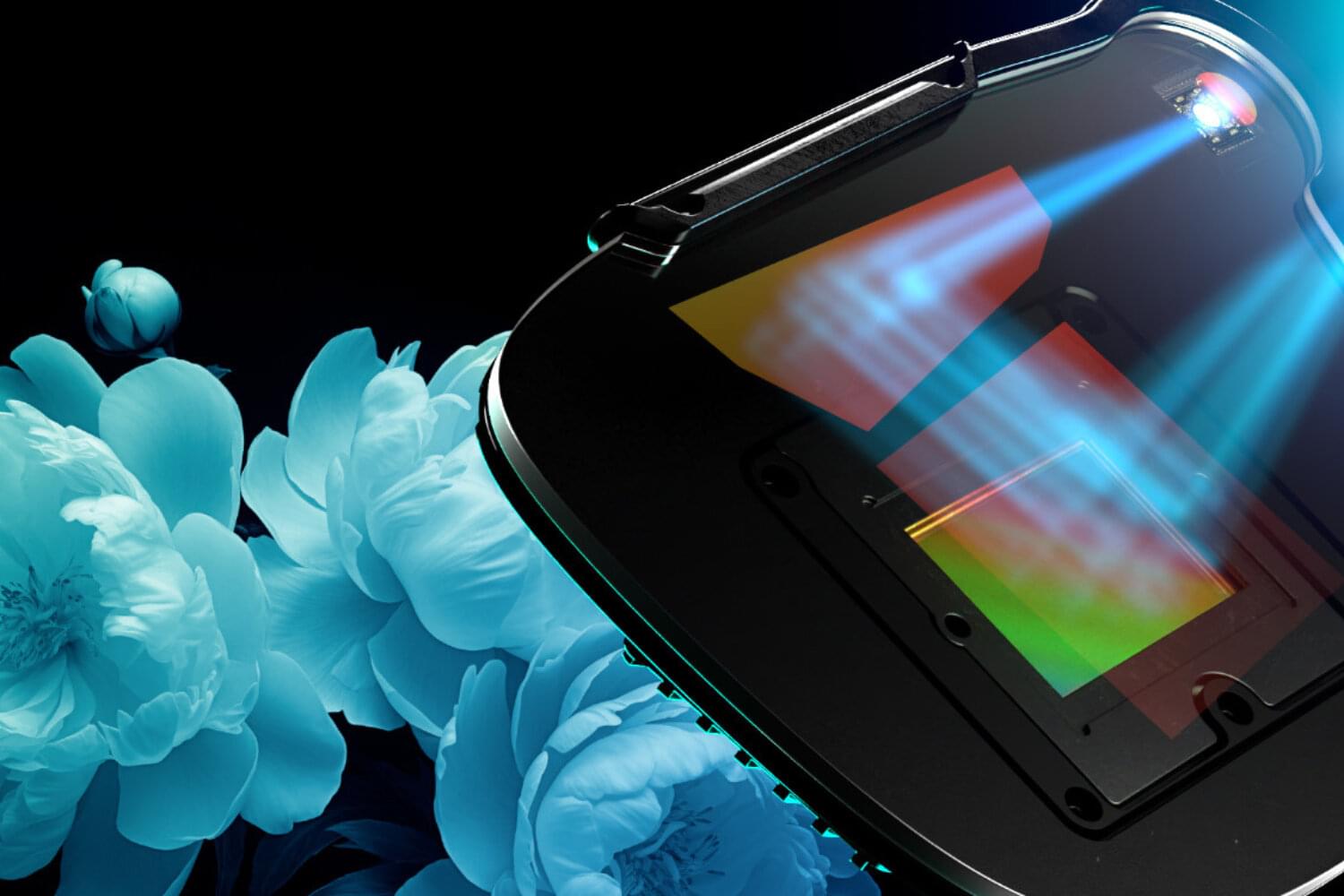

Meta has developed an ultra-thin flat-panel laser display that could lead to lighter, more immersive augmented reality (AR) glasses and improve the picture quality of smartphones, tablets and televisions. The new display is only two millimeters thick and produces bright, high-resolution images.

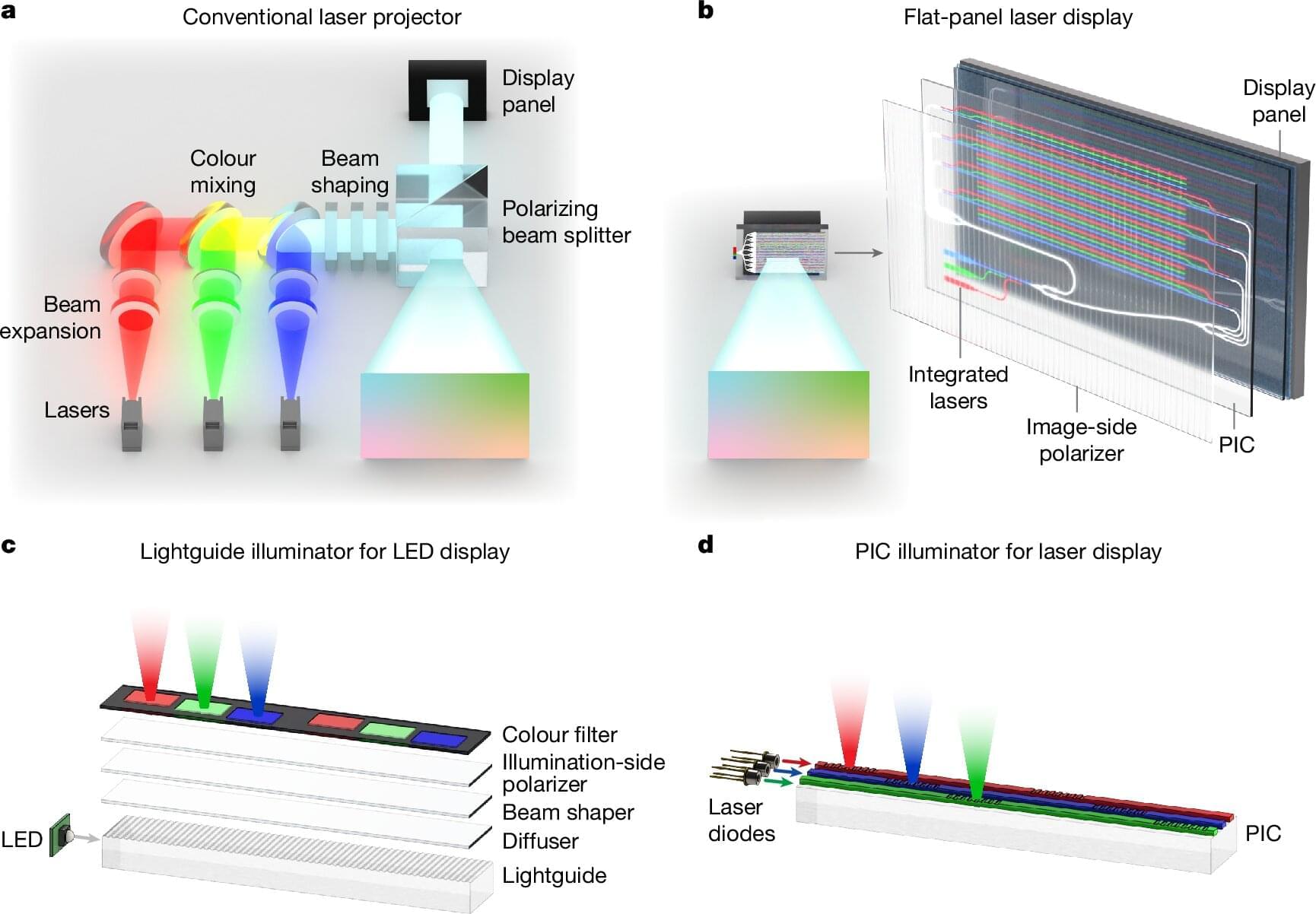

Flat-panel displays, particularly those illuminated by LEDs, are ubiquitous, appearing in everything from smartphones and televisions to laptops and computer monitors. But however good the current technology is, the search for something better continues. Lasers promise superior brightness, and they could make displays smaller and more energy efficient by replacing bulky, power-hungry components with compact laser-based ones.

However, current laser displays still need large, complex optical systems to shine light onto screens. Previous attempts at making flat-panel laser displays have fallen short because they required complex setups or were too difficult to manufacture in large quantities.