Deep-learning models have proven to be highly valuable tools for making predictions and solving real-world data-analysis tasks. Before they can be deployed in real software and devices such as cell phones, however, these models require extensive training in physical data centers, which can be both time- and energy-consuming.

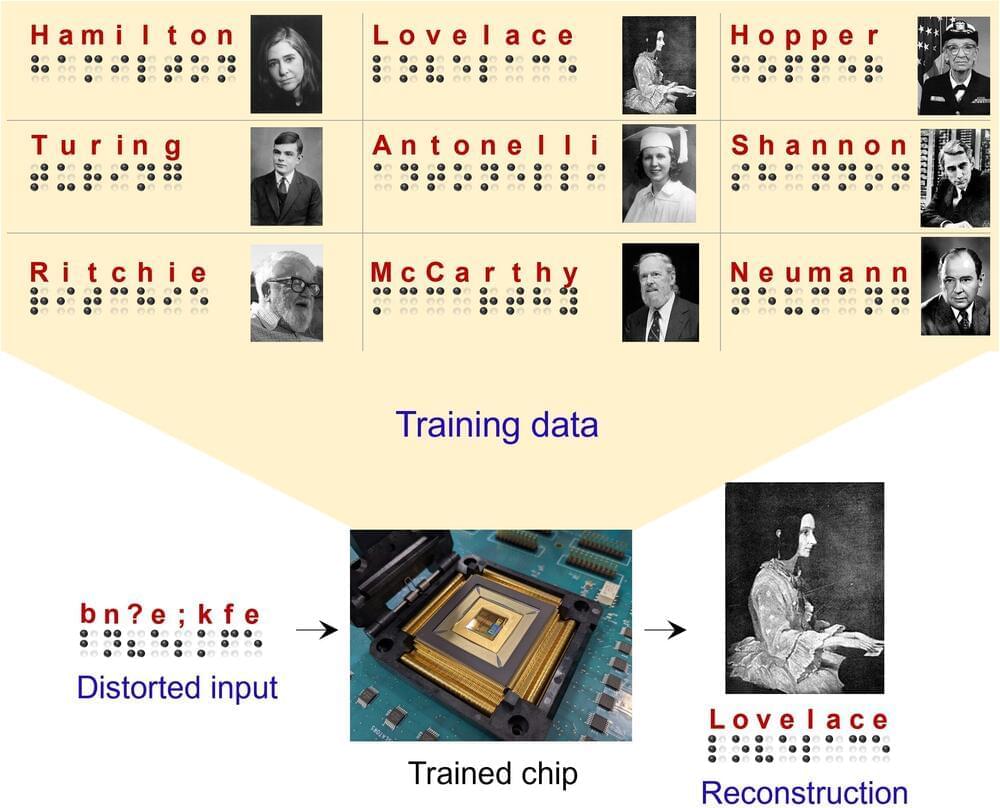

Researchers at Texas A&M University, Rain Neuromorphics and Sandia National Laboratories have recently devised a new system for training deep-learning models more efficiently and on a larger scale. This system, introduced in a paper published in Nature Electronics, relies on new training algorithms and memristor crossbar hardware that can carry out multiple operations at once.

“Most people associate AI with health monitoring in smart watches, face recognition in smart phones, etc., but most of AI, in terms of energy spent, entails the training of AI models to perform these tasks,” Suhas Kumar, the senior author of the study, told TechXplore.