A group of computer scientists once backed by Elon Musk has caused some alarm by developing an advanced artificial intelligence (AI) they say is too dangerous to release to the public.

OpenAI, a research non-profit based in San Francisco, says its “chameleon-like” language prediction system, called GPT-2, will only ever see a limited release in a scaled-down version, due to “concerns about malicious applications of the technology”.

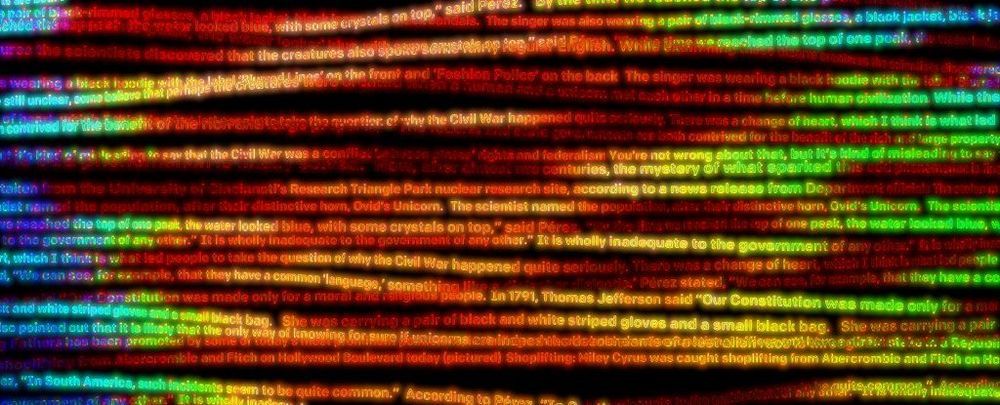

That’s because the computer model, which generates original paragraphs of text based on what it is given to ‘read’, is a little too good at its job.
