
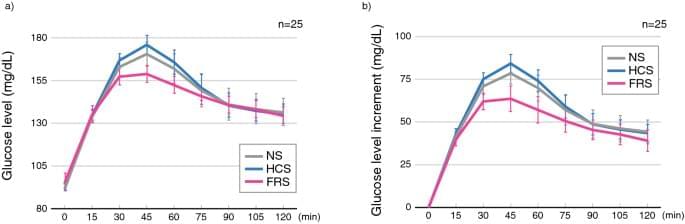

In this study, the FRS condition generally suppressed the increase in glucose levels during the OGTT compared with the HCS condition. The same tendency was observed when glucose levels were compared 1 h after glucose loading (Supplementary Fig. S2 online). The suppressive effect of the FRS condition on glucose elevation was more pronounced in the older age group and in the group with high HbA1c, whereas it was not evident in the younger age group or in the group with low HbA1c. A similar tendency was observed when we divided the participants into two groups, high and low, according to their glucose levels in the OGTT (Supplementary Fig. S3 online). These converging findings suggest that sounds with inaudible HFC are more effective in improving glucose tolerance in individuals at higher risk of glucose intolerance.

It is widely recognized in daily clinical practice that stress has a significant impact on glycemic control in patients with diabetes. Many reports have highlighted stress-induced increases in blood glucose levels in patients with type 2 diabetes22,23,24,25,26,27,28,29,30,31. In addition, a large population-based cohort study of Japanese participants reported that individuals with high subjective stress levels had a 1.22-fold (women) and 1.36-fold (men) higher risk of developing diabetes than those with low levels32. This suggests that stress management influences both disease progression in patients with diabetes and the prevention of onset in healthy individuals or those with potential prediabetes. However, the effects of stress vary so widely among individuals, in both type and degree33,34,35, that they are difficult to study under experimentally controlled conditions, unlike pharmacotherapy.

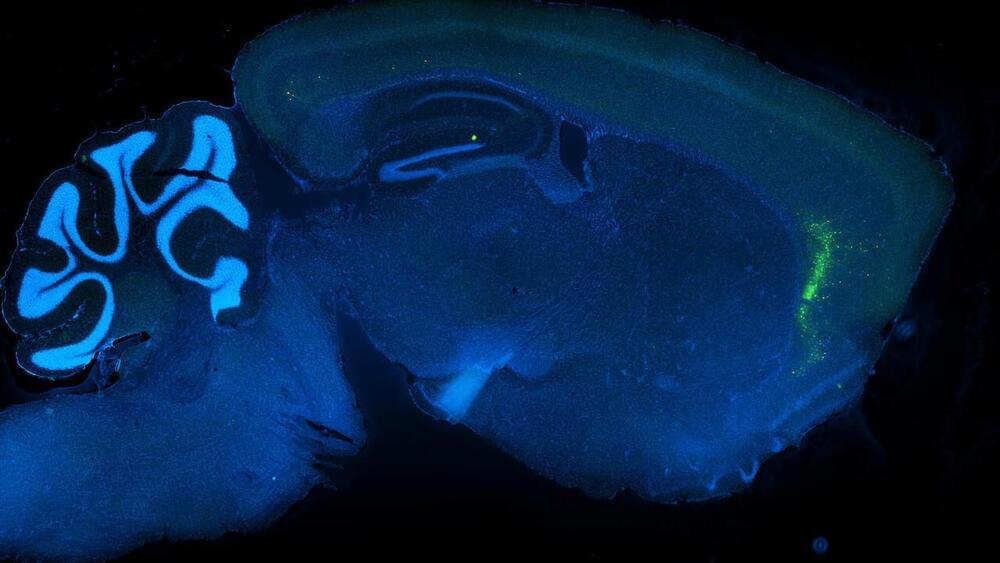

The effects of stress on blood glucose levels are believed to be mediated primarily by neural control from the brainstem and hypothalamus36,37. Subjective stress varies considerably among individuals and is difficult to measure objectively. Rather than focusing on its reduction, we therefore considered it important to investigate whether acoustic information acting on the hypothalamus and brainstem may have physiological effects on glucose tolerance that are independent of psychological effects.