Utopian Surgery: Early Arguments Against Anesthesia in Surgery, Dentistry, and Childbirth

by Lifeboat Foundation Scientific Advisory Board member David Pearce.

Introduction

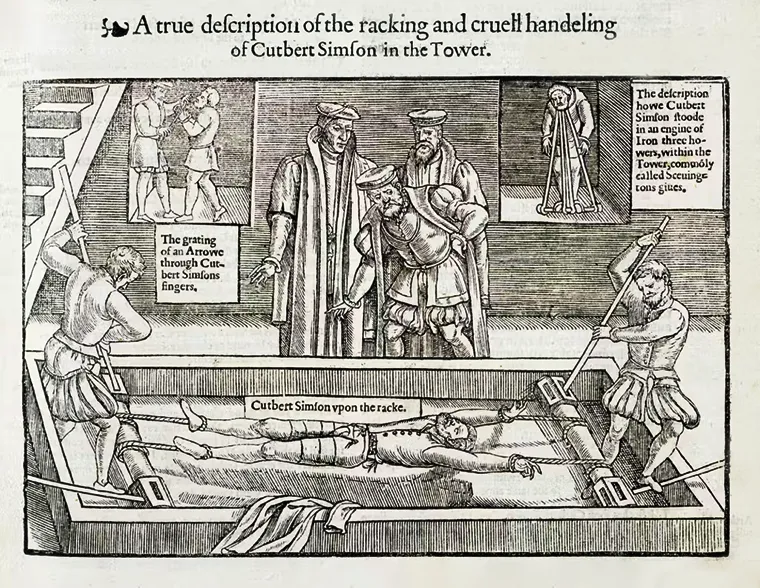

Before anesthesia, surgery was like medieval torture.

Before the advent of anesthesia, medical surgery was a terrifying prospect. Its victims could suffer indescribable agony. The utopian prospect of surgery without pain was a nameless fantasy — a notion as fanciful as the abolitionist project of life without suffering still seems today. The introduction of diethyl ether CH3CH2OCH2CH3 (1846) and chloroform CHCl3 (1847) as general anesthetics in surgery and delivery rooms from the mid-19th century offered patients hope of merciful relief. Surgeons were grateful as well: within a few decades, controllable anesthesia would at last give them the chance to perform long, delicate operations.

So it might be supposed that the adoption of painless surgery would have been welcomed by theologians, moral philosophers and medical scientists alike. Yet this was not always the case. Advocates of the “healing power of pain” put up fierce if disorganized resistance.

The debate over whether to use anesthetics in surgery, dentistry and obstetrics might now seem of merely historical interest. Yet it is worth briefly recalling some of the arguments against pain-free surgery raised by a minority of 19th-century churchmen, laity and traditionally minded physicians.

For their objections parallel the arguments put forward in the early 21st century against technologies for the alleviation or abolition of “emotional” pain, whether directed against the use of crude “psychic anesthetizers” like today’s SSRIs or, more paradoxically, against the use of tomorrow’s mood-elevating feeling-intensifiers, i.e. so-called “empathogen-entactogens”: hypothetical safe and long-acting analogues of MDMA.

It’s worth recalling too that early critics of surgical and obstetric anesthesia weren’t (all) callous reactionaries or doctrinaire religious fundamentalists. Nor are all contemporary critics of the use of pharmacotherapy to treat psychological distress. The doubters, critics and advocates of caution were right to consider the potential diagnostic role of pain — and to emphasize that the risks, mechanisms and adverse side-effects of the new anesthetic procedures were poorly understood.

In Victorian Britain, around 1 in 2,500 people given chloroform anesthesia died as a direct consequence, and around 1 in 15,000 of those given ether. These figures pale beside the proportion who died from post-surgical infection; but they compare very unfavorably with the present-day UK figure of around 1 in 250,000 people who die as a direct result of undergoing surgical anesthesia. Safe and sustainable total anesthesia that is 100% reliable, and reliably reversible, is as hard to achieve as safe and sustainable analgesia, euthymia, or euphoria. Yet the technical and ideological challenges ahead in banishing suffering from the world shouldn’t detract from the moral case for its abolition.

Historical Background

The effect of inhaling ether, chloroform, nitrous oxide and similar agents was christened by the physician-poet Oliver Wendell Holmes, Sr (1809–94). In a letter to etherization pioneer William Morton, who had solicited his opinion, Holmes coined the words “anæsthetic” and “anæsthesia” from the Greek an for “without” and esthesia for “sensibility”. Holmes once remarked that if the whole pharmacopoeia of his era “were sunk to the bottom of the sea, it would be all the better for mankind, and all the worse for the fishes”; but he knew anesthetics were a spectacular exception.

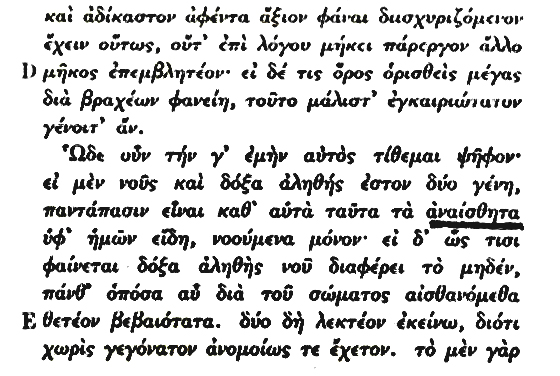

The word anesthesia was used in Plato’s writings.

Strictly speaking, the word for anesthesia wasn’t new — the Greeks themselves occasionally used the term, notably the herbalist physician and surgeon Dioscorides (c.40-c.90 AD). It had been revived on more than one occasion since: Bailey’s English Dictionary (1724) defines anesthesia as “a defect of sensation”. But Holmes was the first to propose the term to designate the state of unconsciousness induced by gas-inhalation for painless surgery. Holmes apparently thought hard about his recommendation, and urged Morton to consult with other scholars too. For he recognized that as news of the revolution spread like wildfire across the globe, the term would be “repeated by the tongues of every civilized race of mankind.”

The concept of pain-relief and even total insensibility for surgery wasn’t original or unfamiliar. However, for thousands of years its reliable prospect had seemed impossibly utopian — as unrealistic as a future of lifelong bliss seems at present.

The single or combined use of stupefying agents such as ethyl alcohol, mandragora, cannabis and opium to deaden the sensibilities prior to surgery had been practised in classical antiquity. Herodotus (c.484-c.432 BC) relates how the Scythians induced stupor by inhalation of the vapors of some kind of hemp, a remarkable if apocryphal feat in the low-THC era before superskunk.

Inhaling vapors to alter one’s state of consciousness was practised too by the pythonesses of Delphi, sacred female oracles who breathed in vapors from a rock crevice in the course of their priestly duties. However, inhalation was performed for the purposes of divination rather than anesthesia.

Assyrian surgeons apparently half-strangled children before circumcising them, leaving them semi-asphyxiated. This practice sounds almost as barbarous as the operation itself.

Centuries later, Saint Hilary Bishop of Poitiers (315–367), exiled to the Orient in 356 A.D. by the Roman Emperor Constantius, described drugs that “lulled the soul to sleep”. But if they were administered in adequate dosage, there was a risk that the soul would not wake up, in this world at least.

Apuleius, a 5th century compiler of medical literature, recommends that “if anyone is to have a member mutilated, burned or sawed let him drink half an ounce with wine, and let him sleep till the member is cut away without any pain or sensation.” Unfortunately, extreme pain tends to exert a sobering effect.

A few medievals were surprisingly resourceful. The 13th century occultist, alchemist and learned physician Arnold of Villanova (c.1238-c.1310) searched for an effective anesthetic. In a book usually credited to him, a variety of medicines are named and different methods of administration are set out, designed to make the patient insensible to pain, so that “he may be cut and feel nothing, as though he were dead.” For this purpose, a mixture of opium, mandragora, and henbane was to be used. This method was similar to inhaling the vapors of the soporific sponge mentioned around 1200 by Nicholas of Salerno, and sporadically in different sources from the 9th to 14th centuries.

Arnold’s recipe was modified by the Dominican friar Theodoric of Lucca (1210–1298), who added the mildly narcotic juice of lettuce, ivy, mulberry, sorrel and hemlock to the opium-mandragora mixture. From this decoction, a new soporific sponge would be boiled and then dried; when needed again, it was dipped in hot water and applied to the nostrils of the afflicted.

The typical effect still left much to be desired: general anesthesia avant la lettre was more of an aspiration than an achievement. Yet if the outcome was often disappointing, so too are the response- and remission-rates for drugs licensed to treat emotional distress in the era of Big Pharma.

Other painkilling techniques for surgery had a longer pedigree. Blood-letting undoubtedly relieved pain, though it was carried out to dangerous and often fatal excess. Before the French military surgeon Ambroise Paré (c.1510–1590) popularized the ligature of blood vessels in the 16th century, patients undergoing surgery frequently died, either from bleeding or as a result of the method used to close the wound.

Wound-closure usually entailed cauterization by the application of hot oil or hot irons. To seal the exposed blood vessels after amputations, the stump of the bloodied limb might be dipped in boiling pitch. At a distance of several centuries, the use of boiling oil strikes us as brutal and primitive; but it’s worth recalling that as late as the 20th century and beyond, the deficiencies of somatic and psychiatric medicine alike could still drive patients to suicidal despair.

Primitive method of pain-relief.

Further options for surgical pain-relief were explored with limited success. Non-pharmacological methods besides blood-letting included the use of cold water, ice, distraction by counterirritation with stinging nettles, carotid compression and nerve clamping. Concussion anesthesia relied on the hammer stroke: the victim’s head was first encased in a leather helmet, after which the surgeon delivered a solid blow to his patient’s skull with a wooden hammer. A less refined concussion method involved a knockout punch to the jaw.

In the early 19th century, the most common technique was “Mesmerism”, a pseudo-scientific hypnosis dressed up in the language of “animal magnetism”. Its eponymous originator was Franz Anton Mesmer (1734–1815). Mesmer believed that all living bodies contain a magnetic fluid. By manipulating this fluid into a state of balance within the body, physical health could allegedly be restored. It’s hard to rescue such notions and their proponents from what E.P. Thompson called “the enormous condescension of posterity”; but equally, it’s hard to know whether our own descendants will more probably feel condescension or compassion for today’s lame theories of, say, consciousness or pain relief.

Across the Atlantic, the New World enjoyed the benefits of coca. Inca medicine men sucked coca leaf with vegetable ash and dripped saliva into the wounds of their patients. Thanks to Viennese ophthalmologist Karl Koller (1857–1944) the anesthetic effects of the celebrated product of the coca plant were to prove a godsend for surgical operations on the eye. Cocaine relieves other forms of pain too, though these uses are now deprecated.

In the East, the Chinese developed a long tradition of acupuncture. Unlike total anesthesia, which confers benefits on true believer and sceptic alike, acupuncture works well only with the highly suggestible, and far from reliably even then. But the endogenous opioids released by its application may be better for even the sceptical patient than no palliative relief at all. More obscurely, the Chinese physician Hua Tuo (c.110-c.207) reputedly used hemp boiled with wine to anesthetize his patients. It is claimed Hua Tuo performed complex surgical operations on the abdominal organs, though only fragmentary details of his exploits with “foamy narcotic powder” are known.

During the Middle Ages in the West, the practice of using natural soporifics, sedatives and pain-relievers to ease the agonies of surgical intervention fell largely into disuse. This neglect was mainly due to the influence of the Christian Church, many of whose leading lights were more adept at causing pain than relieving it. Saving the soul from eternal damnation was conceived as more important than healing the mortal body — a reasonable inference given the assumptions on which it was based.

Afflictions of the flesh were commonly understood as punishment for sin, original or otherwise. Pain was supposedly the result of Satanic influence, demonic possession or simply The Will of God rather than an evolved response to potentially noxious stimuli. Investigators who aspired to relieve mortal suffering and understand the workings of the body were not highly esteemed. In the words of Cistercian abbot Saint Bernard of Clairvaux (1090–1153): “…to consult physicians and take medicines befits no religion and is contrary to purity.”

Surgery and anatomical dissection were widely perceived as shameful activities, not least because they threatened the long-awaited Resurrection of the Flesh. In retrospect, it’s clear the theological conception of disease retarded medical progress for generations — no less than the theological conception of mental disorder impedes progress toward a cruelty-free world to this day.

Viewing our Darwinian pathologies of emotion as God-given rather than gene-driven obscures how biotechnology can abolish suffering of the flesh and spirit alike. Tomorrow’s genetic medicine promises to turn heaven-on-earth from a pipe dream into a policy option. Yet if pain is a punishment for original sin, then one would assume it is wicked as well as futile to try and escape it.

Realistic or otherwise, by the 19th century a new age of humanitarianism and scientific optimism about mankind’s capacity for earthly self-improvement was (slowly) dawning. The isolation and study of carbon dioxide, oxygen and other gases by early scientific luminaries such as Black, Priestley and Lavoisier gave birth to the ill-conceived but seminal discipline of “pneumatic medicine”.

Its most famous champion was Thomas Beddoes (1760–1808), founder of the Pneumatic Medical Institution in Bristol. Beddoes hired the teenage Humphry Davy as its Research Director. Doctors and patients tried inhaling the newly discovered gases and vapors of volatile liquids to see if their inhalation cured any diseases.

Tooth extraction under nitrous oxide.

The first gas recognized to have anesthetic powers was nitrous oxide, N2O. Colorless, non-flammable and faintly sweet-smelling, nitrous oxide was first isolated and identified in 1772 by the English chemist Joseph Priestley (1733–1804). Priestley was a remarkable polymath: a Unitarian clergyman, political theorist, natural philosopher and educator. Writing of his research on gases, he observed, “I cannot help flattering myself that, in time, very great medicinal use will be made of the application of these different kinds of airs…” [Priestley J., Experiments and Observations on Different Kinds of Airs. 6 vols. 1:228, 1774].

Nitrous oxide doesn’t induce an anesthesia nearly as deep or effective as ether: it’s a strong analgesic by virtue of its tendency to promote opioid peptide release in the periaqueductal gray area of the midbrain; but unlike ether, it’s only a weak anesthetic. Nitrous oxide is not a muscle relaxant. Induction is rapid because of its low solubility in blood. Its metabolism in the body is minimal, but it oxidizes vitamin B-12 and thereby inactivates the B-12-dependent enzyme methionine synthase, which is critical to DNA synthesis and cell proliferation; chronic use of nitrous oxide can therefore cause bone marrow damage. Nitrous oxide is short-acting and generally regarded as safe to use. Even so, patients are in danger of hypoxia if it’s employed at the very high concentrations needed when it’s the sole anesthetic agent.

The exhilarating effects of inhaling nitrous oxide were noted by English chemist Sir Humphry Davy (1778–1829). “Whenever I have breathed the gas,” he wrote, “the delight has been often intense and sublime.” “Sublime” may not be quite le mot juste: Davy found that inhaling the compound made him want to giggle uncontrollably until he passed out. So the illustrious scientist dubbed it “laughing gas”. Regrettably, such a frivolous nickname probably discouraged the idea that the gas might serve a serious medical purpose. In like manner today, the racy street slang of short-acting recreational drugs belies the clues they offer to a post-genomic era of mental superhealth.

Sublime or otherwise, the nitrous oxide experience was so much fun Davy wanted to share it with his friends, notably the Romantic poets Samuel Taylor Coleridge (1772–1834) and Robert Southey (1774–1843). “I am sure the air in heaven must be this wonder working gas of delight”, enthused Southey. Tantalizingly, Davy himself remarked on “the power of the immediate operation of the gas in removing intense physical pain”; in 1799 he inhaled nitrous oxide to banish the pain of an erupting molar tooth. Davy also discovered that taking the gas could induce “voluptuous sensations”.

His early research at Beddoes’ Pneumatic Medical Institution is well documented, even though its implications were missed. In his 80,000-word book Researches, Chemical and Philosophical; Chiefly Concerning Nitrous Oxide, or Dephlogisticated Nitrous Air, and Its Respiration (1800), Davy describes the different planes of anesthesia [stage 1: analgesia; stage 2: delirium; stage 3: surgical anesthesia; stage 4: respiratory paralysis], though without appreciating the significance of the third plane, the one suitable for surgical operations.

Most tantalizingly of all, Davy explicitly suggested the use of nitrous oxide as an analgesic during surgery, since it “…appears capable of destroying physical pain, it may probably be used with advantage during surgical operations in which no great effusion of blood takes place”. Unfortunately, this was an idea ahead of its time: several decades of continuing surgical mayhem were to pass before the worldwide anesthetic revolution.

Davy’s student, Michael Faraday (1791–1867), studied nitrous oxide too. He compared its pain-relieving effects with the action of sulphuric ether. In a brief, anonymous 1818 article in The Quarterly Journal of Science and the Arts, Faraday noted how:

“When the vapor of ether mixed with common air is inhaled it produces effects very similar to those occasioned by nitrous oxide…a stimulating effect is at first perceived at the epiglottis, but soon becomes very much diminished…By the imprudent administration of ether, a gentleman was thrown into a very lethargic state, which continued with occasional periods of intermission for more than 30 hours.”

In the years ahead, there were other missed opportunities, dashed hopes and false starts. In 1824, English country doctor Henry Hill Hickman (1800–30), a contemporary of Davy and Faraday, performed (allegedly) painless operations upon non-human animals using carbon dioxide-induced anesthesia, thereby more-or-less asphyxiating the mice, kittens, rabbits, puppies and adult dog whose body-parts he amputated. Hickman induced in his victims a condition he called “suspended animation”.

This demonstration of inhalational anesthesia didn’t create the stir he anticipated, arousing the interest only of Napoleonic surgeon Baron Dominique-Jean Larrey (1766–1842). Hickman canvassed the possibility of pain-free surgery for humans, though the asphyxial narcosis induced by carbon dioxide makes this particular gas an unsuitable agent. In vain, he sent accounts of his work to the Royal Society of London. It seems Hickman’s experiments reminded the Royal Society’s President, the ageing Sir Humphry Davy, of the undignified excesses of his youth. Nothing came of it beyond a footnote in the history books.

Instead, gases and vapors were used by medical instructors and their students for the purposes of hilarity and intoxication rather than the performance of pain-free surgery. Nitrous oxide in particular was exploited in stage shows. An advertisement for one such public entertainment promised that “the effect of the gas is to make those who inhale it either laugh, sing, dance, speak or fight, etc., etc., according to the leading trait of their character. They seem to retain consciousness enough not to say or do that which they would have occasion to regret.”

Admixed with oxygen, nitrous oxide remains in surgical use. But the first really effective and (relatively) safe general anesthetic to gain acceptance was the now largely abandoned ether.

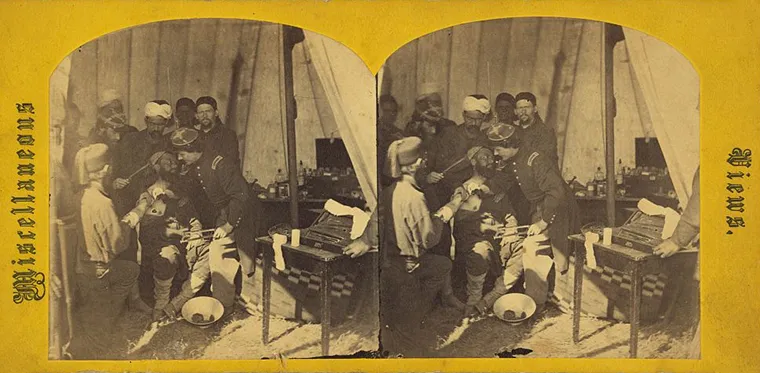

Ether use in the U.S. Civil War. Note an ether mask is held just to the left of the patient’s mouth and in front of the Assistant Surgeon’s face.

Ether is liquid at room temperature, but it vaporizes very easily. It can therefore readily be either swallowed or inhaled. Unlike nitrous oxide, its vapor can induce anesthesia without diluting the oxygen in room air to dangerously hypoxic levels. Ether itself had a long history before its use as a surgical anesthetic. It was marketed under the brand name Anodyne by Halle medical professor Friedrich Hoffmann (1660–1742).

Professor Hoffmann recommended Anodyne for pain due to earache, toothache, intestinal cramps, kidney stones, gallstones and menstrual distress. In England, Materia Medica (London, 1761) by W. Lewis describes ether as “one of the most perfect tonics, friendly to the nerves, cordial, and anodyne.” Readers are advised that three to twelve drops should be taken on a lump of sugar, and swallowed down with water. In the 1790s, medical maverick James Graham (1745–1794), “a famous London quack, proprietor of the Temple of Hymen and owner of the Celestial Bed,” habitually inhaled an ounce or two of ether in public several times a day. He took ether “with manifest placidity and enjoyment”. But no one who witnessed him seems to have thought of exploiting its effects for operations.

Ether was first discovered by the Catalan philosopher-chemist Raymundus Lullius (1232–1315). Lullius called it “sweet vitriol”, the name it kept until the German-born London chemist W.G. Frobenius rechristened it in 1730. In Greek, “ether” means heavenly. Its synthesis was described by the German alchemist Valerius Cordus (1515–1544) in 1540.

Soon afterwards, Philippus Aureolus Theophrastus Bombastus von Hohenheim (1493–1541), better known as Paracelsus, noted its tendency to promote sleep; and he observed how sweet vitriol/ether “…quiets all suffering without any harm and relieves all pain, and quenches all fevers, and prevents complications in all disease.” He reported that chickens take ether gladly and “…undergo prolonged sleep, awake unharmed”. He had picked up much of his medical knowledge while working as a surgeon in several of the mercenary armies of the period; 16th-century warfare was endemic, brutal and bloody.

Paracelsus was not unduly afraid to challenge received medical wisdom or its proponents: “This is the cause of the world’s misery, that your science is founded upon lies. You are not professors of the truth, but professors of falsehood”, he informed his fellow doctors. Yet Paracelsus didn’t make the intellectual leap needed to take advantage of the properties of ether for human surgical medicine. Had he done so, then given his undoubted brilliance as a publicist, centuries of untold suffering might have been averted.

The first well-documented use of general anesthesia in surgery probably dates to early-19th-century Japan. On 13th October 1804, Japanese doctor Seishu Hanaoka (1760–1835) surgically removed a breast tumor under general anesthesia. His patient was a 60-year-old woman called Kan Aiya. Her sisters had all died of breast cancer; Kan sought Hanaoka’s help.

For the anesthetic, Hanaoka used “Tsusensan”, an orally administered herbal preparation he had painstakingly developed over many years. Its main active ingredient seems to have been the plant Chosen-asagao. Many details of Hanaoka’s early life and experiments are obscure. Scholars rely on “Mayaku-ko” (a collection of anesthetics and analgesics), a pamphlet written by his close colleague Shutei Nakagawa in 1796.

Hanaoka arrived in Kyoto at the age of 23. There he learned both traditional Japanese medicine and Dutch-inspired surgery. For centuries, the Western presence in Japan was limited by law to a single island in Nagasaki Bay, and the import of medical books was prohibited. But Japanese physicians were able to write down the orally transmitted medical lore of their Dutch counterparts. Crucially, Hanaoka combined surgical prowess with a belief in “the duty to relieve pain”.

Apparently he performed numerous experiments on non-human animals in his search for a non-toxic anesthetic. Hanaoka went on to perform scores of operations on human beings under anesthesia; he even operated on his daughter and wife. Unfortunately, under the national seclusion policy of the Tokugawa Shogunate (1603–1868), Japan was essentially isolated. Western physicians and their patients knew nothing of Hanaoka’s work and tradition.

The breakthroughs that heralded the modern era of anesthesiology were to come in the New World. Once again the story is messy and involved, albeit better known. In Artificial anesthesia and anesthetics (New York, William Wood and Co., 1881), Henry M. Lyman records how in January 1842 the chemist and Berkshire Medical College student William E. Clarke (1818–78) administered ether on a towel to a Miss Hobbie, after which the dentist, Elijah Pope, extracted a painful tooth. It seems Clarke was inspired by his earlier experience of “ether frolics” in Rochester.

Yet this was a one-off. Somehow Clarke and Pope failed to recognize the potentially momentous ramifications of what they had done. As far as we know, they neither wrote about nor repeated their feat. So the first clinical use of ether as a surgical general anesthetic on humans is conventionally credited to the rural Georgia physician and pharmacist Crawford Williamson Long (1815–78).

On 30th March 1842, Dr Long removed a cyst from the neck of a Mr James Venable under ether anesthesia; Mr Venable consented to be a guinea-pig for the occasion on account of his “dread of pain”. Dr Long had learned of ether’s properties while ether-frolicking at medical school at the University of Pennsylvania. The use of such social intoxicants was as prevalent in the 1830s and 1840s as the MDMA-fuelled raves of a later era. At public shows, ether-filled balloons were liberally handed out for the enjoyment of the audience, a practice that might fruitfully enliven some academic lectures even today. In the era of ether frolics, medical students and budding chemists helped prepare gases for the festivities, a tradition of service that likewise continues more discreetly in the groves of academe even now. Historically, it seems likely that this medical connection finally helped several people, more-or-less independently, to draw the link between a form of stage-show entertainment and the opportunity to perform pain-free operations.

In any event, although Dr Long administered anesthesia to his patients on various occasions, and extended its use to obstetrics, he didn’t publicize his discovery beyond his local practice. Indeed, until the publication in 1849 of his article “An Account of the First Use of Sulfuric Ether by Inhalation as an Anesthetic in Surgical Operations” in the Southern Medical and Surgical Journal, his work was mostly unknown to the wider world.

Long’s adoption of pain-free surgery was common knowledge in Jefferson, Georgia, at least: some local residents apparently suspected him of practising witchcraft, others thought merely that it was unnatural, and religious traditionalists objected that pain was God’s way of cleansing the soul.

Long’s explanation of his early reticence was rational; it may even be true, though the full story is probably more complex. “The question will no doubt occur, why did I not publish the results of my experiments in etherization soon after they were made? I was anxious, before making my publication, to try etherization in a sufficient number of cases to fully satisfy my mind that anesthesia was produced by the ether, and was not the effect of the imagination, or owing to any peculiar insusceptibility to pain in the persons experimented on.”

Whatever the reason, the anesthetic revolution was now imminent. Connecticut dentist Horace Wells (1815–1848) attempted a public demonstration of surgical anesthesia in January 1845. Wells had earlier been one of the stage-volunteers who tried inhaling nitrous oxide during a demonstration by P.T. Barnum’s apt disciple “Professor” Gardner Quincy Colton (1814–98) at Union Hall in Hartford, Connecticut. One of the other volunteers, Samuel Cooley, a clerk at the local drugstore, injured his legs while agitated in the immediate aftermath of inhaling the gas. Wells afterwards asked the victim if his injury was painful. Cooley said he hadn’t felt anything at all; he was surprised to find blood all over his leg.

Chance, as the proverb goes, favors the prepared mind; and crucially in this context, Wells was a tender-hearted dentist who hated to see his patients suffer. He had always sought ways to minimize their distress as best he could; dental pain was a notoriously terrible affliction, and so was its cure. Fatefully, Wells now conceived the notion of pain relief, or anesthesia, by gas inhalation. He asked Colton if he knew any reason why nitrous oxide couldn’t be used for dental extractions. Colton said he knew of no good reason.

So the next day Wells submitted to the extraction of one of his own molars by fellow dentist Dr John Riggs. Colton administered the nitrous oxide. Almost insensible, Wells felt no more than a pinprick. Groggy at first, he soon recovered his senses. “A new era in tooth pulling!” he exclaimed; and also, “It is the greatest discovery ever made!” More conservatively, Stuart Hameroff nominates anesthesia as the greatest invention of the past 2000 years.

Wells was overjoyed. Hugely encouraged by his success, he went on, together with his colleague Riggs, to extract teeth from their patients with the aid of nitrous oxide. Wells experimented energetically with ether and other agents too; but he preferred nitrous oxide because it was usually safer.

He was now ready to spread news of his discovery as widely as possible. With the help of his former colleague Morton, Wells approached Dr John Collins Warren (1778–1856), founder of the New England Journal of Medicine and Massachusetts General Hospital, bearing an account of his marvelous innovation. Warren was sceptical; but with some reluctance he agreed to cooperate. If fate had been kinder, the name of Horace Wells might have echoed down the ages as one of the greatest benefactors of humankind.

Unfortunately, during the public demonstration at Massachusetts General Hospital that Wells staged to publicize his discovery, the patient stirred and cried out. He had been under-anesthetized; the gasbag was withdrawn too soon. The reaction of Wells’ audience, a class of irreverent medical students, was scornful. There was laughter and cries of “humbug”. Wells was mortified.

In the rest of his short life, it seems he never really recovered from the humiliation. Wells attempted to resume his normal practice back in Hartford. In the wake of the Massachusetts disaster, he tried using nitrous oxide anesthesia once more the very next day. His determination not to under-medicate led him instead to administer too much gas; he almost killed his patient. Shortly thereafter Wells had some kind of nervous breakdown.

For a time, he referred all his patients to his colleague Riggs. Nonetheless, Wells wrote a dissertation A History of the Discovery of the Application of Nitrous Oxide Gas, Ether, and Other Vapors to Surgical Operations (1847). He searched for an alternative to the nitrous oxide gas that had let him down in Massachusetts. Tragically, in the course of his experiments he became a chloroform addict.

While intoxicated, he attacked a prostitute with sulfuric acid. Fearing he would now be utterly discredited, and resentful that his unscrupulous protégé Morton was intent on stealing the credit Wells deserved, he died shortly afterwards by his own hand, embittered and insane. The Daily Hartford Courant recorded:

“The Late Horace Wells. The death of this gentleman has caused profound and melancholy sensation in the community. He was an upright and estimable man, and had the esteem of all who knew him, of undoubted piety, and simplicity and generosity of character.”

Historical curiosities aside, the era of surgical anesthesia was inaugurated in a public demonstration inside the same surgical amphitheater by Wells’ former apprentice and colleague, William Morton (1819–1868). The date was 16 October 1846. Not wishing to risk a repeat of Wells’ perceived fiasco, Morton sought a stronger anesthetic agent. He was advised by the Boston physician and chemist Professor Charles Jackson (1805–1880) to use ether rather than nitrous oxide.

Morton experimented secretly with ether vapor in his office. He also tried ether anesthesia on a goldfish, his pet water spaniel, two assistants and himself. On 30 September 1846, Morton performed a dental extraction under ether on Eben Frost, a Boston merchant. Mr Frost said he “did not experience the slightest pain whatever”.

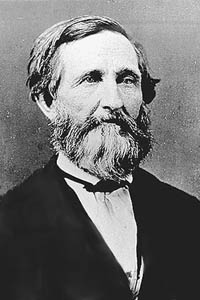

Henry Bigelow

The event was reported over the next two days in the local Boston press, attracting the attention of Henry Bigelow (1818–1890), a smart, sensitive and compassionate young surgeon at Massachusetts General Hospital. Bigelow contacted Morton and Warren and brought the two men together. Morton recognized that ether was suitable for full-blown hospital surgery as well as dentistry; he was now ready to enlighten the world.

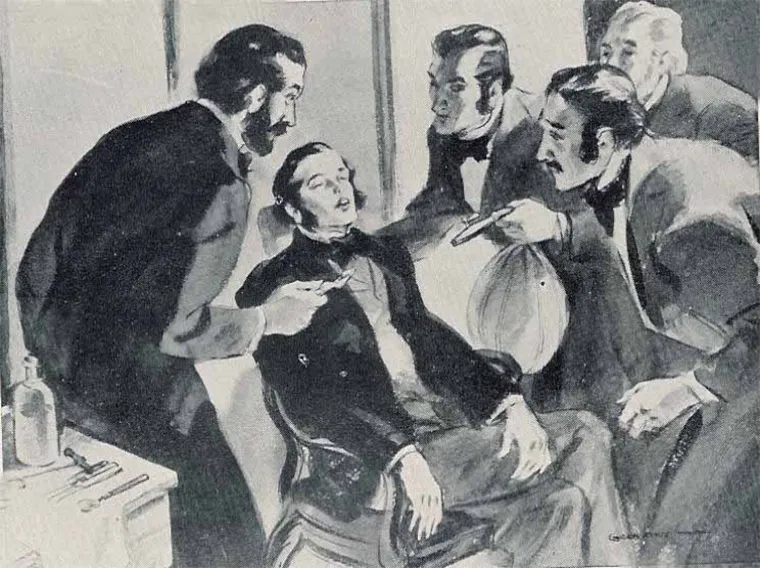

For the public performance, Morton’s patient was a 20-year-old printer, Gilbert Abbott. Morton’s surgeon was again Dr Warren, before whose audience Wells’ disastrous demonstration had taken place less than two years earlier. The spectators included both medical students and surgeons. The operation was the excision of a vascular tumor located under Mr Abbott’s jaw.

Morton’s audience was initially sceptical. The failure of Wells’ demonstration was locally well known; Morton and Jackson had been present in the amphitheater too, Morton because he had left Wells’ practice and signed up as a medical student. This time, however, everyone who watched the spectacle was amazed.

First, Dr Morton briefed his patient on what to do. Before an expectant gallery, Mr Abbott breathed for several minutes from the glass inhaler and its sulphuric ether-soaked sponge. Dr Warren then proceeded to perform the operation. It lasted about ten minutes. Mr Abbott appeared to sleep peacefully throughout, give or take the odd twitch. At no stage did he cry out, though his anesthesia may not have been entirely complete: Abbott later recalled that he “…did not experience pain at the time, although aware that the operation was proceeding.” When the operation was over, the suitably impressed Dr Warren said, “Gentlemen, this is no humbug”.

Astonished, the surgeons present rushed to try out the procedure themselves and to spread the word to the rest of the continent and across the Atlantic, where the innovation rapidly took hold. Bigelow published a report of Morton’s triumph in the Boston Medical and Surgical Journal.

The first use of general anesthesia in Europe is generally credited to the Scottish surgeon Robert Liston (1794–1847). “This Yankee dodge, gentlemen, beats mesmerism hollow”, Professor Liston observed after painlessly amputating a patient’s leg. In principle, more ambitious surgical operations and investigations inside the abdomen, chest and skull were now feasible, though several decades were to pass before they became common. Operations no longer needed to be conducted at breakneck pace, though until Lister’s carbolic spray allowed antisepsis, vast numbers of patients still died of post-operative infection. The Boston surgical amphitheater is now known as the Ether Dome.

Morton himself was eager to patent his procedure and get rich. From the outset, he had sought to disguise the nature of the agent he used: pure ether is a pungent, volatile, aromatic liquid that was unpatentable owing to its long use for other purposes. Morton called his own secret ether-based concoction “The Letheon”; it contained various aromatic oils and opium as well as sulfuric ether. Unsurprisingly, the identity of its prime active ingredient soon leaked out.

For the rest of his life, Morton would be engaged in rancorous disputes with rival claimants to priority. “In science the credit goes to the man who convinces the world, not to the man to whom the idea first occurs,” wrote Francis Darwin in 1914. Morton’s PR machine “won”. More importantly, during the American Civil War (1861–65) Morton personally administered anesthesia to thousands of Union and Confederate soldiers on the battlefield. His grave in Mount Auburn Cemetery near Boston bears the inscription:

William T. G. Morton
Inventor and Revealer of Anaesthetic Inhalation
Before Whom, in All Time, Surgery was Agony
By Whom, Pain in Surgery was Averted and Annulled
Since Whom, Science has Control of Pain

Suffering humanity the world over would find the last line cruelly ironic, and the priority assertion has been questioned; but the main claim of Morton’s epitaph is in substance correct. The reasons for the persistence of suffering in the world are now more ideological than scientific. Pain — and pleasure — are controllable.

The Case for Pain

Despite its obvious advantages, pain-free surgery, dentistry and (especially) pain-free childbirth were opposed by a conservative minority.

The City of Zurich initially outlawed anesthesia altogether. “Pain is a natural and intended curse of the primal sin. Any attempt to do away with it must be wrong”, averred the Zurich City Fathers (Harper’s 31 (1865): 456–7). Their stance contrasts with the more enlightened Swiss attitude of the 1990s. Latter-day Zurich experimented with what became known as Needle Park, where addicts could openly buy narcotics and inject heroin without police intervention.

In Scotland, Sir James Young Simpson (1811–1870), eloquent advocate of chloroform anesthesia and pioneer of painless delivery in childbirth, offended various Calvinist Scots by his presumption. For did not Genesis 3:16 declare: “Unto the woman he said, ‘I will greatly multiply thy sorrow and thy conception; in sorrow thou shalt bring forth children’”?

Religious traditionalists held that mothers ought to fulfil the “edict of bringing forth children in sorrow” as laid down in the Holy Bible. Simpson was accordingly denounced by a vocal minority of ministers and priests as a blaspheming heretic who uttered words put into his mouth by Satan. [see Triumph over Pain by René Fülöp-Miller, New York Library Guild, 1938].

One clergyman saw the new chloroform anesthesia as “a decoy from Satan, apparently offering to bless woman; but, in the end, it will harden society and rob God of the deep earnest cries, which arise in time of trouble for help.” God’s reaction to being robbed of the cries of women in labor is not on record; but there were mutterings that infants delivered painlessly should be denied the sacrament of baptism.

This never came to pass: mid-Victorian religious opposition to anesthesia was neither as widespread nor as organized as some historians were later to suggest. Yet a hostile reaction to human tampering with the God-given order of things hadn’t always been empty rhetoric. In A History of the Warfare of Science with Theology in Christendom (1896), A.D. White relates how “as far back as the year 1591, Eufame Macalyane, a lady of rank, being charged with seeking the aid of Agnes Sampson for the relief of pain at the time of the birth of her two sons, was burned alive on the Castle Hill of Edinburgh; and this old theological view persisted even to the middle of the nineteenth century.”

Fortunately, Professor Simpson knew his Old Testament. He contended that the Biblical “sorrow” was better translated as toil, an allusion to the muscular effort a woman exerted against the anatomical forces of her pelvis in expelling her child at birth. Moreover he cited Genesis 2:21: “And the Lord God caused a deep sleep to fall upon Adam, and he slept: and he took one of his ribs, and closed up the flesh instead thereof”.

Casting God in the role of The Great Anesthetist might seem at variance with the historical record; and not everyone was convinced. A Dr Ashwell (The Lancet, 1848, 1: 291) replied that “Dr Simpson surely forgets that the deep sleep of Adam took place before the introduction of pain into the world, during his state of innocence.” Yet the suggestion that God Himself employed anesthesia helped carry the day.

Simpson had risen from humble origins to become Professor of Obstetrics in Edinburgh. A strong-willed and opinionated controversialist, he was also a compassionate doctor who ministered to rich and poor alike. As a young man, he had almost abandoned his choice of a career in medicine after being shocked at witnessing the suffering that surgical practice then entailed.

Patients undergoing the knife had first to be tightly strapped down or held by several strong men so as to restrain their agonized writhings. Operating rooms had “hooks, rings and pulleys set into the wall to keep the patients in place during operations” (Julie M. Fenster, Ether Day, 2002); victims of surgery still underwent pain as excruciating as anything inflicted in a medieval torture chamber. In that respect, little had changed since the famous Roman physician Cornelius Celsus, writing around 30 A.D., claimed that the ideal surgeon should be “so far void of pity that while he wishes to cure his patient yet is not moved by his cries to go too fast or cut less than is necessary”.

Eighteen hundred years later, surgery was still performed only as a desperate last resort. Operations were typically conducted against a backdrop of hideous screaming or groaning. “The escape from pain in surgical operations is a chimera… ‘Knife’ and ‘pain’ in surgery are words which are always inseparable in the minds of patients”, affirmed the great French surgeon Alfred-Armand-Louis-Marie Velpeau (1795–1867) in 1839.

Surgery could be emotionally traumatic for surgeons as well as their patients. As Edward Everett (1794–1865), President of Harvard, noted with regret: “I do not wonder that a patient sometimes dies, but that the surgeon ever lives.” Yet within little more than a decade, the anesthetic revolution had spread across the globe, and its opponents had been vanquished.

Writing to a fellow doctor in 1836, Simpson had asked: “Cannot something be done to render the patient unconscious while under acute pain, without interfering with the free and healthy play of natural functions?” Simpson tried mesmerism, but it didn’t work.

He first learned of ether anesthesia from his old tutor Robert Liston, by then practising in London. News had travelled to England by letter via the fastest possible route, the transatlantic steamship. Simpson himself used ether in surgery three weeks later, publishing an account in the Edinburgh Monthly Journal of Medical Science in March 1847. However, ether was disagreeably smelly, slow-acting and irritating to the bronchial tubes.

Simpson sought a better agent, more suitable for women in labor. In October, his Liverpool manufacturing chemist, David Waldie, sent him a chloroform sample. Simpson self-experimented. He then used chloroform successfully in his obstetric practice, publishing an enthusiastic account of the advantages of chloroform in the Lancet in November.

Soon, he was insisting that “every operation without it is the most deliberate and cold-blooded cruelty”. But Simpson went further. In his own midwifery practice, he favored general anesthesia for every delivery. He quoted Galen: “Pain is useless to the pained”. Simpson maintained: “All pain is per se and especially in excess, destructive and ultimately fatal in its nature and effects.” Simpson’s sentiments were admirable even if his medical science was sometimes flawed.

Professor Simpson didn’t confine his use of anesthesia to surgical practice. In his search for new and improved anesthetics, he tried everything out on himself and his colleagues. Some accounts of his research on new anesthetizing agents read more like the exploits of a teenage glue-sniffer than a shining example of methodological rigor.

Simpson was fond of using young women as guinea-pigs. A larger-than-life figure, he was in the habit of administering chloroform to overawed dinner-party guests in drawing rooms across the country, and then kissing the young ladies who passed out under its influence — a form of experimentation now unlikely to pass muster with a medical ethics committee.

One of the young ladies, Miss Petrie, wishing to prove that she was as brave as a man, inhaled the chloroform, folded her arms across her breast, and fell asleep chirping “I’m an angel! Oh, I’m an angel!” René Fülöp-Miller describes one such scene:

“On awakening, Simpson’s first perception was mental. ‘This is far stronger and better than ether,’ said he to himself. His second was to note that he was prostrate on the floor. Hearing a noise, he turned round and saw Dr. Duncan beneath a chair – his jaw dropped, his eyes staring, his head bent under him; quite unconscious, and snoring in the most determined manner. Dr. Keith was waving feet and legs in an attempt to overturn the supper table. The naval officer, Miss Petrie and Mrs. Simpson were lying about on the floor in the strangest attitudes, and a chorus of snores filled the air.” “They came to themselves one after another. When they were soberly seated round the table once more, they began to relate the dreams and visions they had had during the intoxication with chloroform. When at length Dr. Simpson’s turn came, he blinked and said with profound gratification: ‘This, my dear friends, will give my poor women at the hospital the alleviation they need. A rather larger dose will produce profound narcotic slumber.’”

Unfortunately, Simpson failed to realize that chloroform is a potentially dangerous agent for the patient (or the recreational user) even if employed under ideal conditions. It can cause ventricular fibrillation of the heart, a potentially lethal complication. Initially, Simpson thought that chloroform anesthesia was absolutely safe; he then blamed early fatalities and adverse reactions to the procedure not on its depression of cardiovascular and respiratory function, but on the incompetence of English physicians.

He was mistaken; but myths and misconceptions about the new operating procedure ran rife among professionals and lay people alike. One popular rumor supposed that anesthetics provoked carnal fantasies, converting childbirth into a gigantic orgasm. Some physicians thought likewise.

Anesthetics were supposed to turn Victorian women into harlots.

The American Journal of Medical Surgery (1849 18:182) cites a leading obstetrician who “insist[ed] on the impropriety of etherization…in consequence of the sexual orgasm under its use being substituted for the natural throes of parturition”. In A Lecture on the Utility and Safety of the Inhalation of Ether in Obstetric Practice (1847, Lancet 1, 321–323), Dr Tyler Smith reported the case of a young Frenchwoman who gave birth under ether anesthesia and afterwards confessed to having been dreaming of sexual intercourse with her husband.

“To a woman of this country the bare possibility of having feelings of such a kind excited and manifested in outward uncontrollable actions would be more shocking even to anticipate than the endurance of the last extremity of physical pain”, Dr Smith observed. As recounted in Linda Stratmann’s illuminating Chloroform: the Quest for Oblivion (2003), this incident was taken up by Simpson’s opponent Dr George Thompson Gream of Queen Charlotte’s Lying-in Hospital. In Remarks on the Employment of Anaesthetic Agents in Midwifery (London, John Churchill, 1848), Gream offered his readers the further salacious detail that the wanton Frenchwoman had also offered to kiss a male attendant.

Gream was confident that as soon as women in general heard what anesthetics might do to them “they would undergo even the most excruciating torture, or I believe suffer death itself, before they would subject themselves to the shadow of a chance of exhibitions such as have been recorded….the facts are unfit for publication in a pamphlet that may fall into the hands of persons not belonging to the medical profession.”

Fortunately, Gream overestimated the stoicism and virtue of English women. His views were extreme even among strait-laced prudes; most doctors did not take them seriously even at the time. But worries about drug-induced sexual disinhibition were scarcely peculiar to the Victorians.

Periodic moral panics over drug-fuelled sex have always tended to be relatively independent of the pharmacodynamic properties of the agent in question. Thus in the popular press, Chinese immigrants in the age of “Yellow Peril” were intent on luring young white women into their opium dens to become sex slaves; GHB periodically turns chaste damsels into nymphomaniacs; and in the era of “Reefer Madness”, marijuana supposedly transformed healthy youngsters into sexual deviants prone to inter-racial sex. Other examples are legion.

On a more realistic note, cocaine use can indeed promote promiscuous hypersexuality, though not if used as a local anesthetic in dentistry; and the sense of universal love and trust promoted by MDMA can lead to “inappropriate bonding” and unprotected sex.

In Victorian Britain, not all women who experienced troublesome post-surgical imagery were deluded. A minority of doctors made a habit of seducing insensible female patients and ascribing any confused recollections of impropriety on their part to a known side-effect of the anesthetic.

But the notion that anesthesia might promote lewd thoughts and disinhibited behaviour did little to promote the acceptance of painless delivery in polite society. Men especially were prone to believe that reducing mothers to a helpless state of unconsciousness while they enacted their life-defining childbearing role was unnatural and immoral. “The very suffering which a woman undergoes in labor is one of the strongest elements in the love she bears for her offspring,” said one clergyman.

In The Lancet 2 (1849), 537, English doctor Robert Brown explained how God and Nature “walked hand in hand”; painless delivery was an invention of the Devil. In an era when most people still subscribed to the metaphysics of vitalism, Simpson’s opponents were convinced that the experience of pain must serve some essential purpose. “Pain in surgical operations is in a majority of cases even desirable, and its prevention or annihilation is for the most part hazardous to the patient”, alleged Simpson’s adversary Dr James Pickford, though without adducing any compelling evidence why this might be so.

At the South London Medical Society, sentiment ran strongly against painless surgery. Addressing a meeting held shortly after Simpson’s original chloroform paper, the well-respected Dr Samuel Gull declared that it was a “dangerous folly to try to abolish pain”. Even if its abolition were morally desirable, Dr Gull averred, “ether was a well-known poison”. [P. Stanley, For Fear of Pain: British Surgery 1790–1850 (2003)]. Ether and chloroform were described by Gull’s colleague Dr Cole as “pernicious”. A Dr Nunn “failed to see how surgeons could get on without pain”. The views of François Magendie (“La Douleur Toujours!”) across the Channel were quoted with approval.

Dr Radford, drawing the meeting to a close, concluded that “there was nothing but evil” in the new-fangled procedure [see T. Dormandy, The Worst of Evils: The Fight Against Pain (2006)]. The rationalization of human suffering is widely shared among foes of the medical prevention or annihilation of emotional pain today, and is defended on equally tenuous metaphysical grounds.

Queen Victoria: “Doctor Snow gave that blessed chloroform and the effect was soothing, quieting, and delightful beyond measure.”

In England, at least, the practice of anesthesia during childbirth won greater respectability following its widely-publicized use on Queen Victoria. The delivery in 1853 of Victoria’s eighth child and youngest son, Prince Leopold, was successful: chloroform was administered by Dr John Snow (1813–1858) of London, the world’s first anesthesiologist/anesthetist. In 1847 Snow had published On the Inhalation of the Vapour of Ether in Surgical Operations, a scientific milestone. Dr Snow sought to put the principles of anesthesia on a sound scientific basis. Critically, Snow introduced inhalers designed to deliver an accurate and controlled “dose” of anesthetizing agent to each closely monitored patient.

Prudently, in Queen Victoria’s case the dosage of chloroform induced analgesia rather than complete anesthesia. “Dr Snow gave that blessed chloroform and the effect was soothing, quieting and delightful beyond measure”, Her Majesty reported. If the Queen had died in consequence, then the progress of anesthesia might have been set back a generation; fortunately, she survived unscathed. Anesthesia à la reine even became fashionable in high society.

Patients and many mothers-to-be were understandably thrilled. One mother was so delighted by a painless delivery that she named her child Anesthesia. Yet controversy didn’t abate altogether. The Lancet was scandalized at the use of anesthesia on the Queen. The distinguished journal even professed to doubt if the story were true, since chloroform “has unquestionably caused instantaneous death in a considerable number of cases” [“Administration of Chloroform to the Queen”, The Lancet 1 (May 14, 1853): 453].

As its commentary noted with alarm, “Royal examples are followed with extraordinary readiness by a certain class of society in this country.” The Lancet wasn’t persuaded of the need for general anesthesia in dentistry either. After a death in an Epsom dentist’s chair in 1858, its editor warned: “It is chiefly fashionable ladies who demand chloroform. This time it was a servant girl who was sacrificed; the next time it may be a Duchess.”

Though snobbish and rhetorically overblown, The Lancet’s caution was far from amiss. One problem was the lack of controlled clinical trials comparing use of chloroform and ether. Chloroform is faster-acting and easier to use, but ether is generally safer.

Chloroform use also had a shorter history. A colorless, volatile liquid with a characteristic smell and a sweet taste, chloroform was discovered in July 1831 by American physician Samuel Guthrie (1782–1848), and independently a few months later by Eugène Soubeiran (1797–1859) in France and Justus von Liebig (1803–73) in Germany.

Prophetically, Guthrie’s eight-year-old granddaughter Cynthia once anesthetized herself by accident after inhaling chloroform vapor; she was in the habit of dipping her finger into the liquid and tasting it. “Guthrie’s sweet whiskey” became a popular local tipple; its consumption induced what Guthrie described as “a lively flow of animal spirits, and consequent loquacity.” Chloroform soon found its way into patent medicines. The most famous of these concoctions was chlorodyne, a tincture of chloroform and morphine designed as a remedy for cholera by British army surgeon Dr J. Collis Browne.

Unlike ether, chloroform isn’t flammable, an important virtue in a candlelit era. Chloroform is also less of a chemical irritant to the respiratory passageways. However, it is a cardiovascular depressant. More insidiously, chloroform has toxic metabolites that can cause delayed-onset damage to the liver. Like most anesthetics, it has a relatively narrow therapeutic window.

This posed a particular risk when chloroform was administered in the preferred Edinburgh fashion by folded silk handkerchief. There was no set dose; when chloroform was used in quantities suitable for anesthesia rather than inebriation, it was simply administered until the patient became insensible. “The notion that extensive experience is required for the administration of chloroform is quite erroneous, and does harm by weakening the confidence of the profession in this invaluable agent”, declared the father of antiseptic surgery Joseph Lister (1827–1912), Surgeon to the Royal Infirmary and Professor of Surgery in the University of Glasgow.

With hindsight, this opinion was ill-judged and dangerously naïve. The first known death under anesthesia was reported as early as January 1848: the case of Hannah Greener, a 15-year-old girl who died under chloroform while undergoing toenail excision. In response to such early tragedies, Dr Joseph Clover (1825–1882) developed the first apparatus to deliver chloroform in controlled concentrations in 1862, and a “portable regulating ether-inhaler” in 1877. Yet serious risks remained even as technology to control the depth of anesthesia improved.

Anesthesiology as practised in the modern era is recognized as a technically demanding medical specialism with a long and arduous apprenticeship. Even now, anesthetics can occasionally cause serious complications: liver or kidney damage, strokes, heart attacks, seizures, pneumonia, low blood pressure and allergic reactions are all known risks.

In Victorian England, there was none of our sophisticated cardiorespiratory monitoring equipment, endotracheal intubation, ventilators and extensive perioperative care for the patient. Moreover, the epoch-making transition to pain-free surgery wasn’t initially accompanied by an appreciation of the germ theory of disease and the importance of asepsis: this critical breakthrough would await the discoveries of Semmelweis, Pasteur, Koch and Lister.

Tragically, variations on “The operation was a success but the patient died” remained a common refrain in the aftermath of surgery for several decades to come. Almost half of patients undergoing some kinds of invasive surgery in the 19th century still died soon thereafter, mainly from septicemia.

There are in truth few obstetric situations today where general anesthesia is either medically or humanely essential: use of local or regional anesthesia usually suffices for a normal vaginal delivery, though millions of mothers in labor throughout the world endure grossly inadequate pain-relief. But four years after the birth of Prince Leopold, Dr Snow again used chloroform for the birth of Victoria’s youngest daughter, Princess Beatrice. Dr Snow also delivered a baby for the daughter of the Archbishop of Canterbury. In the end, royal and ecclesiastical, if not divine, sanction was enough to silence the critics.

Rhetorical passions nonetheless ran as high across the Atlantic as they did in Great Britain. In 1847, The Philadelphia Presbyterian thundered, “Let everyone who values free agency beware of the slavery of etherization”. The American Temperance movement took an equally dim view of surgical anesthesia. It regarded etherization as a form of intoxication that posed a threat to the virtue of female patients. Although surgeons and their patients mostly embraced pain-free operations with gratitude, a motley collection of conventionally-minded doctors, dentists and scientists voiced vehement opposition.

Dr William Henry Atkinson, first president of the American Dental Association (ADA), protested, “I think anesthesia is of the devil, and I cannot give my sanction to any Satanic influence which deprives a man of the capacity to recognize the law! I wish there were no such thing as anesthesia. I do not think men should be prevented from passing through what God intended them to endure.” [see Sacred Pain: Hurting the Body for the Sake of the Soul, by Ariel Glucklich, Oxford University Press, 2001]. Atkinson apparently conceived of pain as spiritually uplifting. Pain wasn’t an expression of God’s punishment of man, but of His paternal affection.

Theologians in particular were prone to believe that agony bravely borne was spiritually uplifting. In Milan, Cardinal Berlusconi, distant relative of the later Italian premier, delivered a much-cited sermon condemning advocates of painless surgery for seeking to abolish “one of the Almighty’s most merciful provisions” [Unsere Schmerzen (Vienna, 1868)].

In human society, and especially the Judaeo-Christian tradition, heroes and heroines who stoically endure the greatest suffering are usually accorded the greatest esteem. An unheroic tendency toward self-pity is despised. Thus in Canada, surgeons-general in the army initially refused to use anesthetics for operations on the grounds that their manly soldiers could take such trifles in their stride. In the USA, regular army surgeon John B. Porter banned use of anesthetics on stricken soldiers under his command, allegedly on grounds of safety but probably in part because “the easiest pain to bear is someone else’s”. Our Darwinian concepts of moral strength and nobility of character are bound up with the ability to withstand extremes of suffering, whether the pain is called “physical” or “emotional” or both.

Alas, sensitive psychological weaklings are seldom respected by Society or Mother Nature. In the case of “physical” pain, early critics of anesthesia held that the prospect of rendering patients insensible for the purposes of surgery was dehumanizing. Pain-free existence supposedly robbed human beings of their essential humanity and dignity. Unfortunately the dignity of unbearable pain is frequently lost on its victims.

This view did not go theologically unchallenged. A few religiously-minded physicians used theological arguments to justify the medical use of anesthetics. In On the Property of Anaesthetic Agents in Surgical Operations (1855), Dr Eliza Thomas describes anesthesia as “a second dispensation”: a gift from God. But clerical enthusiasm, as distinct from acquiescence, was uncommon.

How would God be able to punish His children for unrighteousness if the main weapon at His disposal, namely pain, were taken away? The argument that doctors and surgeons should not “play God” is common today even among those who pay homage to Nature rather than the Almighty. Naturopaths, homeopaths and herbalists were as hostile to “unnatural” anesthesia as they are to the interventions of contemporary scientific medicine.

Critics of anesthesia could count on academic allies. Doctor Charles Delucena Meigs (1792–1869), Professor of obstetrics and diseases of women at Jefferson Medical College, was of the opinion that labor-pains were “a most desirable, salutary and conservative manifestation of the life force.” This “life force” was weakened by etherization, which should thus be avoided.

Dr Meigs thought chloroform was objectionable too; he regarded taking it as little different from getting drunk. His degree of empathy with women in labor is captured in his remark of a woman that she “has a head almost too small for intellect and just big enough for love”. More reasonably, Meigs pointed out that the mechanism and long-term effects of anesthesia on the brain were unknown.

Antipathy to painless surgery soon entered scholarly print. The New York Journal of Medicine [9 (1847) 1223–25] declared that pain was vital to surgical procedure, and that its removal was harmful to the patient. This notion now sounds quaint, perhaps as quaint as our own assumption that a capacity for emotional pain is indispensable to health — or at least an essential diagnostic guide to problems — may one day seem to our descendants.

Anesthesia was supposed to cause loss of “vital spirit”.

But the anxiety which the journal’s reaction expressed was common. Francois Magendie (1783–1855), the famous French physiologist, neurologist and puppy vivisectionist, held that pain was essential to life. Magendie believed that anesthesia reduced the “patient to a corpse”. The loss of “vital spirit” induced by anesthesia would supposedly endanger the patient in the operating theatre — and delay or prevent recovery after an operation. Like supporters of the “heroic medicine” of Benjamin Rush (1745–1813), Magendie supposed that etherization sapped the life force.

After Darwin and the triumphs of organic chemistry, we are more likely to view each other as neurochemical robots devoid of vital spirit; but physical pain had previously been so intimately bound up with life that many 19th century philosophers and scientists assumed that suffering must be inseparable from the mysterious life force itself. Sections of the medical profession even valued pain and its manifestations as an encouraging sign of a patient’s vitality — and the effectiveness of a doctor’s prescription.

In A Calculus of Suffering: Pain, Professionalism, and Anesthesia in Nineteenth-Century America (New York: Columbia University Press, 1985), Martin Pernick quotes physician Felix Pascalis: “The greater the pain, the greater must be our confidence in the power and energy of life”. By contrast, anesthesia evoked death.

Contemporary media commentators are prone to express similar sentiments, if not the same idiom, when conjuring up the spectre of a soma-driven Brave New World. Within the lifetime of people now living, biotechnology threatens to abolish life’s rich tapestry of psychological distress. Suffering in its many guises is assuredly terrible, its rationalizers acknowledge; but its loss would deprive us of our humanity, freedom and dignity, and perhaps of an indefinable vital energy too, though the expression itself has fallen into disuse.

Pain, its apologists suggest, is somehow more authentic than happiness. Certainly, for evolutionary reasons euphoric well-being has hitherto been impossible to sustain for most of us, irrespective of its propositional content. So there is a tendency to regard its episodes as “false”, or alternatively as rare and necessarily elusive “peak experiences”. Perhaps its “reality” may seem greater if invincible bliss becomes part of the genetically coded fabric of conscious life rather than a drug-induced aberration.

Other objections to the anesthetic revolution were harder to refute. A number of critics were worried that anesthetics merely immobilized the body and induced amnesia but didn’t extinguish pain. The patient might then be left paralyzed under the surgeon’s knife but fully conscious — trapped in incommunicable agony.

Although chloroform and ether are (we believe) innocent of any such charge, a terrible medical error was committed almost a century later with a neuromuscular blocking agent, the South American Indian arrow poison curare. In its day, curare represented a significant surgical advance. Although its use necessitated intubation of the trachea and mechanical ventilation of the patient’s lungs, its introduction led to a decline in anesthetic mortality. This is because curare lacks the depressant effects of general anesthetics on the heart.

Unfortunately, some surgeons and anesthetists initially assumed that curare was an anesthetic as well as a muscle-relaxant. A few patients endured surgery under curare while paralyzed and awake. But rather than forgetting their nightmare afterwards, the victims were traumatized: curare does not induce amnesia. Although this particular mistake has not been repeated (in operations on humans, at least), the use of neuromuscular blocking agents in conjunction with anesthetics still increases the risk of awareness during surgery.

The Conquest of Suffering

So how close are the parallels between arguments used against technologies to relieve emotional pain and somatic pain? Where, if at all, does the analogy break down?

There are of course disanalogies between, on the one hand, the use of anesthetics and analgesics to prevent pain in clinical medicine and, on the other hand, the use of therapeutic agents to dispel the “natural” mental pain of everyday Darwinian life.

For a start, whereas painkillers typically diminish the intensity of consciousness, and general anesthesia suppresses it, post-genomic medicine promises to deepen, diversify and intensify the quality of our awareness.

By contrast, too, strong analgesics tend to diminish the functional capacity of the user, and anesthetics effectively abolish it, whereas mood-enriching designer drugs and gene therapies of the future are more likely to extend our intellectual, physical, sensual and aesthetic capacities, and possibly even our spiritual, introspective, empathetic and moral sensibilities as well.

There are further disanalogies. Undergoing total anesthesia for surgery involves surrendering control of one’s body to others: one early argument against surgical anesthetics was that they left a woman defenseless, unable to protect her virtue should her half-naked body inflame the lust of her (male) surgeons, and perhaps prey to wanton and disinhibited behaviour herself.

By contrast, electing to take long-acting mood-brighteners is typically empowering. Other things being equal, heightened mood at once increases one’s capacity for autonomous action, promotes enhanced social status in primate dominance-hierarchies, and strengthens one’s sense of agency — the obverse of the “learned helplessness” and submissive behaviour characteristic of depression.

None of the above should obscure the critical similarity between the two fundamental categories of suffering. Insofar as they can be distinguished, both somatic and emotional pain are at once profoundly distressing and, potentially, functionally redundant in the era of post-genomic medicine.

Their functional roles can be multiply realized in other ways that don’t involve the texture (“what it feels like”) of unpleasantness — insofar as their functional roles need to be realized at all. Both somatic and emotional pain share common substrates in the molecular machinery of the nerve cell. Intuitively, extreme “physical” pain is worse.

Yet it is unbearable “emotional” pain that causes almost a million people in the world to kill themselves each year. Emotional pain causes millions of “para-suicides” and cases of self-injurious behaviour; and emotional pain induces tens of millions of depressive people periodically to wish they could die or didn’t exist. In practice, the two kingdoms of pain are intimately linked. Untreated pain commonly leads to depression, and depression is frequently manifested in somatic symptoms.

There are of course (many) complications before the conquest of suffering can ever be complete. Sustaining lifelong bliss and a capacity for critical insight isn’t straightforward, especially if such bliss is to be empathetic and socially responsible rather than egotistic. Any intelligent organism depends on a complex web of interlocking, genetically regulated feedback mechanisms to flourish. So something as central to primordial human existence as aversive experience can’t be edited out of our lives without ensuring a rich network of functional analogues to take its place — short of wireheading.

Fortunately, there is nothing functionally indispensable to intelligent mind about the raw phenomenal texture of pain, whether it’s the searing agony provoked by acute tissue trauma or the aching despair of melancholic depression. For phenomenal pain is not the same as sensory nociception; nor should its “psychological” counterparts be equated with the functional role they play in the informational economy of Darwinian minds.

Our imminent capacity to edit and rewrite our genetic code means that other information-processing options can be explored too. At the most basic, we can ratchet up our normal mood-levels so we can all feel happy and emotionally fulfilled. Critically, dips in gradients of an exalted well-being that (stably) fluctuates around an elevated “hedonic set-point” can potentially signal “danger” or “error” (and motivate us to avoid them) at least as powerfully as do gradients of suffering.

If pleasure and pain were merely relative, then such homeostatic dips in exalted awareness would actively hurt; as it is, they may in future play an error-correcting role merely (dimly) analogous to the bestial horrors of the past. Redesigning the opioid neurotransmitter system will play a role in this recalibration; but re-engineering the architecture of the mesolimbic dopamine system will be a vital step toward resetting our reward circuitry so we can all be dynamically superwell throughout our lives. For the meso(cortico-)limbic dopamine system mediates not just pleasure, but appetitive behaviour and so-called incentive-motivation.

Revealingly, dopamine-releasing drugs act as powerful analgesics as well as euphoriants; by contrast, some 50% of victims of the “dopamine deficiency disorder” better known as Parkinson’s disease report symptoms of physical pain. More generally, a large minority of people in contemporary human society are driven mainly by gradients of misery, discomfort and discontent. A small minority are animated primarily by gradients of well-being, and many — but not all — of this small minority are labelled either (hypo)manic or bipolar. Most people fall somewhere in between in their daily mood spectrum. “Hyperthymic” people animated by gradients of lifelong happiness without mania are currently medically rare freaks of nature, though not unknown.

Within the next few decades, however, humanity will have the pharmaceutical and genetic opportunity to choose whatever range of the affective axis we wish to occupy as the backdrop to our lives. To date, we have barely glimpsed the potential extremes of the pleasure-pain axis; in the case of the dark side of sentience, it may be hoped (and statistically expected) that we never will. More ambitiously, the new biotechnologies promise to extend our range of choices way beyond tools for crude, unidimensional mood-modulation.

For we’ll have the tools to re-design the neural basis of our personalities to repair the deficits of natural selection. Even better, ethically speaking, the application of germline hedonic engineering can ensure that parenthood won’t entail bringing any more suffering into the world. Misery becomes physically impossible if the genetic code for its biological substrates is missing. Thus having children needn’t, as now, entail causing more heartache as well as episodic happiness. Procreation becomes permissible even for the negative utilitarian who finds it impossible to practise ethical parenthood with a Darwinian genome.

Yet will some form of real “emotional” pain still be endemic to future civilization, just as “physical” pain was endemic to the lives of our ancestors — and as it lingers among disease-stricken victims of opiophobia even today? Or is it possible our post-Darwinian descendants will enjoy lifelong mental superhealth that’s orders of magnitude richer than our own (though use of “health” terminology to describe our own malaise-ridden lives may be something of a misnomer)?

From an information-theoretic perspective, what matters functionally and computationally to neurochemical robots like us is not our absolute “hedonic set point” on the pleasure-pain axis. What counts is that we are informationally sensitive to fitness-relevant changes in our internal and external environment. Our contemporary pains and pleasures reflect the genetic “fitness tokens” of the African savannah; in consequence, we’re stuck, scrabbling around in a severely sub-optimal homeostatic rut. It would be surprising if the genetic fitness tokens of our hominid ancestors were to remain adaptive in a post-Darwinian era of planned parenthood and paradise-engineering.

Nociception Without Tears

Not everyone has the physiological capacity to suffer pain. A few people quite literally do not understand what the term means. Several syndromes of congenital insensitivity to pain (CIP) are known. Their affective counterparts, sporadic cases of lifelong unipolar euphoric (hypo)mania and extreme hyperthymia without mania, occur in different subtypes; they are rare too.

The opposite syndromes of chronic pain and hyperalgesia, and chronic unipolar depression or dysthymia, are much more common, presumably reflecting the comparative selection pressures of our ancestral environment. In most cases today, a lack of pain-sensitivity can plausibly be presented as a deficit in signal-processing capacity rather than a harbinger of post-Darwinian superhealth.

This judgment may be premature. In retrospect, 19th century opponents of painless surgery were wrong to claim that pain was an essential diagnostic aid to surgical medicine, and wrong to claim that anesthesia extinguished a person’s “vital spirit”. Yet might opponents of genetically enriched life rooted in gradients of bliss be right to claim that emotional pain will always remain an indispensable diagnostic aid to danger and error?

Perhaps so. But abolitionists who seek life-long high-functioning well-being can point to two families of alternative:

- the futuristic “cyborg” solution. We know that silicon robots can be constructed with spectroscopic (etc) sensors that can “see” and “hear” more sensitively than human beings, even though this greater discriminative capacity isn’t matched by the felt textures of phenomenal color or sound.

Artificial silicon (etc) systems can also be designed or trained up to be more sensitive to noxious insults and structural damage. In future, modular implants can benefit rare victims of congenital anesthesia who are prone to life-threatening injury through lack of efficient feedback-signalling mechanisms.

But if the rest of us, too, ever want to augment ourselves with modules performing an analogous adaptive role, i.e. efficient sensory nociception and avoidance behaviour without the cruel textures of phenomenal pain, then enlisting all sorts of smart neurochips and prostheses is technically feasible — whether or not their widespread adoption is ever sociologically realistic.

Analogously, the information-theoretic role of our nastier emotions (jealousy, spite, etc) can in principle be replicated without the sinister textures bequeathed to them by evolution. It may be wondered, though, whether the “functional role” of modules mediating some of our baser feelings couldn’t simply be discarded altogether along with their vicious “raw feels”.

It’s hard to see what jealousy is good for beyond its tendency to maximize the inclusive fitness of our genes in the ancestral environment of adaptation. Our descendants may judge that neither its texture nor its functional role has any redeeming value, and may therefore elect to discard both.

For sure, most people who aren’t transhumanists are unexcited at the prospect of updating the “fitness tokens” of our evolutionary past, let alone implanting neural prostheses that tamper with their intimate soul-stuff. But it’s worth stressing that this bionic strategy isn’t committed to turning us into hyperintelligent “zombies”. This is because desirable facets of our subjective consciousness can be exquisitely enriched and amplified even as the nastier phenomenology of Darwinian life is phased out.

Thus our descendants may not just be super-smart but hyper-sentient too. If so, then “awakened” life is likely to be founded on gradations of blissful hypersentience that replace gradations of Darwinian malaise. The nature of what we’ll be happy “about” is currently hard to guess; but this uncertainty reflects our ignorance rather than the likelihood of some kind of collective cosmic orgasm.

- the alternative organic “software” or “wetware” option. This family of scenarios for high-functioning well-being takes either (a) pharmacological or (b) genetic guises, or combines both. But each variant assumes that organic biochemistry and molecular genetics can transcend their terrible genesis in a Darwinian world red-in-tooth-and-claw without significant intracranial